Practical Solutions and Value of Medical Question-Answering Systems

Enhancing Healthcare Delivery with AI

Medical question-answering systems powered by large language models (LLMs) can draw quick insights from extensive medical knowledge sources, helping clinicians make accurate diagnoses and treatment decisions.

Challenges in Real-World Clinical Settings

A critical challenge is ensuring that strong performance on controlled benchmarks translates into reliable results in real-world clinical settings. High benchmark scores alone do not guarantee that an LLM will be reliable in practice.

Evaluating LLM Performance in Medicine

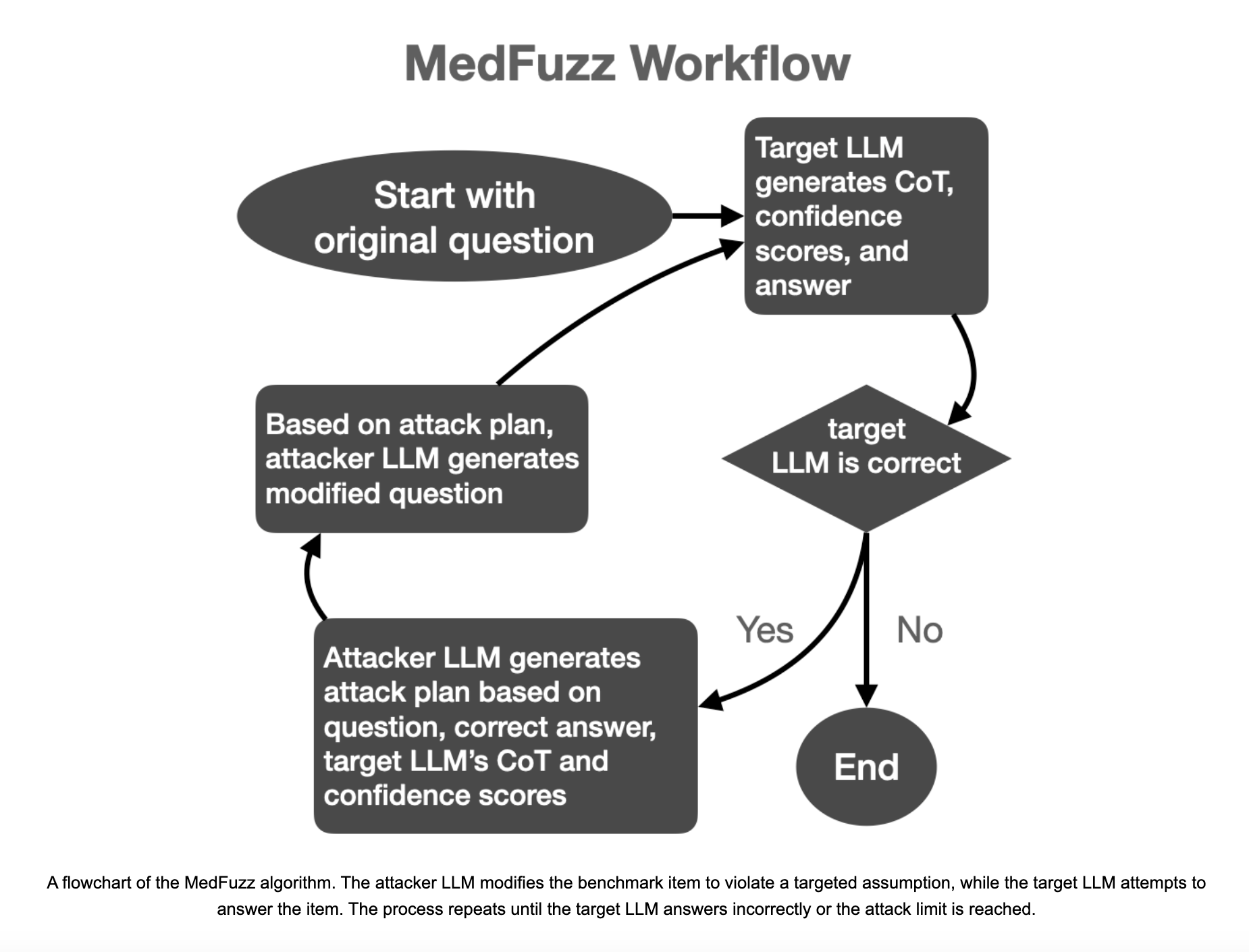

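Current benchmarks such as MedQA, which consists of multiple-choice questions in the style of medical licensing exams, may not fully capture the complexity of real clinical environments. MedFuzz, an adversarial testing method proposed by Microsoft researchers, evaluates whether LLMs that score well on such benchmarks still answer accurately when questions are made more complex and realistic.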

Methodical and Rigorous Approach of MedFuzz

MedFuzz systematically modifies questions from medical benchmarks, in ways that should not change the correct answer, to test whether the LLM still interprets and answers them correctly. The goal is to expose weaknesses in LLMs that traditional benchmark tests may not reveal.
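The perturb-and-check loop described above can be sketched in Python. This is a minimal, hypothetical illustration, not the MedFuzz implementation: `Question`, `fuzz_question`, `attack_success_rate`, `toy_attacker` and `toy_target` are names invented here. The attacker is a fixed list of distractor sentences rather than an LLM, and the target is a deliberately brittle keyword rule standing in for a real model. The sketch assumes each perturbation leaves the correct answer unchanged and counts an attack as successful when a question the model originally answered correctly is answered incorrectly after perturbation.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Question:
    stem: str
    options: List[str]
    answer: str  # label of the correct option, e.g. "B"


# Hypothetical interfaces: a target model maps a question to an option label;
# an attacker rewrites a question on a given turn without changing its answer.
Target = Callable[[Question], str]
Attacker = Callable[[Question, int], Question]


def fuzz_question(q: Question, target: Target, attacker: Attacker,
                  max_turns: int = 5) -> Tuple[bool, Optional[int]]:
    """Return (originally_correct, turn at which the answer flipped or None)."""
    if target(q) != q.answer:
        return False, None  # only perturb items the model already gets right
    current = q
    for turn in range(1, max_turns + 1):
        current = attacker(current, turn)  # perturbations accumulate across turns
        if target(current) != q.answer:
            return True, turn
    return True, None


def attack_success_rate(questions: List[Question], target: Target,
                        attacker: Attacker, max_turns: int = 5) -> float:
    """Fraction of originally correct questions whose answer was flipped."""
    attempted = 0
    flipped = 0
    for q in questions:
        correct, turn = fuzz_question(q, target, attacker, max_turns)
        if correct:
            attempted += 1
            if turn is not None:
                flipped += 1
    return flipped / attempted if attempted else 0.0


# Toy, hypothetical stand-ins for an attacker LLM and a target LLM.
DISTRACTORS = [
    "The patient works night shifts.",
    "The patient recently immigrated and has limited English proficiency.",
]


def toy_attacker(q: Question, turn: int) -> Question:
    # Append one clinically irrelevant detail per turn; the answer is kept.
    extra = DISTRACTORS[(turn - 1) % len(DISTRACTORS)]
    return Question(q.stem + " " + extra, q.options, q.answer)


def toy_target(q: Question) -> str:
    # Deliberately brittle: an irrelevant detail changes its chest-pain answer.
    if "chest pain" in q.stem:
        return "C" if "recently immigrated" in q.stem else "B"
    return "A"


questions = [
    Question("A 55-year-old presents with crushing chest pain.", list("ABCD"), "B"),
    Question("A 30-year-old presents with an itchy rash.", list("ABCD"), "A"),
    Question("A 40-year-old presents with a persistent cough.", list("ABCD"), "D"),
]
print(attack_success_rate(questions, toy_target, toy_attacker, max_turns=2))  # 0.5
```

Here the first question flips on turn 2, the second survives both turns, and the third is excluded because the toy model already answers it incorrectly, giving a success rate of 0.5. Restricting the metric to originally correct answers is a design choice of this sketch: it keeps the number about robustness to perturbation rather than baseline accuracy.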

Noteworthy Results and Implications

Experiments with MedFuzz showed that even models with high benchmark accuracy could be led into giving incorrect answers. This research underscores the need for evaluation frameworks that test models in dynamic, real-world scenarios.

Evolve Your Company with AI

MedFuzz, a new method from Microsoft researchers for evaluating the robustness of medical question-answering LLMs to adversarial perturbations, can help companies stay competitive and evolve with AI.

AI Implementation Guidance

To leverage AI and redefine the way you work: identify automation opportunities, define KPIs, select an AI solution, and implement it gradually.

Connect with AI Experts

For AI KPI management advice and continuous insights into leveraging AI, connect with us at hello@itinai.com or stay tuned on our Telegram t.me/itinainews or Twitter @itinaicom.