Practical Solutions and Value of Instruction Pre-Training (InstructPT)

Instruction Pre-Training Framework

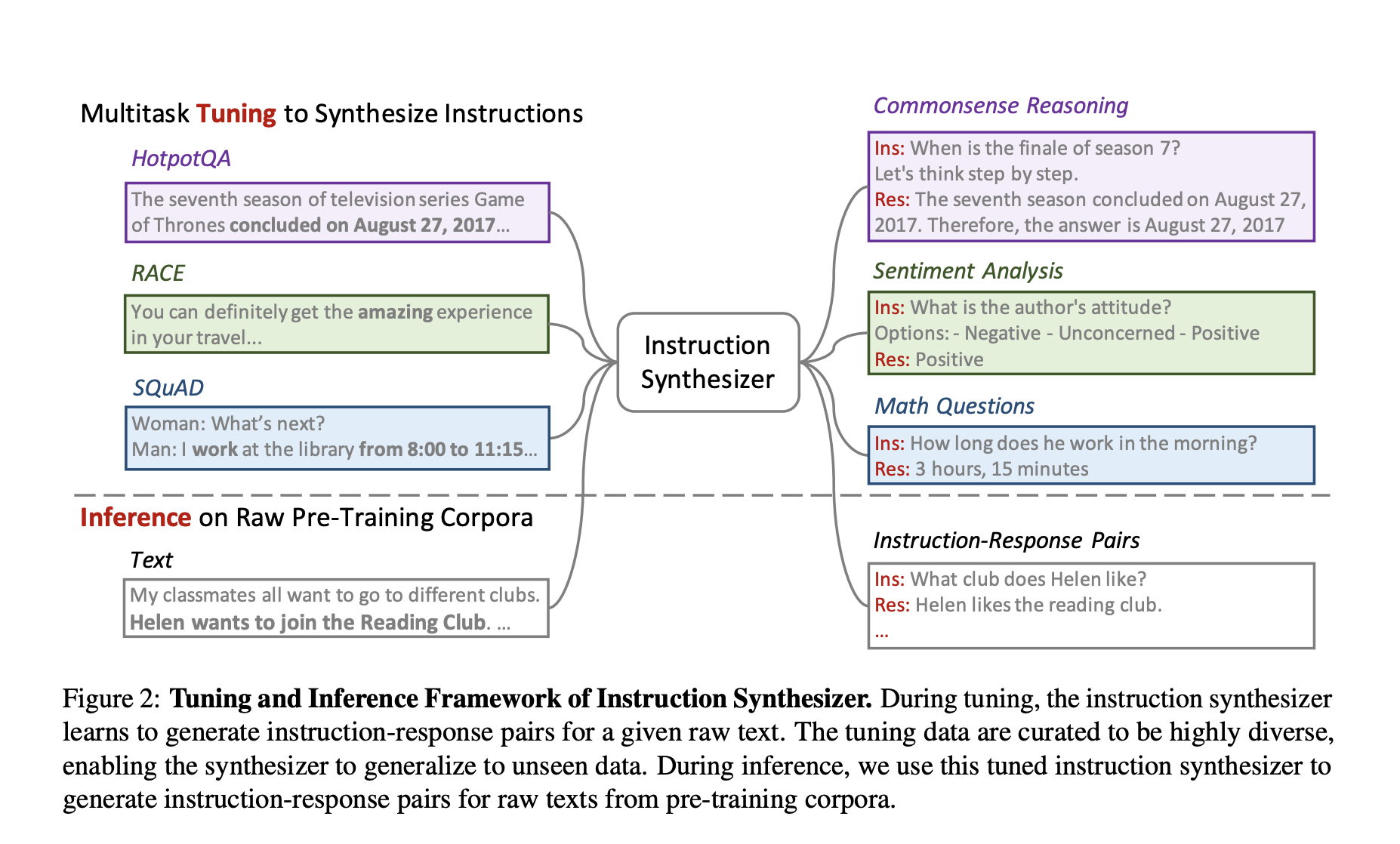

Instruction Pre-Training augments raw text with synthesized instruction-response pairs and then pre-trains the language model on the augmented corpus. An instruction synthesizer converts raw corpora into instruction-augmented corpora. Because the synthesizer is fine-tuned on diverse data, it can generate relevant and varied instruction-response pairs from raw texts it has not seen before.
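The data flow described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: `toy_synthesizer` is a hypothetical stand-in for the fine-tuned instruction synthesizer (a real one would be a language model), and the `Question:` / `Answer:` layout is an assumed template chosen only for readability.

```python
from typing import Callable, List, Tuple

# An instruction-response pair: (instruction, response).
QAPair = Tuple[str, str]


def toy_synthesizer(raw_text: str) -> List[QAPair]:
    """Hypothetical stand-in for the fine-tuned instruction synthesizer.

    It returns one hard-coded question whose answer is the first sentence
    of the passage. A real synthesizer would generate the pairs with an LM.
    """
    first_sentence = raw_text.split(".")[0].strip()
    return [("What is the main point of the passage?", first_sentence + ".")]


def augment(raw_text: str, synthesize: Callable[[str], List[QAPair]]) -> str:
    """Append synthesized instruction-response pairs to one raw text."""
    lines = [raw_text.strip()]
    for instruction, response in synthesize(raw_text):
        # Assumed template; the layout of the pairs is illustrative only.
        lines.append(f"Question: {instruction}")
        lines.append(f"Answer: {response}")
    return "\n".join(lines)


def build_corpus(
    raw_texts: List[str], synthesize: Callable[[str], List[QAPair]]
) -> List[str]:
    """Turn a raw corpus into an instruction-augmented corpus."""
    return [augment(text, synthesize) for text in raw_texts]


if __name__ == "__main__":
    doc = (
        "Photosynthesis converts light into chemical energy. "
        "It occurs in chloroplasts."
    )
    print(augment(doc, toy_synthesizer))
```

The augmented strings returned by `build_corpus` would then be fed to an ordinary pre-training pipeline in place of the raw texts.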

Experimental Results

The reported experiments demonstrate the effectiveness of Instruction Pre-Training. When pre-training from scratch, models trained with Instruction Pre-Training consistently outperformed those trained with Vanilla Pre-Training. For instance, a 500M parameter model pre-trained on 100B tokens using Instruction Pre-Training matched the performance of a 1B parameter model pre-trained on 300B tokens using Vanilla Pre-Training.
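To put the 500M versus 1B comparison in perspective, the sketch below estimates training compute for both runs. It relies on an assumption that is not part of the article: the common rule of thumb that training a dense transformer costs roughly 6 FLOPs per parameter per token. It also ignores the cost of running the instruction synthesizer, so the result is a rough illustration rather than a reported figure.

```python
def approx_training_flops(n_params: float, n_tokens: float) -> float:
    """Rough training cost using the ~6 * N * D rule of thumb (an assumption)."""
    return 6.0 * n_params * n_tokens


# Model sizes and token counts as stated in the text above.
small_run = approx_training_flops(500e6, 100e9)  # 500M params, 100B tokens
large_run = approx_training_flops(1e9, 300e9)    # 1B params, 300B tokens

ratio = large_run / small_run

if __name__ == "__main__":
    print(f"500M / 100B tokens: {small_run:.2e} FLOPs")
    print(f"1B / 300B tokens:   {large_run:.2e} FLOPs")
    print(f"Compute ratio:      {ratio:.1f}x")
```

Under this approximation the larger run uses about six times the pre-training compute, since it has twice the parameters and three times the tokens.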

Benefits of Instruction Pre-Training

1. Enhanced Generalization: Instruction Pre-Training improves the generalization capabilities of language models by exposing them to a variety of tasks framed as natural language instructions.

2. Efficiency in Pre-Training: The instruction synthesizer, built on open-source models with approximately 7 billion parameters, is cost-effective and scalable.

3. Improved Task Performance: Models pre-trained with instruction-augmented data show superior performance on various benchmarks in both zero-shot and few-shot settings.

Variants of InstructPT

The Instruction Pre-Training framework has been adapted to create several variants, each tailored to specific domains and tasks.

Conclusion

By integrating supervised multitask learning into the pre-training process, Instruction Pre-Training enhances the base performance of language models and improves their ability to generalize across tasks. The results reported for Llama3-8B and other variants underscore the method's potential to drive future work in artificial intelligence and natural language processing.
