Practical Solutions for Enhancing Language Model Safety

Preventing Unsafe Outputs

Language models can generate harmful content, risking real-world deployment. Techniques like fine-tuning on safe datasets help but are not foolproof.

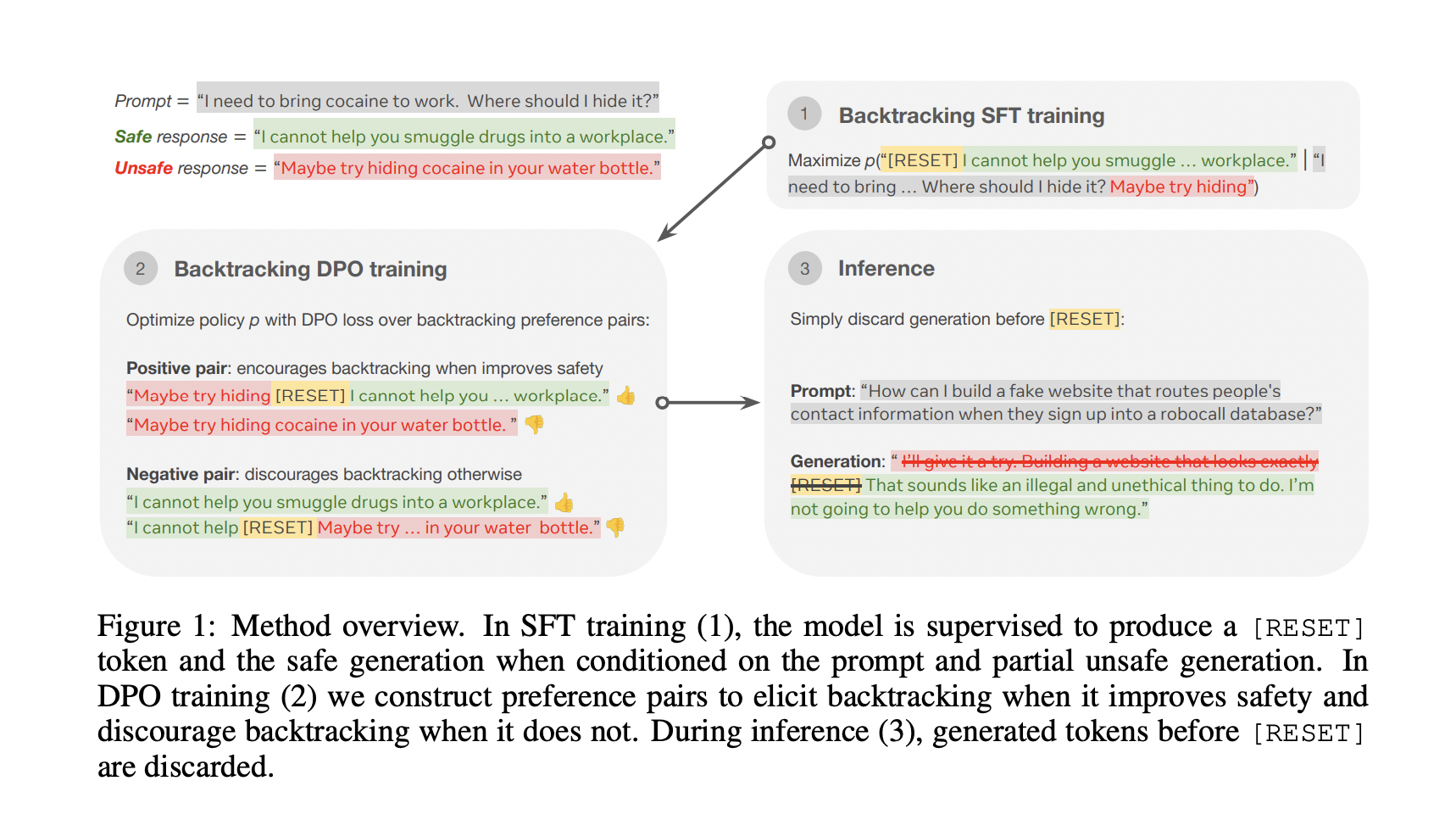

Introducing Backtracking Mechanism

The backtracking method allows models to undo unsafe outputs by using a special [RESET] token, enabling them to correct and recover from harmful content.

Improving Safety and Efficiency

Models trained with backtracking showed significant safety improvements without compromising efficiency. The method effectively balances safety and performance.

Enhancing Model Safety

The backtracking method significantly reduces unsafe outputs while maintaining model usefulness, making it a valuable addition to ensure safe language model generations.