Introduction to MobileLLM

Large language models (LLMs) have substantially advanced areas such as conversational AI and content creation. Most of them, however, run in the cloud, which adds network latency, raises serving costs, and increases environmental impact. Models like GPT-4 need significant computing power, which makes them expensive and energy-intensive to run. Mobile devices, with their limited memory and processing capabilities, cannot host models of that size. This creates a need for smaller, more efficient models that work well on mobile platforms.

What is MobileLLM?

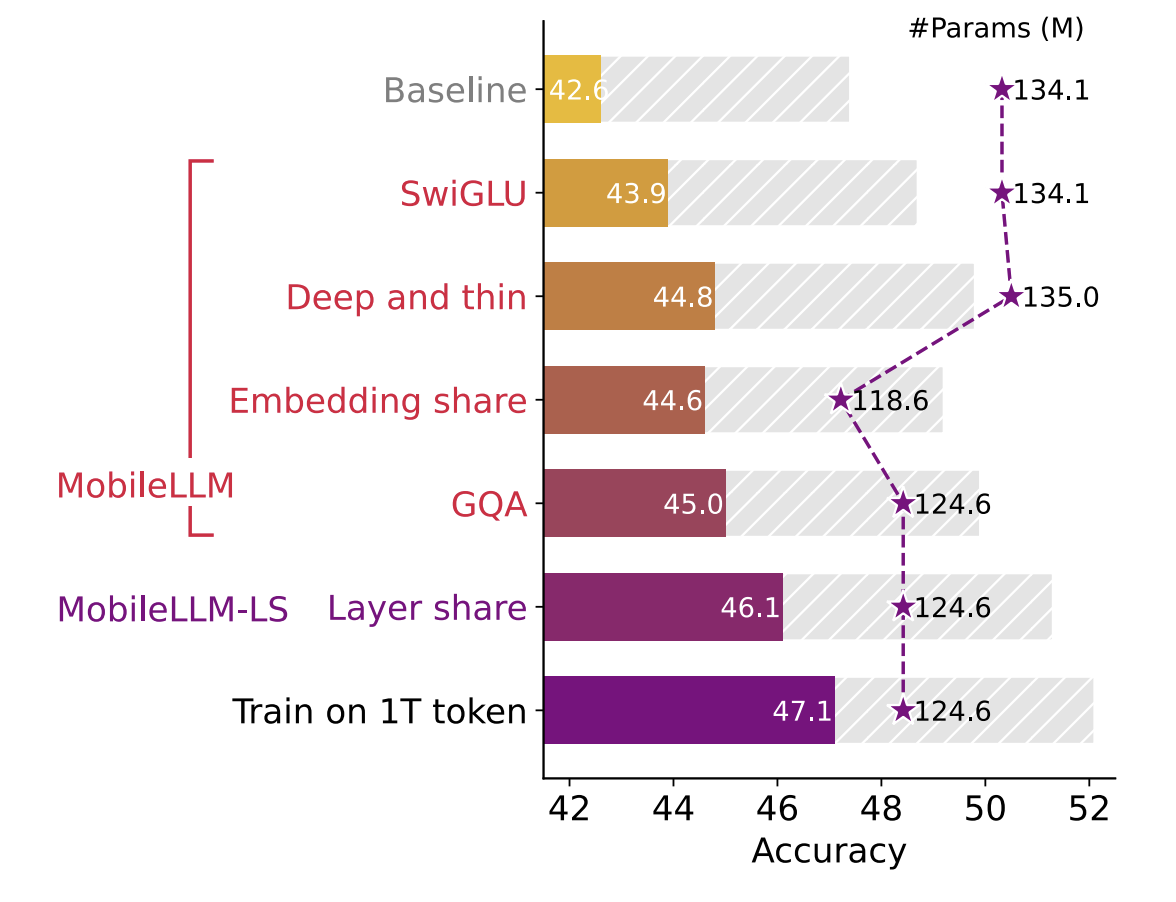

Meta has introduced MobileLLM, a family of language models ranging from 125M to 1B parameters. The models are designed to run efficiently on mobile devices and offer strong performance without heavy reliance on cloud resources, which means faster response times and lower operational costs. Instead of making each layer wider, MobileLLM uses a deep-and-thin architecture: more layers with a smaller hidden size. This lets it perform well even with fewer parameters.
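To make the depth-over-width trade-off concrete, here is a minimal sketch that estimates how many weights a stack of transformer blocks holds. The formula (four attention projections plus a three-matrix gated feed-forward layer per block) is a common approximation, and both configurations are hypothetical values chosen for illustration, not settings confirmed by the source.

```python
def block_params(d_model: int, d_ff: int) -> int:
    """Approximate weight count of one transformer block (biases and norms ignored)."""
    attention = 4 * d_model * d_model   # query, key, value and output projections
    feed_forward = 3 * d_model * d_ff   # gated feed-forward: gate, up and down projections
    return attention + feed_forward


def stack_params(n_layers: int, d_model: int, d_ff: int) -> int:
    """Weights in a stack of identical blocks (embeddings excluded)."""
    return n_layers * block_params(d_model, d_ff)


# Hypothetical configurations chosen to have a similar weight budget.
deep_thin = stack_params(n_layers=30, d_model=576, d_ff=1536)
wide_shallow = stack_params(n_layers=12, d_model=896, d_ff=2432)

print(f"deep and thin   : 30 layers, {deep_thin / 1e6:.1f}M block weights")
print(f"wide and shallow: 12 layers, {wide_shallow / 1e6:.1f}M block weights")
```

Both stacks hold roughly the same number of weights, but the first spends them on 30 narrow layers and the second on 12 wide ones. MobileLLM's design favors the first kind of allocation.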

Key Features of MobileLLM

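- Embedding Sharing: The input embedding and output layers reuse the same weights, which removes a large duplicate matrix and makes the model smaller and more efficient.

- Grouped Query Attention (GQA): Several query heads share each key-value head, which reduces attention parameters and memory use compared with giving every query head its own keys and values.

- Immediate Block-Wise Weight Sharing: Adjacent blocks share the same weights, so a block's weights can be reused while they are still in fast memory. This adds depth without adding parameters and minimizes weight movement between model blocks, keeping latency overhead small.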
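The three techniques above can be sketched with simple weight-count arithmetic. This is an illustrative sketch, not Meta's implementation: the function names are hypothetical, and the vocabulary size, hidden size, and head counts are assumed values picked only to make the numbers concrete.

```python
def embedding_params(vocab_size: int, d_model: int, shared: bool) -> int:
    """Input embedding plus output projection; a single table when shared."""
    table = vocab_size * d_model
    return table if shared else 2 * table


def attention_params(d_model: int, n_heads: int, n_kv_heads: int) -> int:
    """Query/output projections stay full-size; key/value shrink with fewer KV heads."""
    head_dim = d_model // n_heads
    q_and_out = 2 * d_model * d_model
    k_and_v = 2 * d_model * (n_kv_heads * head_dim)
    return q_and_out + k_and_v


def execution_order(n_unique_blocks: int, repeats: int = 2) -> list[int]:
    """Immediate block-wise sharing: each block's weights run `repeats` times in a row."""
    return [i for i in range(n_unique_blocks) for _ in range(repeats)]


# Assumed (hypothetical) configuration, for illustration only.
VOCAB, D_MODEL, N_HEADS = 32_000, 576, 9

saved_by_sharing = (embedding_params(VOCAB, D_MODEL, shared=False)
                    - embedding_params(VOCAB, D_MODEL, shared=True))
mha = attention_params(D_MODEL, N_HEADS, n_kv_heads=9)  # every query head has its own K/V
gqa = attention_params(D_MODEL, N_HEADS, n_kv_heads=3)  # three query heads per K/V head
order = execution_order(3)

print(f"embedding sharing saves {saved_by_sharing:,} weights")
print(f"attention weights per block: {mha:,} (full) vs {gqa:,} (grouped)")
print(f"block execution order with sharing: {order}")
```

With these assumed sizes, sharing the embedding removes one full vocabulary-sized matrix, grouped attention trims the key and value projections to a third of their size, and block-wise sharing runs six layers of computation from three sets of weights.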

Performance and Applications

MobileLLM outperforms previous models of similar size. The 125M and 350M models improve on the earlier state-of-the-art models of the same size by 2.7% and 4.3% in accuracy, respectively, on zero-shot commonsense reasoning tasks. In API calling tasks, MobileLLM-350M came close to the correctness of the much larger LLaMA-v2 7B, demonstrating its effectiveness despite its smaller size. This makes MobileLLM well suited to on-device applications such as chat and API calling, where running locally can reduce latency and energy use.

Conclusion

Meta’s MobileLLM offers a practical alternative to cloud-hosted large-scale LLMs by focusing on efficiency and performance at small scale. With techniques like depth prioritization and weight sharing, MobileLLM brings capable language processing to mobile devices while keeping costs and energy consumption low.

Get Involved

Explore the full release and research on Hugging Face.
