Improving Inference in Large Language Models (LLMs)

Running inference with large language models is costly: it demands substantial compute and memory, which makes deployment expensive and energy-intensive. Common acceleration techniques such as sparsity, quantization, and pruning often require specialized hardware or reduce model accuracy, which limits their practical use.

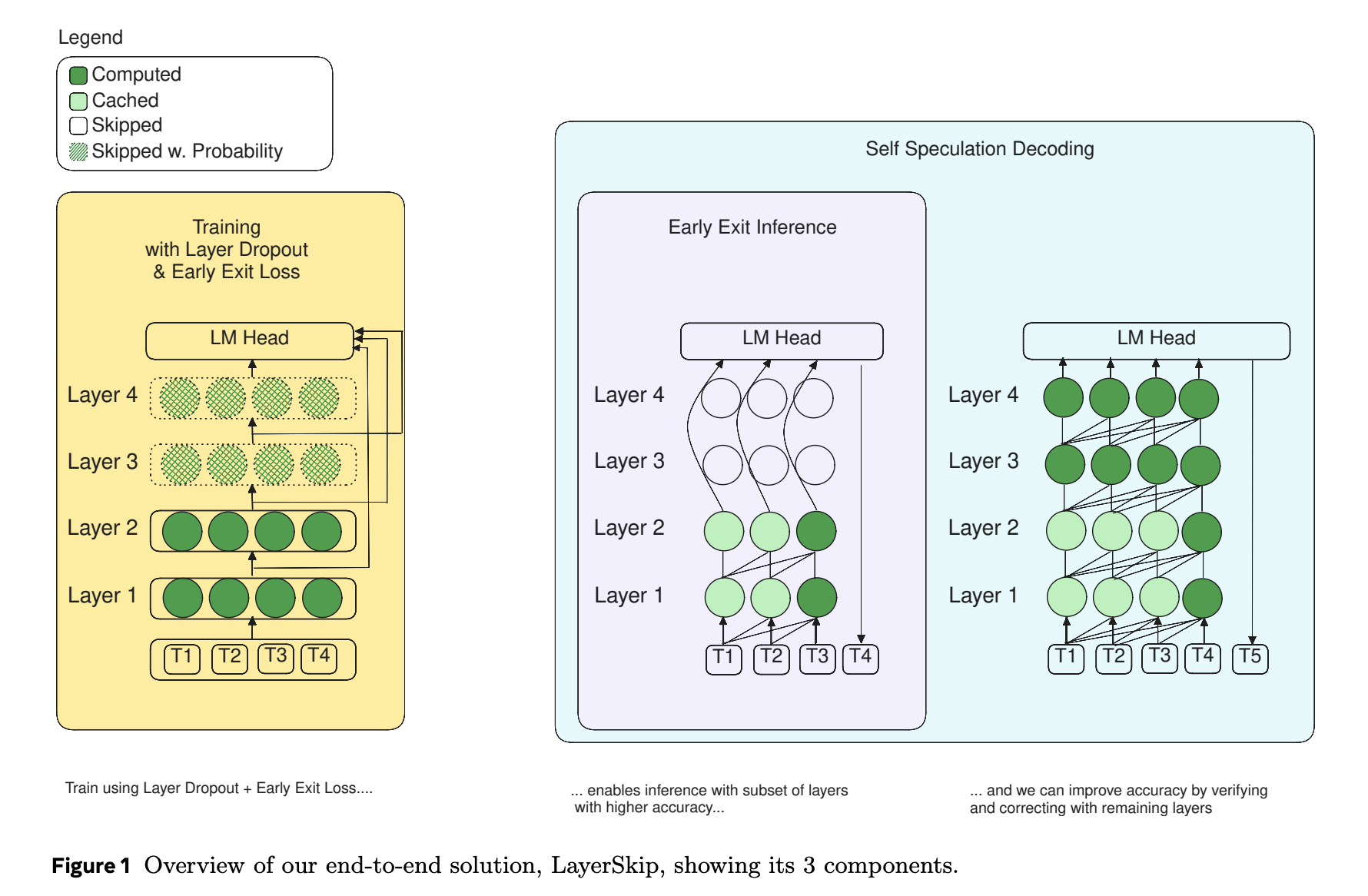

Introducing LayerSkip

Researchers from Meta and several universities have developed LayerSkip, an end-to-end approach to faster LLM inference. It combines a dedicated training recipe with early-exit inference and self-speculative decoding.

Key Features of LayerSkip

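- Training Recipe: Applies layer dropout, with low dropout rates for earlier layers and higher rates for later ones, together with an early exit loss in which all layers share the same exit (the model's LM head). This teaches earlier layers to make usable predictions, effectively creating sub-models of different depths inside one model.

- Inference Strategy: Exits at an earlier layer to cut compute; the training recipe is what improves the accuracy of these early exits.

- Self-Speculative Decoding: Drafts tokens by exiting at an early layer, then verifies and corrects them with the model's remaining layers.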
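The training recipe can be illustrated with a minimal Python sketch. It is not LayerSkip's implementation: the linear ramp of dropout rates, the helper names (`layer_dropout_rates`, `sample_skipped_layers`, `early_exit_loss`), and the loss weights are all hypothetical. It only shows the two ideas from the list above: skip probabilities that grow with depth, and a loss that combines the cross-entropy obtained at every layer through one shared head.

```python
import random
from typing import List, Sequence


def layer_dropout_rates(num_layers: int, p_max: float) -> List[float]:
    """Per-layer skip probabilities that grow with depth.

    Assumption: a linear ramp from 0.0 at the first layer to p_max at the
    last layer. This only illustrates "low rates early, high rates late";
    it is not the schedule used by LayerSkip.
    """
    if num_layers == 1:
        return [0.0]
    return [p_max * layer / (num_layers - 1) for layer in range(num_layers)]


def sample_skipped_layers(rates: Sequence[float], rng: random.Random) -> List[bool]:
    """Draw one layer-dropout mask for a training step (True = skip the layer)."""
    return [rng.random() < p for p in rates]


def early_exit_loss(per_layer_ce: Sequence[float], weights: Sequence[float]) -> float:
    """Weighted average of the cross-entropy losses measured at every layer.

    per_layer_ce[l] is assumed to come from feeding layer l's output through
    the same shared LM head; the weights are a hypothetical choice.
    """
    total = sum(weights)
    return sum(w * ce for w, ce in zip(weights, per_layer_ce)) / total


# Example: a 4-layer model with at most a 30% skip rate at the last layer.
rates = layer_dropout_rates(num_layers=4, p_max=0.3)
mask = sample_skipped_layers(rates, random.Random(0))
loss = early_exit_loss([2.0, 1.5, 1.0, 0.5], weights=[1.0, 1.0, 1.0, 1.0])  # 1.25
```

The paper defines its own schedules for both the dropout rates and the per-layer loss weights, including how they change over the course of training; this sketch does not reproduce them.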

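Because the early-exit path and the full model share the same weights and the same exit head, LayerSkip needs no additional exit heads or separate draft model, yet the model can skip its later layers and still produce high-quality output. The code is open-source and available on GitHub.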
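The draft-then-verify loop behind self-speculative decoding can be sketched with a toy model in Python. Everything here is hypothetical: the integer "hidden state", the four toy layers in `LAYERS`, and the functions `predict`, `greedy_generate`, and `self_speculative_generate` stand in for a real transformer. Drafting runs only the first `exit_layer` layers followed by the shared head; verification runs the full depth and keeps the longest agreeing prefix plus one corrected (or bonus) token, so the output matches plain greedy decoding with the full model.

```python
from typing import Callable, List, Sequence

# Hypothetical toy "model": an integer hidden state passed through simple layers.
Layer = Callable[[int], int]
VOCAB = 10

LAYERS: List[Layer] = [
    lambda h: (3 * h + 1) % 97,
    lambda h: (h + 7) % 97,
    lambda h: (5 * h) % 97,
    lambda h: (h * h + 2) % 97,
]


def embed(context: Sequence[int]) -> int:
    # Toy embedding: fold the whole token context into one integer state.
    return sum((i + 1) * t for i, t in enumerate(context)) % 97


def shared_head(h: int) -> int:
    # A single output head used by every exit point, early or final.
    return h % VOCAB


def predict(context: Sequence[int], layers: Sequence[Layer], depth: int) -> int:
    """Greedy next token after running only the first `depth` layers."""
    h = embed(context)
    for layer in layers[:depth]:
        h = layer(h)
    return shared_head(h)


def greedy_generate(prompt: Sequence[int], layers: Sequence[Layer], max_new: int) -> List[int]:
    """Baseline: ordinary greedy decoding with the full model."""
    out = list(prompt)
    for _ in range(max_new):
        out.append(predict(out, layers, len(layers)))
    return out


def self_speculative_generate(
    prompt: Sequence[int],
    layers: Sequence[Layer],
    exit_layer: int,
    draft_len: int,
    max_new: int,
) -> List[int]:
    out = list(prompt)
    full_depth = len(layers)
    while len(out) - len(prompt) < max_new:
        # 1) Draft: exit after `exit_layer` layers to propose `draft_len` tokens.
        draft: List[int] = []
        for _ in range(draft_len):
            draft.append(predict(out + draft, layers, exit_layer))
        # 2) Verify: the full-depth prediction decides each position in turn.
        accepted: List[int] = []
        for tok in draft:
            target = predict(out + accepted, layers, full_depth)
            accepted.append(target)  # same as `tok` whenever the draft was right
            if target != tok:
                break  # first mismatch: keep the correction, discard later drafts
        else:
            # All drafts accepted: the full model contributes one extra token.
            accepted.append(predict(out + accepted, layers, full_depth))
        out.extend(accepted)
    return out[: len(prompt) + max_new]


prompt = [1, 2, 3]
fast = self_speculative_generate(prompt, LAYERS, exit_layer=2, draft_len=3, max_new=8)
assert fast == greedy_generate(prompt, LAYERS, max_new=8)
```

For readability the toy verifies token by token and recomputes everything. In LayerSkip, the drafted tokens are checked by the remaining layers in one pass that reuses computation from the draft stage, such as the early layers' key-value cache; the speedup then depends on how often the early-exit drafts are accepted.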

Performance Improvements

LayerSkip has shown substantial speedups across various tasks and model sizes:

- Up to 2.16× speedup on CNN/DM summarization.

- Up to 1.82× speedup on coding tasks.

- Up to 2.0× speedup on TOPv2 semantic parsing.

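Beyond the speedup, self-speculative decoding has a smaller memory footprint than speculative decoding methods that rely on a separate draft model, because the draft and verification stages run on the same model and share its compute and activations.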

Why LayerSkip Matters

LayerSkip offers a practical way to make LLM inference more efficient, cutting compute while keeping memory overhead low. By combining layer dropout, early exit loss, and self-speculative decoding, it makes large models cheaper to serve and more accessible for AI applications.
