Introduction to EvalPlanner

The rapid growth of Large Language Models (LLMs) has improved their ability to generate detailed responses, but evaluating those responses fairly and efficiently remains a challenge. Human evaluation is costly and can be inconsistent. The LLM-as-a-Judge approach addresses this by using LLMs themselves to evaluate model outputs. However, LLM judges still face two main issues: a shortage of human-annotated reasoning traces to train on, and rigid, hand-designed evaluation procedures that do not adapt to different tasks. To address these problems, Meta AI developed EvalPlanner, which improves LLM judges’ reasoning and decision-making by separating evaluation planning from plan execution.

What is EvalPlanner?

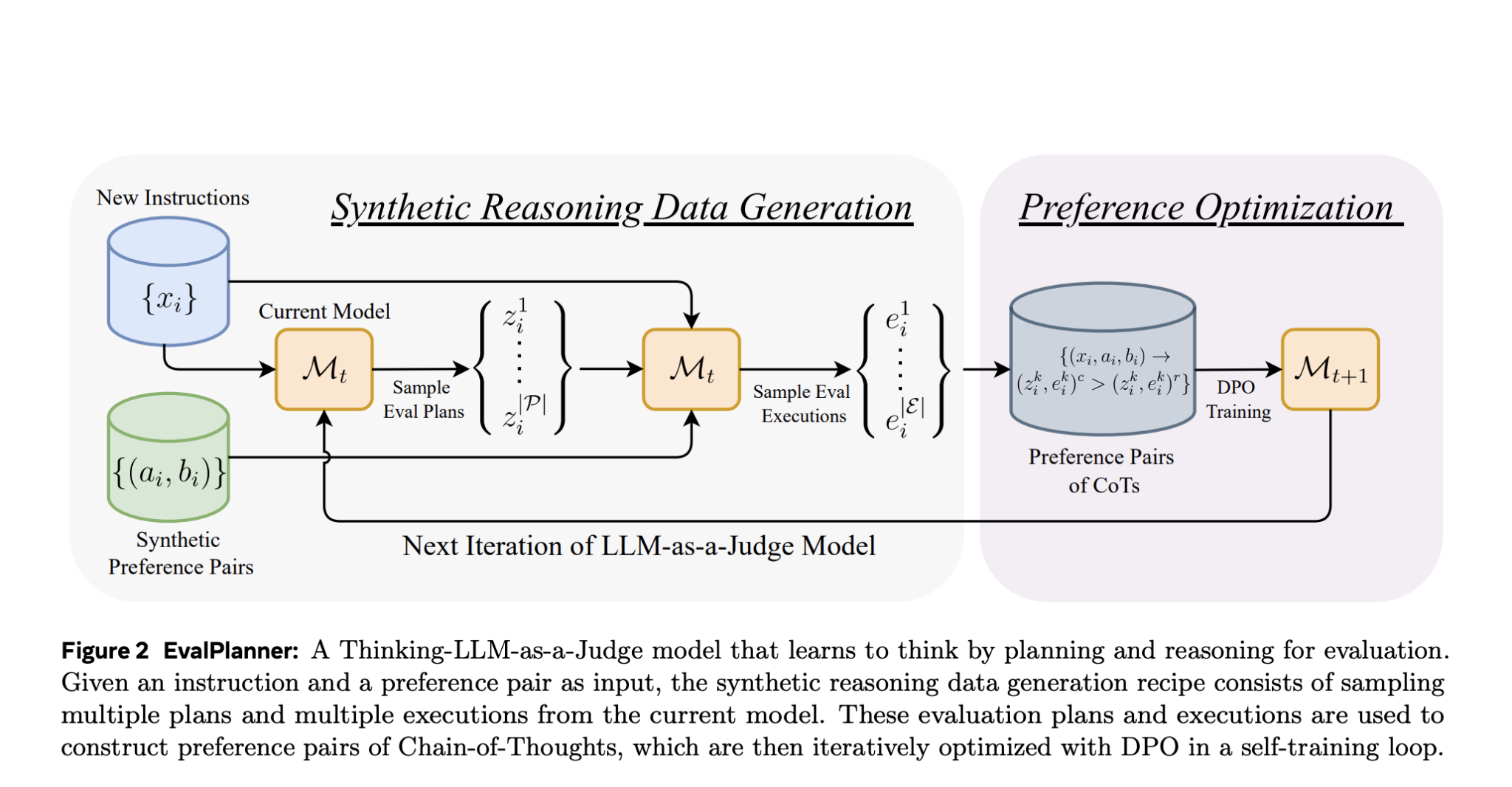

EvalPlanner is a preference optimization algorithm for training LLM-as-a-Judge models. It structures each evaluation as a three-step process:

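- Plan Creation: Generate an unconstrained evaluation plan tailored to the instruction at hand.

- Plan Execution: Follow the plan step by step to reason about and compare the candidate responses.

- Final Judgment: Deliver a verdict based on the executed plan.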
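The three steps above can be sketched in Python as a single pairwise-judging function. This is a minimal illustration under stated assumptions, not Meta AI's implementation: the `generate` callable stands in for any LLM, and the prompt wording, the `[[A]]`/`[[B]]` verdict format, and the names `judge`, `parse_verdict`, and `Judgment` are all hypothetical. In this sketch the plan is conditioned only on the instruction, while the execution step sees both responses.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical LLM interface: takes a prompt, returns generated text.
Generate = Callable[[str], str]


@dataclass
class Judgment:
    plan: str       # step 1 output
    execution: str  # step 2 output
    verdict: str    # step 3 output: "A" or "B"


def parse_verdict(text: str) -> str:
    """Return the last [[A]] / [[B]] marker in the text (assumed format)."""
    a, b = text.rfind("[[A]]"), text.rfind("[[B]]")
    if a == -1 and b == -1:
        raise ValueError("no verdict marker found")
    return "A" if a > b else "B"


def judge(generate: Generate, instruction: str, resp_a: str, resp_b: str) -> Judgment:
    # Step 1: plan creation, conditioned only on the instruction.
    plan = generate(f"Write an evaluation plan for this instruction:\n{instruction}")
    # Step 2: plan execution, applying the plan to both candidate responses.
    execution = generate(
        f"Evaluation plan:\n{plan}\n\nInstruction:\n{instruction}\n\n"
        f"Response A:\n{resp_a}\n\nResponse B:\n{resp_b}\n\n"
        "Follow the plan step by step, then end with [[A]] or [[B]]."
    )
    # Step 3: final judgment, read from the end of the execution.
    return Judgment(plan, execution, parse_verdict(execution))


# Usage with a stub in place of a real model.
def stub_llm(prompt: str) -> str:
    if prompt.startswith("Write an evaluation plan"):
        return "1. Check the arithmetic. 2. Prefer the correct answer."
    return "Response A is correct; Response B is not. [[A]]"


result = judge(stub_llm, "What is 2 + 2?", "4", "5")
print(result.verdict)  # A
```

Keeping the plan, execution, and verdict as separate fields mirrors the transparency goal: each stage can be inspected on its own when a judgment looks wrong.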

Unlike earlier approaches that rely on fixed, hand-designed evaluation criteria, EvalPlanner does not constrain the form of its evaluation plans, which makes it adaptable to a wide range of tasks. It improves iteratively by training on synthetically generated evaluation examples, which makes its evaluations more reliable and scalable.
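The self-training idea can be illustrated by how preference pairs might be assembled from synthetic data. This sketch reflects an assumed general recipe, not the paper's exact procedure: each synthetic example comes with a known better response (`gold`), the judge samples several plan-and-execution "thoughts", and thoughts that reach the correct verdict are paired as chosen against those that do not. The function name, the `Thought` tuple layout, and the `n_samples`/`max_pairs` defaults are hypothetical.

```python
import random
from itertools import cycle
from typing import Callable, List, Tuple

# A sampled thought: (plan, execution, verdict). Layout is hypothetical.
Thought = Tuple[str, str, str]


def build_preference_pairs(
    sample_thought: Callable[[], Thought],
    gold: str,
    n_samples: int = 8,
    max_pairs: int = 4,
    seed: int = 0,
) -> List[Tuple[Thought, Thought]]:
    """Pair correct-verdict thoughts (chosen) with incorrect ones (rejected)."""
    thoughts = [sample_thought() for _ in range(n_samples)]
    correct = [t for t in thoughts if t[2] == gold]
    incorrect = [t for t in thoughts if t[2] != gold]
    # Every chosen/rejected combination; empty if either side is empty.
    pairs = [(c, r) for c in correct for r in incorrect]
    random.Random(seed).shuffle(pairs)
    return pairs[:max_pairs]


# Usage: a deterministic stand-in for sampling from the judge model.
samples = cycle([("p1", "e1", "A"), ("p2", "e2", "B"), ("p3", "e3", "A"), ("p4", "e4", "B")])
pairs = build_preference_pairs(lambda: next(samples), gold="A", n_samples=4)
print(len(pairs))  # 4
```

In this scheme, examples where every sampled thought is right (or every one is wrong) yield no pairs, so they contribute no training signal.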

Key Features of EvalPlanner:

- Structured Reasoning: Separates planning from execution, making the basis for each judgment clearer.

- Self-Training Mechanism: Uses Direct Preference Optimization (DPO) on synthetic preference pairs to refine its evaluation plans and their execution.

- Bias Reduction: Because its evaluation plans are unconstrained rather than fixed templates, its judgments are more accurate and consistent.

- Scalability: Trains on synthetic rather than human-annotated data, so it can be extended to new tasks efficiently.

- Transparency: Explicit plans and reasoning steps make evaluations easier to understand and debug.
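The DPO objective mentioned above can be written out for a single preference pair. This is the standard DPO loss, shown as a minimal sketch; how EvalPlanner sets its hyperparameters is not covered here, and the function names and the `beta=0.1` default are illustrative assumptions. The inputs are summed log-probabilities of the chosen and rejected thoughts under the model being trained and under a frozen reference model, and the loss is the negative log-sigmoid of a scaled margin between them.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def dpo_loss(
    policy_chosen: float,
    policy_rejected: float,
    ref_chosen: float,
    ref_rejected: float,
    beta: float = 0.1,
) -> float:
    """Standard DPO loss for one pair; inputs are summed log-probabilities.

    loss = -log sigmoid(beta * [(pi_c - ref_c) - (pi_r - ref_r)])
    """
    margin = beta * ((policy_chosen - ref_chosen) - (policy_rejected - ref_rejected))
    # -log(sigmoid(m)) == softplus(-m), which avoids overflow for large |m|.
    return softplus(-margin)


# Equal log-ratios give log(2); favoring the chosen thought lowers the loss.
print(round(dpo_loss(-3.0, -3.0, -3.0, -3.0), 4))  # 0.6931
print(round(dpo_loss(-1.0, -5.0, -3.0, -3.0), 4))  # 0.513
```

In a real training loop these would be batched tensor operations, and the self-training described above would repeat pair construction and DPO updates over several rounds; both are outside this sketch.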

Performance Insights

Meta AI evaluated EvalPlanner on multiple benchmarks and reported strong results:

- High Accuracy: Scored 93.9 on RewardBench while training on fewer preference pairs than competing approaches, all of them synthetically generated.

- Robustness: Achieved 8% higher accuracy than previous models on evaluations with nuanced criteria.

- Constraint Management: Outperformed other models by 13% when evaluating responses against complex, multi-level constraints.

- Generalization: Matched the performance of larger models while using significantly fewer training examples.

Conclusion: Enhancing AI Evaluation

EvalPlanner marks a significant step forward for AI-based evaluation. Its combination of preference optimization and structured, plan-based evaluation enables less biased and more efficient assessment of AI-generated content. As AI technology advances, EvalPlanner could improve the reliability and fairness of AI evaluations, supporting better governance and accountability in AI systems. Future research could extend it to areas such as reinforcement learning and real-world AI audits.

Explore More about EvalPlanner!

For further details, check out the research paper.