Understanding Reinforcement Learning (RL)

Reinforcement learning (RL) trains agents to make decisions that maximize cumulative reward over time. It is useful in fields such as robotics, gaming, and automation, where agents learn the best actions by interacting with their environment.
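To make "maximizing rewards over time" concrete, here is a minimal sketch of the discounted return, the quantity most RL agents try to maximize. The function name `discounted_return` and the discount value are illustrative assumptions, not taken from the MR.Q paper.

```python
def discounted_return(rewards, gamma=0.99):
    """Sum of rewards where each later reward is discounted by gamma per step."""
    total = 0.0
    # Walk backwards so each step applies G_t = r_t + gamma * G_{t+1}.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# Hypothetical three-step episode: 1.0 + 0.5 * 0.0 + 0.25 * 2.0
episode_rewards = [1.0, 0.0, 2.0]
print(discounted_return(episode_rewards, gamma=0.5))  # prints 1.5
```

A discount below 1 makes the agent prefer rewards that arrive sooner, which is why the same reward counts for less the later it appears in the episode.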

Types of RL Approaches

There are two main types of RL methods:

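- Model-Free: These methods learn a policy or value function directly from experience, without modeling the environment. They are simpler but need a lot of training data.

- Model-Based: These methods learn or use a model of the environment's dynamics to plan or simulate outcomes. They are more structured but require significant computational power.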
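The difference between the two families can be sketched on a toy problem. The code below is an illustration under assumptions: `q_learning_update` is a standard tabular Q-learning step (model-free), and `plan_one_step` is a hypothetical one-step lookahead over a hand-written dynamics table (model-based). Neither is the method used by any algorithm named in this article.

```python
from collections import defaultdict


def q_learning_update(q, state, action, reward, next_state, actions,
                      alpha=0.1, gamma=0.99):
    """Model-free: update Q from one observed transition, with no dynamics model."""
    best_next = max(q[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


def plan_one_step(model, values, state, actions, gamma=0.99):
    """Model-based: query a dynamics model and pick the best predicted action."""
    def score(action):
        next_state, reward = model[(state, action)]
        return reward + gamma * values[next_state]
    return max(actions, key=score)


actions = ["left", "right"]

# Model-free: learn from a single sampled transition.
q = defaultdict(float)
print(q_learning_update(q, "s0", "right", 1.0, "s1", actions))  # prints 0.1

# Model-based: the (assumed) model maps (state, action) to (next_state, reward).
model = {("s0", "left"): ("s0", 0.0), ("s0", "right"): ("s1", 1.0)}
values = {"s0": 0.0, "s1": 0.0}
print(plan_one_step(model, values, "s0", actions))  # prints right
```

The model-free update needs many such transitions before its estimates become useful, while the planner can act sensibly right away but has to evaluate the model for every candidate action.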

Researchers are working on combining these methods to create more flexible RL systems that work well in different scenarios.

Challenges in RL

A major challenge is the lack of a one-size-fits-all algorithm that performs well across environments without extensive tuning. Model-based methods generally perform better across different tasks but are more complex and slower to run. In contrast, model-free methods are easier to use but may not transfer efficiently to new tasks without retuning.

Emerging Solutions in RL

New RL methods have been developed, each with its own benefits and drawbacks. For example:

- Model-Based Solutions: DreamerV3 and TD-MPC2 show good results but depend on complex planning and simulations.

- Model-Free Alternatives: TD3 and PPO are less computationally demanding but need task-specific tuning.

This highlights the need for an RL algorithm that is both adaptable and efficient for various applications.

Introducing MR.Q

The research team from Meta FAIR has created MR.Q, a model-free RL algorithm that uses model-based techniques to enhance learning efficiency. MR.Q stands out because:

- It learns effectively across different benchmarks with minimal adjustments.

- It gains the structured learning of model-based methods without their heavy computational costs.

How MR.Q Works

MR.Q maps state-action pairs to embeddings that relate approximately linearly to the value function. It uses an encoder to extract important features, which improves learning stability. It also employs prioritized sampling and reward scaling to boost training efficiency.
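The three ideas in this paragraph can be sketched in a few lines of plain Python. This is a simplified illustration, not MR.Q's implementation: `encode` is a hypothetical hand-written stand-in for a learned encoder, the sampling is basic priority-proportional sampling, and dividing rewards by their mean absolute value is one assumed way to do reward scaling.

```python
import random


def encode(state, action):
    """Hypothetical stand-in for a learned encoder: a state-action embedding."""
    return [state, action, state * action, 1.0]


def q_value(embedding, weights):
    """Value estimate that is linear in the embedding: Q(s, a) = w . z_sa."""
    return sum(w * z for w, z in zip(weights, embedding))


def sample_prioritized(priorities, batch_size, rng):
    """Draw transition indices with probability proportional to priority."""
    return rng.choices(range(len(priorities)), weights=priorities, k=batch_size)


def scale_rewards(rewards):
    """Divide rewards by their mean absolute value (an assumed scaling rule)."""
    scale = sum(abs(r) for r in rewards) / len(rewards)
    return [r / max(scale, 1e-8) for r in rewards]


weights = [0.5, -1.0, 2.0, 0.25]           # illustrative, not learned
print(q_value(encode(2.0, 3.0), weights))  # prints 10.25

rng = random.Random(0)
print(sample_prioritized([0.0, 0.0, 1.0], 4, rng))  # prints [2, 2, 2, 2]

print(scale_rewards([10.0, -30.0, 20.0]))  # prints [0.5, -1.5, 1.0]
```

Keeping the value head linear means the encoder has to do the hard work of shaping features, while scaled rewards keep learning targets in a similar range across benchmarks whose raw reward magnitudes differ widely.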

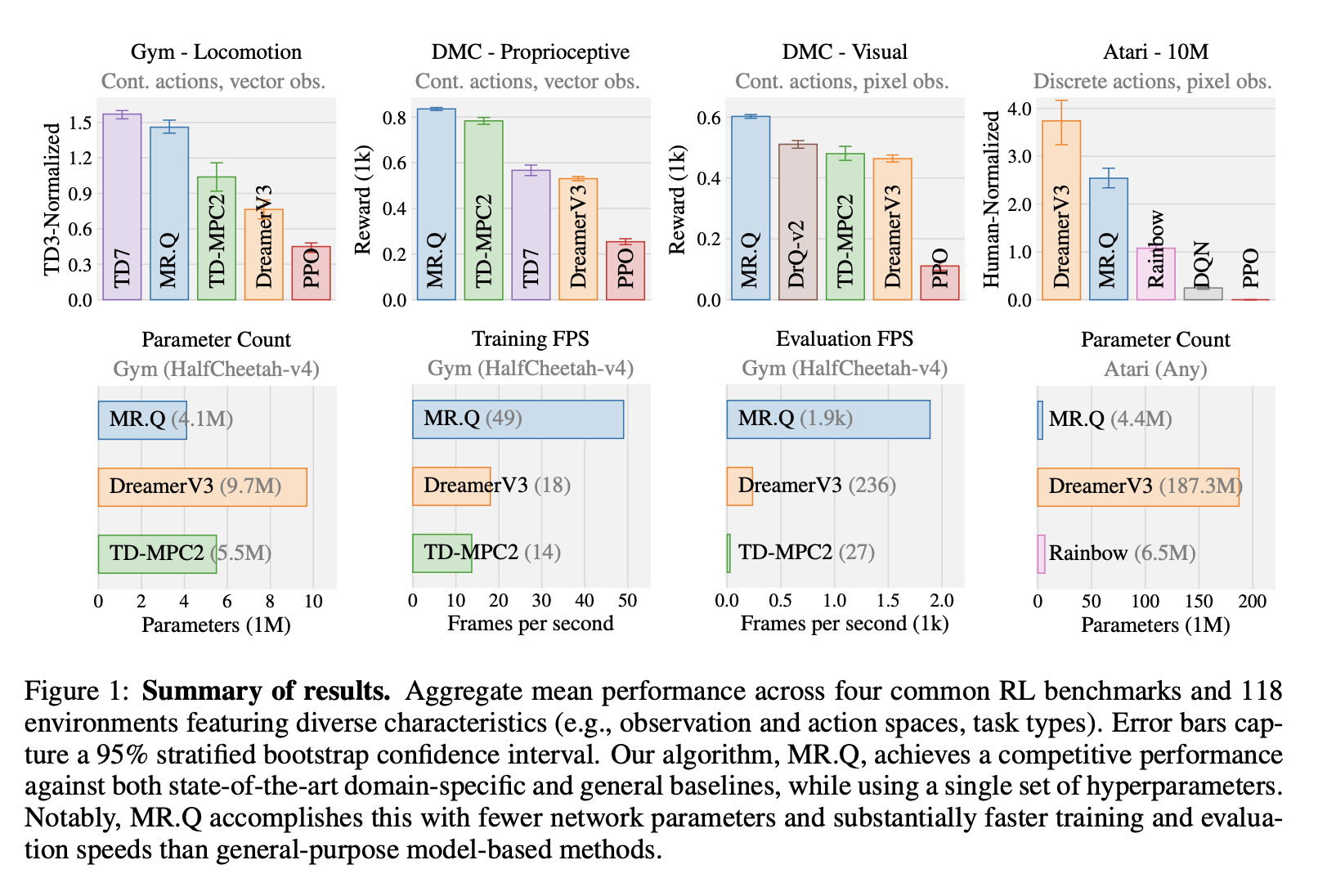

Performance and Efficiency

Tests on various RL benchmarks, including Gym locomotion tasks and Atari, show that MR.Q performs well with a single set of hyperparameters. It outperforms traditional model-free methods such as PPO and DQN while remaining efficient in resource usage. MR.Q performs particularly well on both discrete-action and continuous-control tasks.

Future Directions

The study emphasizes the advantages of integrating model-based elements into model-free RL algorithms. MR.Q represents progress towards creating a more adaptable RL framework, with future improvements aimed at tackling challenges like complex exploration and non-Markovian environments.
