Theory of Mind (ToM) in AI

Theory of Mind (ToM) is a key aspect of human social intelligence: the ability to infer and predict what others believe, intend, and feel. It underpins effective communication and teamwork, so AI systems that work alongside humans need a comparable capability.

Challenges in AI ToM Development

Despite advances in AI, teaching large language models (LLMs) ToM remains difficult. Many existing evaluations are too simple, which leads to overestimating what these models can do. Current benchmarks often focus on basic scenarios that don't reflect real human reasoning, limiting our understanding of LLM capabilities. Better tools are needed to both measure and improve ToM in AI.

Limitations of Current Evaluation Methods

Earlier ToM evaluations relied on simple false-belief tests from psychology, such as the Sally-Anne test. While useful, these tests are narrow and don't capture the variety of situations people face in real life. Many models perform well on them but struggle with more complex scenarios. Current approaches also focus on tweaking prompts rather than improving the underlying training data, which is not enough for genuine progress.
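To make the overestimation problem concrete, here is a toy sketch (not from the ExploreToM work) of how a shallow shortcut can ace the classic Sally-Anne story yet fail a trivial variant. The event encoding, the `FalseBeliefItem` class, and the `first_location_shortcut` heuristic are all hypothetical, chosen only for illustration; ground-truth answers are written by hand.

```python
from dataclasses import dataclass

# Hypothetical encoding: each event is a tuple (action, actor, *args).
Event = tuple[str, ...]

@dataclass
class FalseBeliefItem:
    events: list[Event]
    agent: str   # whose belief the question asks about
    answer: str  # hand-written ground truth: where the agent will look

def first_location_shortcut(item: FalseBeliefItem) -> str:
    """Hypothetical shallow heuristic: answer with the first place the object was put."""
    for action, _actor, *args in item.events:
        if action == "put":
            return args[1]  # args = [object, location]
    raise ValueError("no 'put' event in story")

classic = FalseBeliefItem(
    events=[("put", "Sally", "marble", "basket"),
            ("leave", "Sally"),
            ("move", "Anne", "marble", "box"),
            ("enter", "Sally")],
    agent="Sally",
    answer="basket",  # Sally missed the move, so she holds a false belief
)

variant = FalseBeliefItem(
    events=[("put", "Sally", "marble", "basket"),
            ("move", "Anne", "marble", "box")],  # Sally stays and watches the move
    agent="Sally",
    answer="box",
)

def accuracy(items: list[FalseBeliefItem]) -> float:
    """Fraction of items the shortcut answers correctly."""
    return sum(first_location_shortcut(it) == it.answer for it in items) / len(items)

print(accuracy([classic]))           # 1.0
print(accuracy([classic, variant]))  # 0.5
```

A benchmark made only of the classic story would rate this heuristic as perfect; a single variant where the character witnesses the move exposes it.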

Introducing ExploreToM

A team from FAIR at Meta, the University of Washington, and Carnegie Mellon University has developed ExploreToM, a framework for generating data to evaluate and train ToM in AI. It uses A* search to produce diverse, challenging datasets that stress-test LLMs' ToM reasoning.
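The sketch below shows the general shape of A* search over story prefixes. It is a toy under stated assumptions, not ExploreToM's implementation: the action set, the `step_cost` function, and `MIN_STEP_COST` are hypothetical. In particular, `step_cost` is a made-up stand-in for a difficulty signal that a real system would presumably derive from querying the model under test. Here we simply assume that moving an object while character A is absent (creating a false belief) is "hard", so A* seeks the cheapest, i.e. hardest, four-step story.

```python
import heapq
from typing import NamedTuple, Optional

class State(NamedTuple):
    a_present: bool  # is character A in the room?
    ball_at: str     # true location of the ball

ACTIONS = ["A leaves", "A enters", "B moves ball to box", "B moves ball to basket"]
MIN_STEP_COST = 0.1  # lower bound on step_cost; keeps the heuristic admissible

def apply(state: State, action: str) -> Optional[State]:
    """Return the successor state, or None if the action is invalid here."""
    if action == "A leaves":
        return State(False, state.ball_at) if state.a_present else None
    if action == "A enters":
        return State(True, state.ball_at) if not state.a_present else None
    target = action.rsplit(" ", 1)[-1]  # "box" or "basket"
    return State(state.a_present, target) if state.ball_at != target else None

def step_cost(state: State, action: str) -> float:
    # Hypothetical difficulty signal: moves made while A is absent are assumed
    # hard for the model (cheap); every other action is assumed easy (costly).
    is_move = action.startswith("B moves")
    return MIN_STEP_COST if (is_move and not state.a_present) else 1.0

def a_star_story(start: State, depth: int) -> Optional[tuple[float, list[str]]]:
    """Find the lowest-cost story of exactly `depth` actions."""
    counter = 0  # tie-breaker so the heap never compares states
    # Entries: (f = g + h, g, tie-breaker, state, story so far)
    frontier = [(depth * MIN_STEP_COST, 0.0, counter, start, ())]
    while frontier:
        _f, g, _, state, story = heapq.heappop(frontier)
        if len(story) == depth:  # goal test on pop guarantees optimality
            return g, list(story)
        for action in ACTIONS:
            nxt = apply(state, action)
            if nxt is None:
                continue
            g2 = g + step_cost(state, action)
            h2 = (depth - len(story) - 1) * MIN_STEP_COST  # never overestimates
            counter += 1
            heapq.heappush(frontier, (g2 + h2, g2, counter, nxt, story + (action,)))
    return None

cost, story = a_star_story(State(True, "basket"), depth=4)
print(round(cost, 2), story)
# 1.3 ['A leaves', 'B moves ball to box', 'B moves ball to basket', 'B moves ball to box']
```

Because the heuristic charges each remaining step the minimum possible cost, it never overestimates, so the first complete story popped from the queue is optimal under this toy cost.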

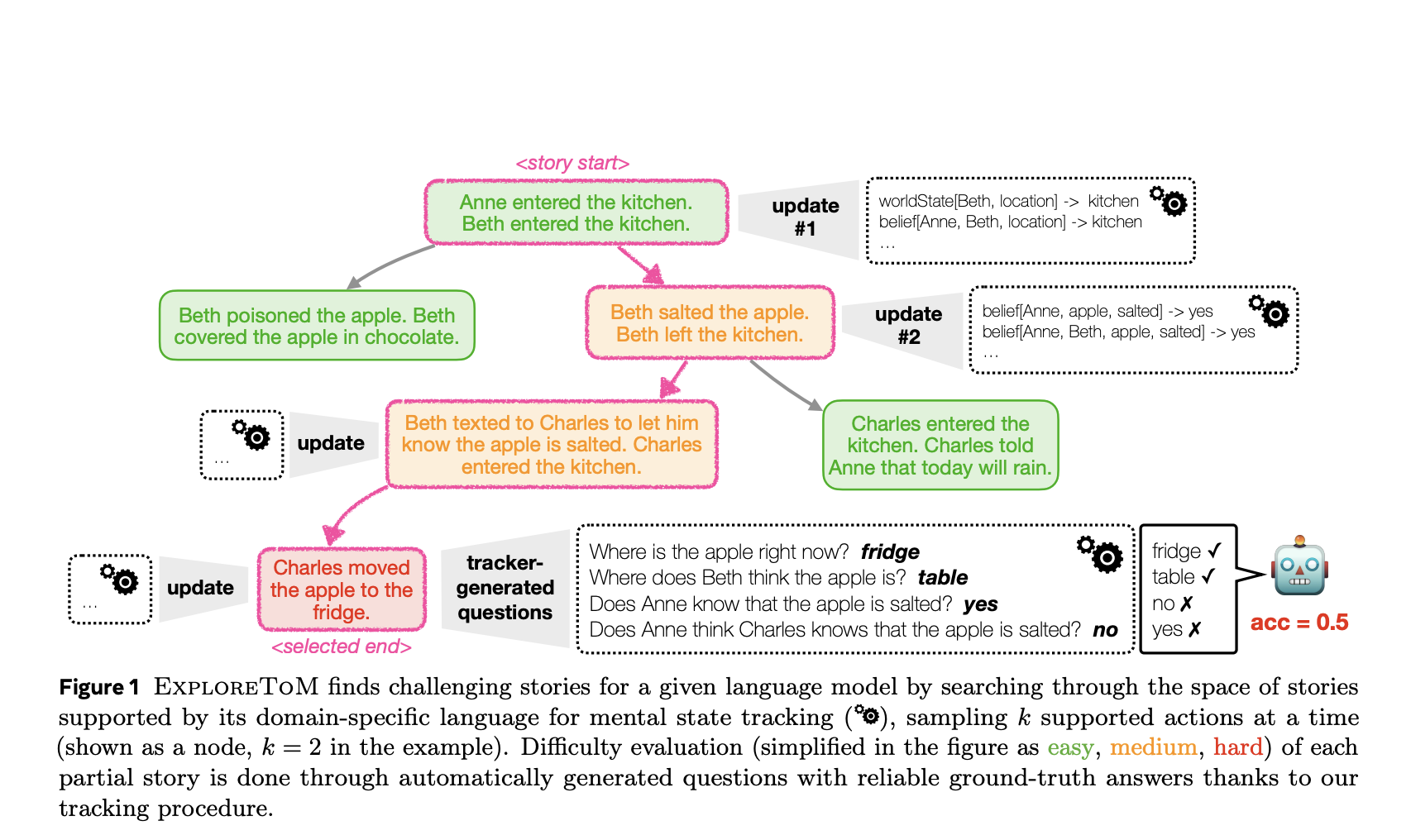

How ExploreToM Works

ExploreToM generates complex stories using a domain-specific language that tracks each character's mental state throughout the narrative, so every story targets specific aspects of ToM reasoning. A* search steers generation toward scenarios that existing models find hard, producing a rich dataset. The framework also simulates situations in which characters hold different beliefs about the same events, making the evaluation more realistic.

Performance Insights

In tests, GPT-4o and Llama-3.1-70B scored accuracies as low as 9% and 0%, respectively, on ExploreToM-generated data. Fine-tuning on this data, however, improved accuracy on the classic ToMi benchmark by 27 points. This highlights the value of challenging training data for improving ToM in AI.
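A "27-point" improvement is an absolute difference in accuracy (percentage points), not a relative percentage gain. The sketch below shows the distinction on four made-up questions; the data is purely illustrative and does not come from the paper.

```python
def accuracy(predictions: list[str], gold: list[str]) -> float:
    """Percentage of questions answered exactly right."""
    assert len(predictions) == len(gold) and gold, "need equal-length, non-empty lists"
    return 100.0 * sum(p == g for p, g in zip(predictions, gold)) / len(gold)

# Toy data (not from the paper): four belief questions, before and after fine-tuning.
gold   = ["basket", "box", "box",    "basket"]
before = ["box",    "box", "basket", "box"]
after  = ["basket", "box", "box",    "box"]

acc_before = accuracy(before, gold)               # 25.0
acc_after = accuracy(after, gold)                 # 75.0
point_gain = acc_after - acc_before               # 50.0 percentage points (absolute)
relative_gain = 100.0 * point_gain / acc_before   # 200.0 percent (relative)
print(acc_before, acc_after, point_gain, relative_gain)
```

Reporting the absolute gain in points avoids the inflated-looking relative figures that arise when the starting accuracy is low.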

Key Takeaways from ExploreToM

- ExploreToM uses A* search to generate datasets that expose gaps in ToM reasoning.

- Low model accuracy on these datasets shows the need for stronger evaluation standards.

- Fine-tuning on ExploreToM data yields significant gains, including a 27-point improvement on ToMi.

- The framework supports complex scenarios, improving the realism of evaluations.

- ExploreToM allows for large-scale data generation to challenge even advanced AI models.

Conclusion

ExploreToM addresses shortcomings of existing benchmarks and offers a scalable approach to data generation. It lays the groundwork for advances in AI's ability to handle complex social reasoning. The work exposes the limitations of current models and shows how high-quality training data can improve AI's understanding of human interactions.
