Understanding the Limitations of Large Language Models (LLMs)

Large Language Models (LLMs) have improved how we process language, but they face challenges due to their reliance on tokenization. Tokenization splits text into units drawn from a fixed vocabulary that is chosen before training, which can lead to inefficiencies and biases, especially across languages or with noisy and structured data. Because every token receives the same amount of computation regardless of how hard it is to predict, this method also limits how well the models can scale and adapt to various types of information.

Introducing Byte Latent Transformer (BLT) by Meta AI

Meta AI has developed the Byte Latent Transformer (BLT) to overcome these challenges. BLT does not use tokenization; instead, it processes raw byte sequences and groups them into patches according to how hard the next byte is to predict (the entropy of the next-byte prediction). This approach allows BLT to perform as well as, or better than, traditional tokenized models while being more efficient and robust.
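To make "raw byte sequences" concrete, here is a minimal sketch of the input a byte-level model sees. The sample strings and the helper name `to_bytes` are illustrative assumptions, not part of BLT's code; the point is only that any text in any language maps to values between 0 and 255 without a learned vocabulary.

```python
# Illustrative sample strings (assumptions chosen for this sketch).
samples = {
    "English": "hello",
    "German": "größe",
    "Japanese": "こんにちは",
}

def to_bytes(text: str) -> list[int]:
    """Encode text as UTF-8 and return the byte values (each in 0..255)."""
    return list(text.encode("utf-8"))

for language, text in samples.items():
    ids = to_bytes(text)
    print(f"{language}: {len(text)} characters -> {len(ids)} bytes")
```

Note that non-Latin scripts take more bytes per character, which is one reason grouping bytes into larger units matters for efficiency.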

How BLT Works

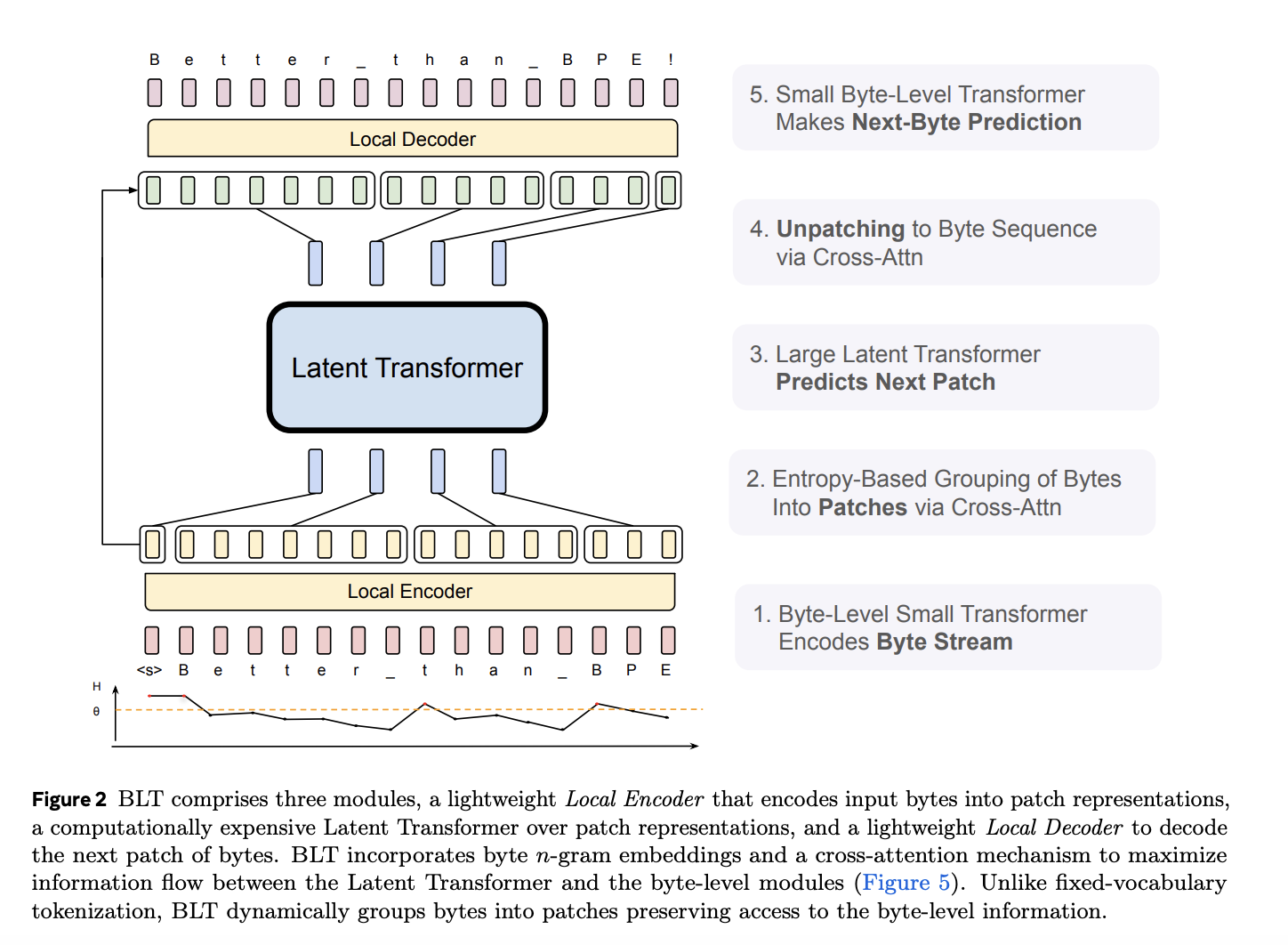

BLT uses a dynamic patching method that encodes bytes into variable-sized groups, called patches. Predictable stretches of data are merged into long patches, while hard-to-predict stretches are split into shorter ones, so the model focuses computational power on the most complex parts of the data. Unlike tokenization with a fixed vocabulary, BLT can handle a wider variety of inputs effectively.
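The idea of dynamic patching can be sketched in a few lines. This is a toy illustration under stated assumptions, not Meta's implementation: a simple bigram count model stands in for the small byte-level language model that estimates uncertainty, and the function names (`train_bigram`, `next_byte_entropy`, `patch`) and the threshold value are hypothetical.

```python
import math
from collections import Counter, defaultdict

def train_bigram(corpus: bytes):
    """Count next-byte frequencies for each preceding byte (toy stand-in model)."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(corpus, corpus[1:]):
        counts[prev][nxt] += 1
    return counts

def next_byte_entropy(counts, prev: int) -> float:
    """Shannon entropy, in bits, of the next-byte distribution after `prev`."""
    dist = counts.get(prev)
    if not dist:
        return 8.0  # unseen context: assume maximum uncertainty over 256 values
    total = sum(dist.values())
    return -sum((c / total) * math.log2(c / total) for c in dist.values())

def patch(data: bytes, counts, threshold: float) -> list[bytes]:
    """Start a new patch wherever the estimated next-byte entropy exceeds threshold."""
    if not data:
        return []
    patches, start = [], 0
    for i in range(1, len(data)):
        if next_byte_entropy(counts, data[i - 1]) > threshold:
            patches.append(data[start:i])
            start = i
    patches.append(data[start:])
    return patches

corpus = b"the cat sat on the mat. the cat ate the rat."
counts = train_bigram(corpus)
for p in patch(b"the cat sat", counts, threshold=1.0):
    print(p)
```

A higher threshold produces fewer, longer patches, and a lower threshold produces more, shorter ones, which is the knob that trades compute for granularity in this sketch.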

Key Features of BLT

- Local Encoder: This lightweight component transforms byte sequences into patch representations, optimizing resource use.

- Latent Transformer: This larger model processes the patch representations. Because harder regions of the input are split into more patches, they receive more of its computation.

- Local Decoder: This reconstructs the byte sequences, allowing for seamless training without tokenization.

By adapting patch sizes, BLT reduces the computational demands typically associated with traditional methods, enhancing its ability to manage diverse data types.
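The data flow through the three components above can be sketched as follows. This is only a toy illustration of the shapes involved, assuming simple pooling and averaging in place of the real neural layers; all three function bodies are stand-ins invented for this sketch and do not reflect how BLT actually computes its representations.

```python
from typing import List

Vector = List[float]

def local_encoder(patches: List[bytes]) -> List[Vector]:
    """Toy stand-in: pool each patch into one vector (scaled mean byte, length)."""
    return [[sum(p) / len(p) / 255.0, float(len(p))] for p in patches]

def latent_transformer(patch_vecs: List[Vector]) -> List[Vector]:
    """Toy stand-in for the large model: causal running mean over patches."""
    out, running = [], [0.0, 0.0]
    for i, vec in enumerate(patch_vecs, start=1):
        running = [r + v for r, v in zip(running, vec)]
        out.append([r / i for r in running])
    return out

def local_decoder(latent: List[Vector], patches: List[bytes]) -> List[Vector]:
    """Toy stand-in: hand each patch's latent vector to every byte position in it."""
    return [vec for vec, p in zip(latent, patches) for _ in p]

patches = [b"The", b" ", b"quick", b" fox"]
latent = latent_transformer(local_encoder(patches))
per_byte = local_decoder(latent, patches)
print(len(latent), "latent steps for", len(per_byte), "bytes")
```

The point of the sketch is the step count: the expensive middle stage runs once per patch, while only the lightweight encoder and decoder touch every byte.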

Performance Advantages of BLT

BLT compares favorably with traditional models in several key areas. It achieves similar or better results than leading tokenized models while using up to 50% fewer floating-point operations (FLOPs) at inference time. This efficiency means BLT can scale effectively without sacrificing accuracy.
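A rough back-of-the-envelope calculation shows where such savings can come from. The numbers below are illustrative assumptions, not figures from this article: if a tokenizer averages 4 bytes per token and patches average 8 bytes, the large model runs half as many steps over the same text. The sketch ignores the cost of the lightweight local encoder and decoder, so it is an upper bound on the saving rather than a measurement.

```python
def relative_large_model_steps(avg_token_bytes: float, avg_patch_bytes: float) -> float:
    """Ratio of large-model steps: patch-based model vs. token-based model."""
    return avg_token_bytes / avg_patch_bytes

num_bytes = 1_000_000     # size of the input text in bytes (illustrative)
avg_token_bytes = 4.0     # assumed average token length, not from the article
avg_patch_bytes = 8.0     # assumed average patch length, not from the article

token_steps = num_bytes / avg_token_bytes
patch_steps = num_bytes / avg_patch_bytes
ratio = relative_large_model_steps(avg_token_bytes, avg_patch_bytes)

print(f"token-based steps: {token_steps:.0f}")
print(f"patch-based steps: {patch_steps:.0f}")
print(f"relative large-model steps: {ratio:.2f}")
```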

On various benchmarks, BLT performs well on reasoning tasks and on tasks that depend on character-level detail. Because it works directly on bytes, it is particularly robust to noisy input such as misspellings, and it handles structured data well, making it suitable for a wide range of applications, including multilingual contexts.

Conclusion: The Future of AI with BLT

Meta AI’s Byte Latent Transformer is a significant advancement in language model design. By eliminating the need for tokenization, BLT offers improved efficiency, scalability, and robustness. The accompanying paper reports scaling experiments with models of up to 8B parameters trained on up to 4T bytes, which suggests the approach holds up at large scale.

As AI continues to evolve, BLT sets a new standard for adaptable and efficient models. For businesses looking to leverage AI, exploring BLT can redefine operations and enhance competitiveness.

Get Involved

For more information, check out the Paper and GitHub Page.
