Transforming AI with Insight-RAG

Challenges of Traditional RAG Frameworks

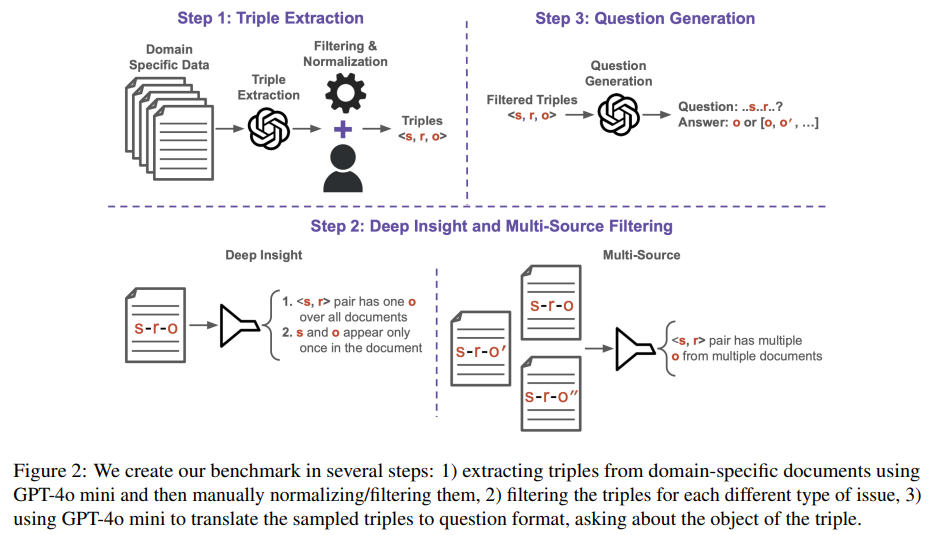

Retrieval-Augmented Generation (RAG) frameworks have gained popularity for enhancing Large Language Models (LLMs) by integrating external knowledge. However, traditional RAG methods typically rank documents by their surface-level relevance to the query, so they can miss insights that are buried in the text or spread across several sources. They struggle with tasks that require synthesizing information from diverse qualitative data or analyzing intricate content, such as legal or business texts.
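To make the surface-level relevance problem concrete, here is a minimal sketch of a naive retriever that ranks documents purely by word overlap with the query. It is a deliberately simplified stand-in (production RAG systems typically use BM25 or dense embeddings), and the function names and the two toy contract snippets are hypothetical, chosen only for illustration.

```python
import re


def tokenize(text: str) -> list[str]:
    # Lowercase the text and keep only alphanumeric word tokens.
    return re.findall(r"[a-z0-9]+", text.lower())


def overlap_score(query: str, doc: str) -> int:
    # Surface-level relevance: number of distinct query tokens that also appear in the document.
    return len(set(tokenize(query)) & set(tokenize(doc)))


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    # Rank documents by lexical overlap (highest first) and return the top k.
    return sorted(docs, key=lambda doc: overlap_score(query, doc), reverse=True)[:k]


# Hypothetical toy corpus: only the second snippet actually answers the question.
docs = [
    "The contract renewal date is listed in the contract summary.",
    "Clause 14 lets either party terminate after a missed payment.",
]
query = "When can the contract be terminated?"

# Picks the renewal-date snippet: it shares "the" and "contract" with the query,
# while "terminate" does not literally match "terminated".
print(retrieve(query, docs))
```

The relevant clause scores zero because it phrases the idea differently, which is the kind of missed insight that motivates adding a dedicated insight-extraction step.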

The Need for Improvement

While earlier RAG models improved accuracy on tasks such as summarization and open-domain question answering, their retrieval mechanisms were not effective at extracting nuanced information. This gap highlighted the need for more advanced methods that can handle complex, non-decomposable tasks.

Current Trends in Insight Extraction

Recent developments in insight extraction have shown that LLMs can mine detailed, context-specific information from unstructured text. Transformer-based systems such as OpenIE6 have improved the identification of key details, and LLM applications have expanded into areas such as keyphrase extraction and document mining.

Introducing Insight-RAG

Megagon Labs has developed a framework named Insight-RAG, which extends traditional RAG with an intermediate insight-extraction step. This step gives the system a deeper understanding of the query's information needs before it retrieves relevant content.

How Insight-RAG Works

- Insight Identifier: This component analyzes the input query to determine its core informational requirements, narrowing down which context is relevant.

- Insight Miner: Utilizing a domain-specific LLM, the Insight Miner retrieves detailed content that aligns with the identified insights.

- Response Generator: This final step combines the original query with mined insights to produce a contextually rich and accurate response.
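The three stages above can be sketched as a small Python pipeline. This is a minimal illustration under stated assumptions, not Megagon Labs' implementation: each model is represented as a hypothetical function from prompt to text, and the `InsightRAG` class, the prompt wording, and the stub models are invented for the example.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical model interface: a function that maps a prompt to generated text.
LLM = Callable[[str], str]


@dataclass
class InsightRAG:
    identifier_llm: LLM  # analyzes the query (assumed here to be a general-purpose LLM)
    miner_llm: LLM       # stands in for the domain-specific Insight Miner
    generator_llm: LLM   # writes the final response

    def identify_insights(self, query: str) -> list[str]:
        """Insight Identifier: ask which pieces of information the query needs."""
        prompt = (
            "List, one per line, the pieces of information needed to answer:\n"
            f"{query}"
        )
        raw = self.identifier_llm(prompt)
        # Strip whitespace and leading bullet markers, then drop empty lines.
        cleaned = (line.strip().lstrip("-*").strip() for line in raw.splitlines())
        return [item for item in cleaned if item]

    def mine_insights(self, insights: list[str]) -> list[str]:
        """Insight Miner: query the domain-specific model once per identified insight."""
        return [self.miner_llm(f"Provide details about: {insight}") for insight in insights]

    def answer(self, query: str) -> str:
        """Response Generator: combine the original query with the mined insights."""
        mined = self.mine_insights(self.identify_insights(query))
        context = "\n".join(f"- {m}" for m in mined)
        prompt = f"Insights:\n{context}\n\nQuestion: {query}\nAnswer:"
        return self.generator_llm(prompt)


# Hypothetical stub models for illustration only; replace with real model clients.
def fake_identifier(prompt: str) -> str:
    return "- termination conditions\n- notice period\n"


def fake_miner(prompt: str) -> str:
    # Echo back the insight that was requested, tagged as "mined".
    return "[mined] " + prompt.split(": ", 1)[1]


def fake_generator(prompt: str) -> str:
    return prompt  # echo the prompt so the assembled context is visible


rag = InsightRAG(fake_identifier, fake_miner, fake_generator)
print(rag.answer("When can the contract be terminated?"))
```

Keeping the three roles as separate callables mirrors the description above, where the Insight Miner relies on a domain-specific model; in practice each stub would be replaced by a call to an actual model.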

Performance Evaluation

Researchers evaluated Insight-RAG on three benchmarks built from scientific paper abstracts in the AAN and OC datasets. The benchmarks focus on extracting deeply buried insights, integrating information from multiple sources, and recommending citations. Insight-RAG consistently outperformed traditional RAG methods, particularly when the relevant information was subtle or distributed across documents.

Key Findings

- Insight-RAG excels in extracting hidden details and integrating information from multiple documents.

- It is effective for tasks beyond question answering, demonstrating broader applicability.

- With DeepSeek-R1 and Llama-3.3 as the underlying models, Insight-RAG achieved strong results across all benchmarks, indicating that the framework is robust to the choice of model.

Future Directions

Looking ahead, Insight-RAG has the potential to expand into various fields, including law and medicine. Future enhancements could involve hierarchical insight extraction, handling multimodal data, integrating expert input, and exploring cross-domain insight transfer.

Conclusion

Insight-RAG represents a significant advancement in the retrieval-augmented generation landscape. By introducing an intermediate insight-extraction step, this framework addresses the shortcomings of traditional RAG approaches, enabling deeper insights and broader applicability across a range of tasks. As AI continues to evolve, investing in such solutions can meaningfully improve business processes and decision-making.

Explore AI Solutions

Discover how artificial intelligence can revolutionize your business operations. Consider the following steps:

- Identify processes suitable for automation and explore areas where AI can add value.

- Define key performance indicators (KPIs) to measure the impact of AI investments on your business.

- Select customizable tools that align with your specific objectives.

- Start small, gather effectiveness data, and gradually expand your AI initiatives.

For guidance on managing AI in your business, contact us at hello@itinai.ru or follow us on our social media channels.