Understanding the Challenge in Evaluating Vision-Language Models

Evaluating vision-language models (VLMs) is complex because they need to be tested across a wide range of real-world tasks. Current benchmarks often cover only a narrow set of tasks, so they give an incomplete picture of what models can do. This gap matters even more for newer multimodal models, which need extensive testing across varied scenarios.

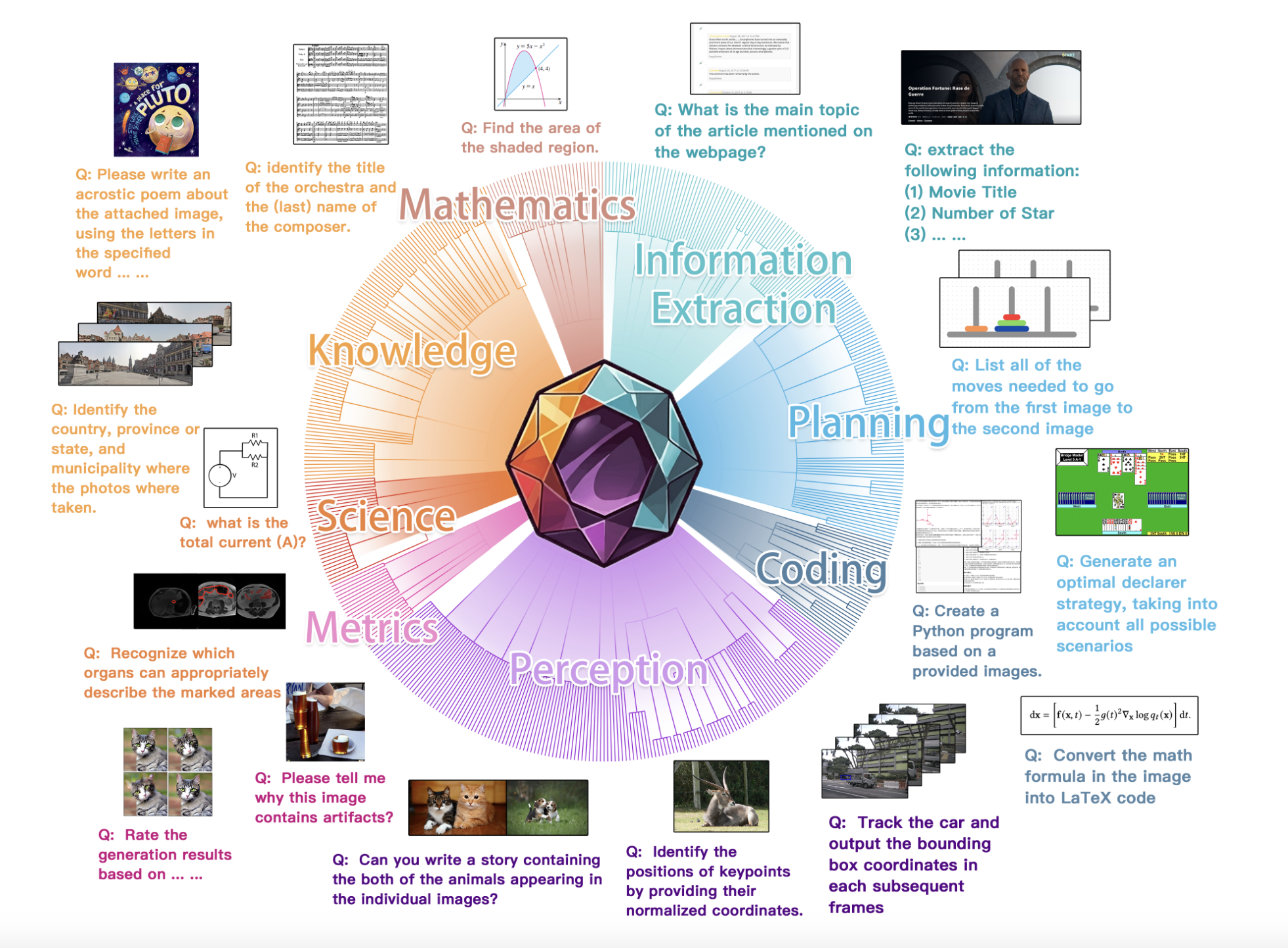

Introducing MEGA-Bench: A Comprehensive Benchmark

The MEGA-Bench Team has developed MEGA-Bench, a benchmark that spans over 500 real-world tasks. It is designed to assess multimodal models across a broader range of inputs, outputs, and skills than previous benchmarks.

Key Features of MEGA-Bench

- Diverse Outputs: Whereas many older benchmarks rely mainly on multiple-choice questions, MEGA-Bench includes a variety of output formats, such as numbers, phrases, code, LaTeX, and JSON.

- Comprehensive Task Coverage: It features 505 multimodal tasks curated by 16 experts, categorized by application type, input type, output format, and skills needed.

- Extensive Metrics: MEGA-Bench includes over 40 metrics for detailed analysis of model performance.

- Interactive Visualization: Users can explore model strengths and weaknesses easily, making MEGA-Bench a practical evaluation tool.
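Supporting many output formats means each task needs a scorer that matches its answer type, rather than a single multiple-choice checker. The sketch below illustrates that idea under stated assumptions: the format names, the three metric functions, the numeric tolerance, and the unweighted average over tasks are all hypothetical choices made for illustration. They are not MEGA-Bench's actual code, metrics, or scoring rules.

```python
import json
import math
from typing import Callable, Dict, List, Tuple

# Hypothetical per-format metrics (illustrative only, not MEGA-Bench's own).

def exact_match(pred: str, ref: str) -> float:
    """Case- and whitespace-insensitive match, e.g. for short phrases."""
    return float(pred.strip().lower() == ref.strip().lower())

def numeric_match(pred: str, ref: str, rel_tol: float = 1e-3) -> float:
    """A number counts as correct if it is within a relative tolerance (assumed value)."""
    try:
        return float(math.isclose(float(pred), float(ref), rel_tol=rel_tol))
    except ValueError:
        return 0.0  # an unparseable prediction scores zero

def json_field_match(pred: str, ref: str) -> float:
    """Fraction of reference JSON fields reproduced exactly in the prediction."""
    try:
        p, r = json.loads(pred), json.loads(ref)
    except json.JSONDecodeError:
        return 0.0  # invalid JSON scores zero
    if not isinstance(p, dict) or not isinstance(r, dict) or not r:
        return float(p == r)  # fall back to whole-value equality
    return sum(p.get(k) == v for k, v in r.items()) / len(r)

# Hypothetical mapping from a task's declared output format to its metric.
METRICS: Dict[str, Callable[[str, str], float]] = {
    "phrase": exact_match,
    "number": numeric_match,
    "json": json_field_match,
}

def score_task(output_format: str, pairs: List[Tuple[str, str]]) -> float:
    """Mean metric value over (prediction, reference) pairs for one task."""
    metric = METRICS[output_format]
    return sum(metric(p, r) for p, r in pairs) / len(pairs)

def overall_score(task_scores: Dict[str, float]) -> float:
    """Unweighted mean over tasks, so every task counts equally (an assumption)."""
    return sum(task_scores.values()) / len(task_scores)

# Usage with made-up predictions for three hypothetical tasks.
tasks = {
    "chart_value": score_task("number", [("42.0", "42"), ("17", "18")]),
    "ui_element": score_task("phrase", [("Submit button", "submit button")]),
    "doc_fields": score_task(
        "json",
        [('{"date": "2024-10-01", "total": 5}', '{"date": "2024-10-01", "total": 7}')],
    ),
}
overall = overall_score(tasks)
print(tasks)
print(overall)
```

Dispatching on the output format keeps each metric small and lets partially correct structured answers (such as JSON with one wrong field) earn partial credit, which a single exact-match check would miss.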

Insights from MEGA-Bench Evaluations

Testing state-of-the-art VLMs with MEGA-Bench revealed some important findings:

- Top Performance: GPT-4o achieved the highest overall score, outperforming the other flagship models by 3.5%.

- Open-Source Success: Qwen2-VL performed nearly as well as flagship proprietary models and surpassed the second-best open-source model by 10%.

- Efficiency: Among the smaller, efficiency-oriented models, Gemini 1.5 Flash performed best, excelling in user interface and document tasks.

- Chain-of-Thought Prompting: Proprietary models benefited from this technique, while open-source models did not leverage it effectively.

Why MEGA-Bench Matters

MEGA-Bench is a significant step forward in multimodal benchmarking. It offers a thorough evaluation of VLM capabilities across a wide range of input and output types. This benchmark helps developers and researchers understand and improve VLMs for practical applications, setting a new standard in model evaluation.

Learn More

Check out the Paper and Project for more details.

Transform Your Business with AI

Use insights from benchmarks like MEGA-Bench to guide your company's AI efforts:

- Identify Automation Opportunities: Find customer interaction points that can benefit from AI.

- Define KPIs: Ensure your AI initiatives have measurable impacts.

- Select an AI Solution: Choose tools that fit your needs and allow customization.

- Implement Gradually: Start small, gather data, and expand AI usage wisely.