Understanding Tensor Product Attention (TPA)

Large language models (LLMs) are central to natural language processing (NLP) and excel at generating and understanding text. However, they struggle with long input sequences: during inference, the cache of keys and values (the KV cache) grows with sequence length, so memory becomes the bottleneck. This limits how much context a model can handle in practical applications.

Introducing Tensor Product Attention (TPA)

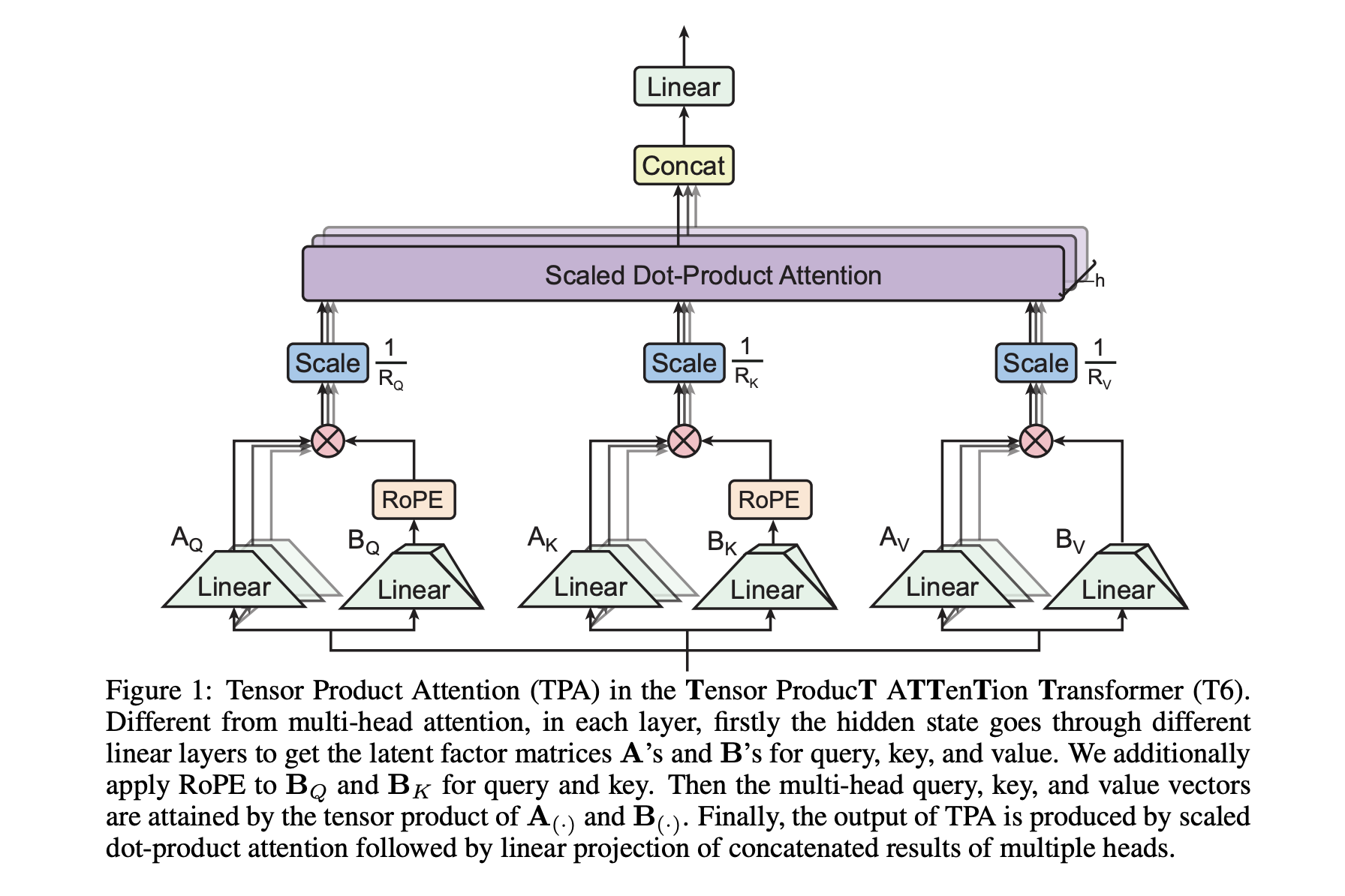

A research team from Tsinghua University and other institutions has developed TPA, a new attention mechanism aimed at this memory bottleneck. TPA uses tensor decompositions to represent queries, keys, and values (QKV) compactly, which significantly reduces memory usage during inference. This allows LLMs to handle longer sequences without sacrificing performance.

Key Benefits of TPA

- Memory Efficiency: TPA reduces the size of the KV cache, allowing for longer sequences to be processed effectively.

- Performance Improvement: TPA maintains or even enhances model performance compared to traditional methods.

- Seamless Integration: TPA works well with existing architectures, making it easy to implement.
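To make the memory claim concrete, here is a rough back-of-the-envelope sketch. It assumes that standard MHA caches a full key and a full value vector per head for every token, and that TPA instead caches a small number of rank-1 factor pairs (one factor over heads, one over the head dimension) for keys and for values. The function names, the ranks, and the model sizes below are illustrative assumptions, not figures from the article.

```python
def mha_cache_per_token(num_heads: int, head_dim: int) -> int:
    """Numbers cached per token by standard MHA: full K and V for every head."""
    return 2 * num_heads * head_dim


def tpa_cache_per_token(num_heads: int, head_dim: int, rank_k: int, rank_v: int) -> int:
    """Numbers cached per token under the assumed factorized layout.

    Each rank-1 component stores one vector over heads and one over the
    head dimension, for keys (rank_k components) and values (rank_v components).
    """
    return (rank_k + rank_v) * (num_heads + head_dim)


# Hypothetical model shape and ranks, chosen only for illustration.
num_heads, head_dim = 32, 128
mha = mha_cache_per_token(num_heads, head_dim)
tpa = tpa_cache_per_token(num_heads, head_dim, rank_k=2, rank_v=2)
print(f"MHA: {mha} values/token, TPA: {tpa} values/token, ratio: {mha / tpa:.1f}x")
```

Under these assumptions the saving depends on the ranks: the smaller they are relative to the number of heads and the head dimension, the smaller the cache.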

Technical Advantages

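TPA dynamically factorizes QKV activations into low-rank components. The factors are computed from the input itself rather than fixed in advance, so the representation adapts to each token. Because only the compact factors need to be cached, this significantly reduces memory consumption compared to standard multi-head attention (MHA).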
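The idea of an input-dependent low-rank factorization can be sketched in NumPy as follows. This is a minimal illustration, not the authors' implementation: the projection matrices `W_a` and `W_b`, the averaging over rank components, and all dimensions are assumptions made for the example.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, num_heads, head_dim, rank = 64, 8, 16, 2  # illustrative sizes

# Hypothetical learned projections that produce the factors from a token.
W_a = rng.standard_normal((rank * num_heads, d_model)) / np.sqrt(d_model)
W_b = rng.standard_normal((rank * head_dim, d_model)) / np.sqrt(d_model)


def factorize(x):
    """Map one token's hidden state to `rank` pairs of (head, feature) factors."""
    a = (W_a @ x).reshape(rank, num_heads)  # one vector over heads per component
    b = (W_b @ x).reshape(rank, head_dim)   # one vector over features per component
    return a, b


def reconstruct(a, b):
    """Average of outer products a_r (x) b_r, giving a [num_heads, head_dim] matrix."""
    return (a.T @ b) / rank


x_t = rng.standard_normal(d_model)  # hidden state of a single token
a_t, b_t = factorize(x_t)
K_t = reconstruct(a_t, b_t)         # per-head keys for this token

print("full key matrix:", K_t.shape, "=", K_t.size, "numbers")
print("cached factors: ", a_t.size + b_t.size, "numbers")
print("matrix rank:    ", np.linalg.matrix_rank(K_t))
```

In this sketch only `a_t` and `b_t` would need to be kept in the cache; the full per-head key matrix can be rebuilt from them when attention is computed.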

Additionally, TPA integrates effectively with Rotary Position Embedding (RoPE), ensuring that positional information is preserved while enhancing caching efficiency.
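One way to see why a factorized key can coexist with RoPE: the rotation is a linear map applied along the feature dimension, so rotating the feature-side factor gives the same result as rotating the reconstructed key. The sketch below checks this numerically. The `rope` helper uses the common interleaved-pair formulation, and the factor shapes are illustrative assumptions rather than details taken from the article.

```python
import numpy as np


def rope(vectors, position, base=10000.0):
    """Apply a rotary position embedding to each row of `vectors` ([n, d], d even)."""
    d = vectors.shape[-1]
    freqs = base ** (-np.arange(0, d, 2) / d)  # one frequency per feature pair
    angles = position * freqs
    cos, sin = np.cos(angles), np.sin(angles)
    even, odd = vectors[..., 0::2], vectors[..., 1::2]
    out = np.empty_like(vectors)
    out[..., 0::2] = even * cos - odd * sin
    out[..., 1::2] = even * sin + odd * cos
    return out


rng = np.random.default_rng(0)
num_heads, head_dim, rank, position = 8, 16, 2, 5  # illustrative sizes
a = rng.standard_normal((rank, num_heads))  # head-side factors
b = rng.standard_normal((rank, head_dim))   # feature-side factors

# Option 1: rebuild the full key matrix, then rotate every head's key.
full_then_rotate = rope((a.T @ b) / rank, position)

# Option 2: rotate only the small feature-side factors, then rebuild.
rotate_then_full = (a.T @ rope(b, position)) / rank

print(np.allclose(full_then_rotate, rotate_then_full))
```

Because the two orders agree, the already-rotated feature-side factors can be cached directly, which keeps positional information intact without storing full keys.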

Results and Performance

In the reported experiments, TPA outperformed various baseline models on language modeling tasks, converging faster and reaching lower validation losses. It also performed well on downstream tasks, achieving high accuracy in zero-shot and two-shot evaluations.

Conclusion

TPA offers a practical solution to the memory challenges faced by large language models, making it a valuable tool for real-world applications. Its efficient design and strong performance across benchmarks highlight its potential to enhance LLM capabilities.

For more information, check out the Paper and GitHub Page.
