Large Language Models (LLMs) and Their Reasoning Capabilities

LLMs can solve math problems, make logical inferences, and assist with programming. Their success typically depends on two methods: supervised fine-tuning (SFT) on human-annotated reasoning data, and inference-time search guided by external verifiers. SFT teaches structured reasoning, but it demands substantial human effort and is capped by the quality of the teacher model that supplies the training data. Inference-time strategies, such as verifier-guided sampling, improve accuracy but increase compute cost. This raises a central question: can an LLM learn to reason on its own, without heavy supervision or external verifiers? Satori, a 7B-parameter LLM, is designed to internalize both reasoning and self-improvement.

Introducing Satori

A Self-Reflective and Self-Exploratory Model

Developed by researchers from MIT and other institutions, Satori uses autoregressive search: while generating an answer, it reviews its own reasoning and explores new strategies without an external verifier. Rather than relying on extensive supervised fine-tuning, Satori uses a new approach called Chain-of-Action-Thought (COAT) reasoning. It is built on Qwen-2.5-Math-7B and trained in two stages: format tuning (FT), followed by large-scale self-improvement through reinforcement learning (RL).

Technical Details and Benefits of Satori

1. Format Tuning (FT) Stage

Training starts with a small dataset (around 10,000 samples) that teaches the model the COAT reasoning format, which includes three key actions:

- Continue: Extends the reasoning process.

- Reflect: Encourages a review of previous steps.

- Explore: Promotes considering different approaches.

Unlike typical Chain-of-Thought training, which follows a single linear sequence of steps, COAT lets the model decide during reasoning whether to continue, reflect, or explore.
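To make the three actions concrete, here is a minimal sketch of how a COAT-style reasoning trace could be split into labeled steps. The marker strings (`<|continue|>`, `<|reflect|>`, `<|explore|>`) and the function name `split_coat_trace` are assumptions made for illustration; the article names the actions but does not say how they are encoded in text.

```python
import re
from typing import List, Tuple

# Hypothetical marker strings for the three COAT actions (an assumption,
# not taken from the article).
ACTION_TOKENS = ("<|continue|>", "<|reflect|>", "<|explore|>")
_PATTERN = re.compile("(" + "|".join(re.escape(t) for t in ACTION_TOKENS) + ")")


def split_coat_trace(trace: str) -> List[Tuple[str, str]]:
    """Split a reasoning trace into (action, text) segments."""
    # With a capturing group, re.split keeps the markers:
    # [text_before, marker, text, marker, text, ...]
    parts = _PATTERN.split(trace)
    segments: List[Tuple[str, str]] = []

    # Text before the first marker is treated as an implicit "continue" step.
    head = parts[0].strip()
    if head:
        segments.append(("continue", head))

    for i in range(1, len(parts), 2):
        action = parts[i].strip("<|>")  # "<|reflect|>" -> "reflect"
        segments.append((action, parts[i + 1].strip()))
    return segments


trace = (
    "Let x = 3."
    "<|continue|>Then 2x = 6."
    "<|reflect|>Check: 2 * 3 = 6, so the step holds."
    "<|explore|>Alternatively, solve by substitution."
)
for action, text in split_coat_trace(trace):
    print(f"{action:>8}: {text}")
```

Representing each step as an (action, text) pair makes it easy to see where a trace reviews earlier work or switches strategy, which is the flexibility that plain Chain-of-Thought traces lack.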

2. Reinforcement Learning (RL) Stage

This stage applies large-scale self-improvement through reinforcement learning with a Restart and Explore (RAE) strategy. The model restarts from intermediate steps of its earlier attempts and refines the reasoning from there. It is rewarded for successful self-correction and for exploration, so it keeps learning without additional human input.
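The restart-and-reward idea can be sketched roughly as follows. This is an illustration under stated assumptions, not Satori's training code: the function names, the uniform choice of restart point, and the bonus values are all hypothetical.

```python
import random
from typing import List


def pick_restart_prefix(steps: List[str], rng: random.Random) -> List[str]:
    """Return a prefix of a past trajectory to resume reasoning from.

    Assumption: the restart point is chosen uniformly at random, and it is
    always shorter than the full trajectory so there is something to redo.
    """
    cut = rng.randrange(len(steps)) if steps else 0
    return steps[:cut]


def shaped_reward(
    final_correct: bool,
    restarted_from_failure: bool,
    used_explore: bool,
    correction_bonus: float = 0.5,  # hypothetical value
    explore_bonus: float = 0.1,     # hypothetical value
) -> float:
    """Outcome reward plus bonuses for self-correction and exploration."""
    reward = 1.0 if final_correct else 0.0
    # Extra credit when a previously failed attempt is turned into a correct one.
    if final_correct and restarted_from_failure:
        reward += correction_bonus
    # Small bonus for trying an alternative approach at all.
    if used_explore:
        reward += explore_bonus
    return reward


rng = random.Random(0)
past_attempt = ["set up equation", "divide both sides", "wrong sign", "final answer"]
prefix = pick_restart_prefix(past_attempt, rng)
print("resume after:", prefix)
print("reward:", shaped_reward(True, restarted_from_failure=True, used_explore=False))
```

In a real RL loop the policy would generate a new continuation from `prefix`, and the shaped reward would feed a policy-gradient update. Both of those parts are omitted here.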

Insights and Performance

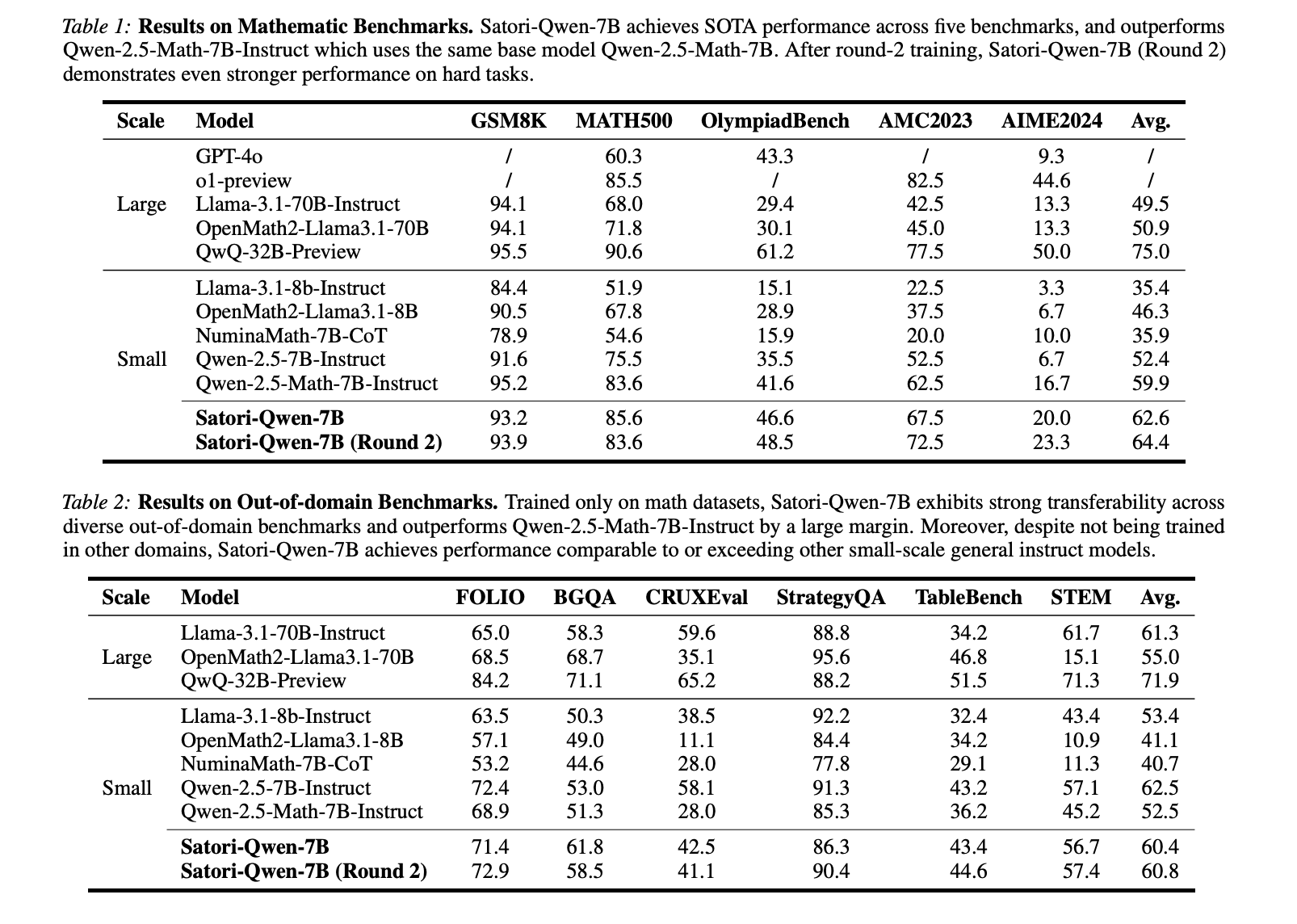

Evaluations show that Satori excels in various benchmarks, often outperforming models that depend on supervised fine-tuning. Key findings include:

- Math Performance: Satori outperforms Qwen-2.5-Math-7B-Instruct on several math datasets.

- Self-Improvement: With more reinforcement learning rounds, Satori continues to refine its abilities without human help.

- Generalization: Satori shows strong reasoning skills in diverse tasks beyond math, indicating adaptability.

- Efficiency: Satori achieves similar or better results than traditional models with far fewer training samples (10K vs. 300K).

Conclusion: Advancing Autonomous Learning in LLMs

Satori represents a significant advancement in LLM reasoning, showing that models can improve their reasoning without external help or high-quality teacher models. By integrating COAT reasoning, reinforcement learning, and autoregressive search, Satori enhances problem-solving accuracy and adaptability to new tasks. Future research may focus on refining these techniques and applying them to broader areas.

