Unlocking Up to 2x Speedup in LLaMA Models for Long-Context Applications

Practical Solutions and Value

Large Language Models (LLMs) are widely used in interactive chatbots and document analysis, but serving them with both low latency and high throughput is challenging, because techniques that improve one metric often degrade the other. A new approach called MagicDec shows that speculative decoding can improve both latency and throughput without sacrificing accuracy.

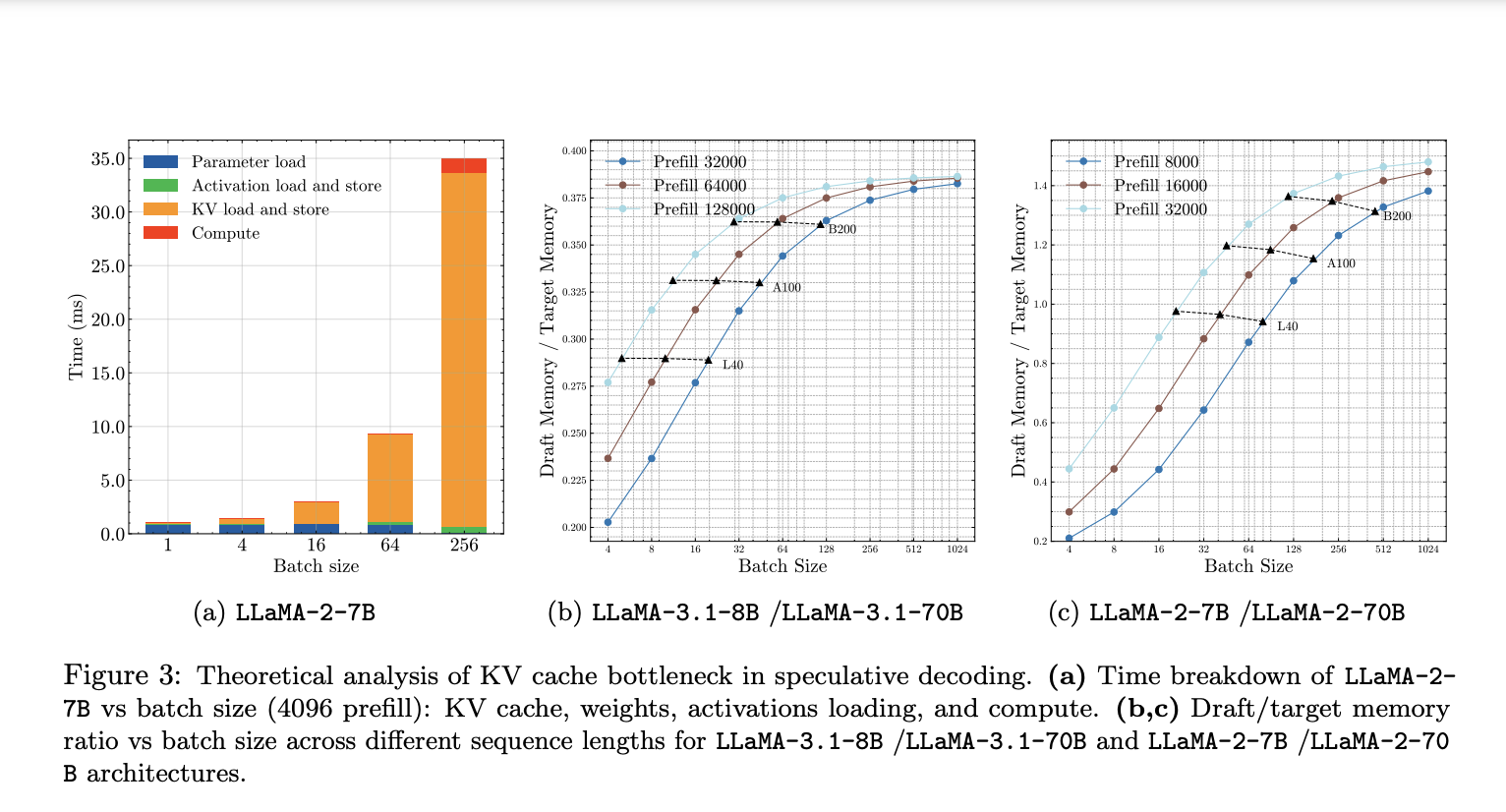

Existing methods for serving LLMs usually trade latency against throughput. Serving more requests simultaneously raises throughput, but it does not reduce the latency of individual requests. Lossy methods can improve both metrics, but only at the cost of model quality. Speculative decoding has shown promise for lowering latency, but its ability to improve throughput has been questioned, especially at larger batch sizes, where decoding was expected to become compute-bound and the extra work of drafting and verifying would no longer be free.
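The draft-then-verify loop behind speculative decoding, and why it does not change the output, can be sketched as follows. This is a minimal sketch of the greedy variant only, not MagicDec's implementation: `target` and `draft` are toy deterministic functions standing in as hypothetical models. The draft proposes `gamma` tokens, the target checks them, and the first mismatch is replaced by the target's own token, so the result is identical to decoding with the target alone. Real systems verify all proposed positions in one batched forward pass; here verification is simulated token by token, and `target_calls` counts those passes. The sampling variant uses a rejection-sampling acceptance rule instead, so that the target's output distribution is preserved.

```python
from typing import Callable, List, Tuple

# Hypothetical stand-in for a model: maps a token sequence to its greedy next token.
Model = Callable[[List[int]], int]


def greedy_decode(target: Model, prompt: List[int], n: int) -> List[int]:
    """Plain autoregressive decoding with the target model (the reference output)."""
    seq = list(prompt)
    for _ in range(n):
        seq.append(target(seq))
    return seq[len(prompt):]


def speculative_decode(target: Model, draft: Model, prompt: List[int],
                       n: int, gamma: int = 4) -> Tuple[List[int], int]:
    """Greedy speculative decoding: the draft proposes, the target verifies."""
    seq = list(prompt)
    target_calls = 0  # verification passes (one batched forward pass each in practice)
    while len(seq) - len(prompt) < n:
        # 1. The cheap draft model proposes gamma tokens autoregressively.
        ctx = list(seq)
        proposal = []
        for _ in range(gamma):
            tok = draft(ctx)
            proposal.append(tok)
            ctx.append(tok)
        # 2. The target checks every proposed position (simulated one by one here).
        target_calls += 1
        ctx = list(seq)
        accepted = []
        for tok in proposal:
            expected = target(ctx)
            if tok != expected:
                accepted.append(expected)  # replace the first mismatch with the target's token
                break
            accepted.append(tok)
            ctx.append(tok)
        else:
            accepted.append(target(ctx))  # all drafts accepted: the target adds a bonus token
        seq.extend(accepted)
    return seq[len(prompt):len(prompt) + n], target_calls


# Toy models (hypothetical): the draft agrees with the target except at every 5th position.
def target(seq: List[int]) -> int:
    return (sum(seq) * 31 + 7) % 50


def draft(seq: List[int]) -> int:
    tok = target(seq)
    return tok if len(seq) % 5 else (tok + 1) % 50


prompt = [1, 2, 3]
out, calls = speculative_decode(target, draft, prompt, n=20)
print(out == greedy_decode(target, prompt, 20), calls)
```

Because each verification pass yields between one and `gamma + 1` tokens, the target runs far fewer sequential passes than plain decoding whenever the draft is usually right, while every emitted token is one the target itself would have chosen.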

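MagicDec, developed by researchers from Carnegie Mellon University, Moffett AI, and Meta AI, takes a new approach to deploying speculative decoding for high-throughput inference. Its key observation is that with long contexts, loading the key-value (KV) cache, which grows with both batch size and sequence length, dominates the cost of each decoding step, so decoding remains memory-bound even at large batch sizes. By combining intelligent drafting strategies with ways of addressing this KV cache bottleneck, MagicDec's speedup grows with batch size, reaching up to 2x for LLaMA models when serving batch sizes from 32 to 256 on 8 NVIDIA A100 GPUs.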
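Why KV cache loading changes the picture can be illustrated with a back-of-the-envelope roofline cost model. Everything here is an assumption for illustration, not MagicDec's analysis: roughly A100-class memory bandwidth and peak FLOP/s, a hypothetical 8B-parameter model with 128 KiB of KV cache per token, a fixed per-token acceptance rate `alpha`, and a draft that reuses the target weights but attends only to a fixed window of `draft_window` recent KV entries (one way to make drafting cheap at long context; MagicDec's actual drafting strategies may differ). Each forward pass costs the larger of its memory time and compute time, and the expected number of tokens per verification step is (1 - alpha^(gamma+1)) / (1 - alpha).

```python
from dataclasses import dataclass


@dataclass
class Hardware:
    mem_bw: float = 2.0e12  # bytes/s (assumed, roughly A100-class HBM bandwidth)
    flops: float = 312e12   # FLOP/s (assumed bf16 peak; real kernels achieve less)


@dataclass
class ModelCfg:
    params: float = 8e9                 # hypothetical 8B-parameter model
    bytes_per_param: float = 2.0        # fp16/bf16 weights
    kv_bytes_per_token: float = 131072  # assumed: 32 layers * 8 KV heads * 128 dims * 2 (K,V) * 2 bytes


def forward_time(hw: Hardware, m: ModelCfg, batch: int, new_tokens: int, kv_len: int) -> float:
    """Roofline-style estimate of one forward pass over `new_tokens` tokens per sequence."""
    weight_bytes = m.params * m.bytes_per_param
    kv_bytes = batch * kv_len * m.kv_bytes_per_token           # KV cache read for every sequence
    mem_time = (weight_bytes + kv_bytes) / hw.mem_bw
    compute_time = 2 * m.params * batch * new_tokens / hw.flops  # linear layers only
    return max(mem_time, compute_time)


def expected_tokens(alpha: float, gamma: int) -> float:
    """Expected tokens produced per verification step, assuming i.i.d. acceptance."""
    return (1 - alpha ** (gamma + 1)) / (1 - alpha)


def spec_speedup(hw: Hardware, m: ModelCfg, batch: int, ctx_len: int,
                 gamma: int = 3, alpha: float = 0.8, draft_window: int = 512) -> float:
    """Estimated speedup of speculative decoding over plain decoding."""
    baseline = forward_time(hw, m, batch, 1, ctx_len)                      # one token per step
    draft = forward_time(hw, m, batch, 1, min(ctx_len, draft_window))      # windowed-KV draft step
    verify = forward_time(hw, m, batch, gamma + 1, ctx_len)                # target checks gamma+1 tokens
    return expected_tokens(alpha, gamma) * baseline / (gamma * draft + verify)


if __name__ == "__main__":
    hw, m = Hardware(), ModelCfg()
    for ctx in (128, 8192):
        for b in (16, 64, 256):
            print(f"ctx={ctx:5d} batch={b:3d} est. speedup={spec_speedup(hw, m, b, ctx):.2f}")
```

Under these assumptions, at short context the windowed draft is no cheaper than the target, so speculation slows decoding down. At long context the target stays memory-bound, verifying several tokens costs about as much as generating one, and the estimated speedup rises with batch size. The specific numbers are artifacts of the assumed parameters, not MagicDec's measured results, and the model ignores GPU memory capacity, tensor parallelism, and attention FLOPs.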

This research has significant implications for LLM serving. By challenging the conventional belief that speculative decoding cannot improve throughput, MagicDec opens up new ways to optimize LLM inference. As long-context applications become more common, its ability to improve performance across a range of batch sizes and sequence lengths makes it particularly valuable.

MagicDec is a major step toward serving large language models efficiently. It paves the way for more efficient and scalable LLM applications, which are crucial for deploying these powerful models widely across use cases.

AI Solutions for Business

Want to evolve your company with AI and stay competitive? Use MagicDec to unlock up to 2x speedup in LLaMA models for long-context applications.

Discover how AI can redefine the way you work:

- Identify Automation Opportunities: Locate key customer interaction points that can benefit from AI.

- Define KPIs: Ensure your AI endeavors have measurable impacts on business outcomes.

- Select an AI Solution: Choose tools that align with your needs and provide customization.

- Implement Gradually: Start with a pilot, gather data, and expand AI usage judiciously.

For AI KPI management advice, connect with us at hello@itinai.com. For continuous insights into leveraging AI, follow us on Telegram or Twitter.

Discover how AI can redefine your sales processes and customer engagement. Explore solutions at itinai.com.