M1: A New Approach to AI Reasoning

Understanding the Need for Efficient Reasoning Models

Effective reasoning is critical for solving complex problems in fields such as mathematics and programming. Transformer-based models have improved markedly on these tasks, largely because they can carry out long chain-of-thought reasoning. However, these models have limitations, including:

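- Quadratic Computational Complexity: The cost of self-attention grows with the square of the sequence length, which makes long sequences expensive to process.

- Increased Costs: Techniques that improve accuracy, such as generating longer reasoning chains or sampling several answers per question, raise computational expenses further.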

- Scalability Issues: Transformers struggle with large-batch processing and lengthy contexts.
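
To make the quadratic-versus-linear gap concrete, here is a rough back-of-the-envelope sketch. It only counts pairwise attention scores against recurrent state updates; the function names are illustrative, and the count deliberately ignores constant factors, hidden size, causal masking, and caching, so it shows growth rates rather than real runtimes.

```python
def attention_score_count(seq_len: int) -> int:
    """Pairwise token interactions in full self-attention (per head, per layer)."""
    return seq_len * seq_len


def recurrent_step_count(seq_len: int) -> int:
    """State updates performed by a linear-time recurrent layer."""
    return seq_len


# Doubling the sequence length quadruples the attention count
# but only doubles the number of recurrent steps.
for n in (1_000, 8_000, 32_000):
    scores = attention_score_count(n)
    steps = recurrent_step_count(n)
    print(f"{n:>6} tokens: {scores:>13,} scores vs {steps:>6,} steps (ratio {scores // steps:,})")
```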

Exploring Alternative Architectures

To overcome these challenges, researchers have investigated various alternatives to transformer architectures, including:

- RNN-based Models: Offer better memory efficiency.

- State Space Models (SSMs): Allow for faster inference.

- Hybrid Models: Combine self-attention with subquadratic layers to enhance performance.

- Knowledge Distillation: Transfers capabilities from larger models to smaller, more efficient ones.
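
The memory advantage of recurrent and state space layers comes from carrying a fixed-size state instead of attending over every previous token. The sketch below is a toy diagonal linear recurrence, not M1's actual layer: the coefficients `a`, `b`, and `c` are hypothetical fixed values, whereas Mamba-style layers make such parameters depend on the input.

```python
from typing import List


def linear_recurrence(xs: List[float], a: List[float], b: List[float], c: List[float]) -> List[float]:
    """Run h_t = a * h_{t-1} + b * x_t (elementwise) and emit y_t = sum(c * h_t).

    The state h has len(a) entries no matter how long xs is, so memory use
    during generation stays constant as the sequence grows.
    """
    h = [0.0] * len(a)
    ys = []
    for x in xs:
        # Update every state channel from its previous value and the new input.
        h = [a_i * h_i + b_i * x for a_i, h_i, b_i in zip(a, h, b)]
        # Read out a scalar output from the current state.
        ys.append(sum(c_i * h_i for c_i, h_i in zip(c, h)))
    return ys


# An impulse input decays geometrically through a single state channel.
print(linear_recurrence([1.0, 0.0, 0.0], a=[0.5], b=[1.0], c=[1.0]))
```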

Introducing M1: A Hybrid Solution

Researchers from TogetherAI, Cornell University, the University of Geneva, and Princeton University have developed M1, a hybrid linear RNN reasoning model based on the Mamba architecture. M1 has been shown to:

- Outperform previous linear RNN models.

- Match the performance of state-of-the-art DeepSeek R1 distilled transformer reasoning models of similar scale.

- Achieve more than a 3x speedup in inference compared to similar-sized transformers.

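Because it generates faster, M1 can produce more candidate solutions within the same time budget, which makes accuracy-boosting techniques such as self-consistency and verification more affordable and makes the model a robust option for large-scale inference tasks.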
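
Self-consistency can be sketched as a simple majority vote over the final answers of several sampled solutions. The helper below is a minimal illustration, assuming the final answers have already been extracted as strings; the function name and the whitespace normalization are assumptions, not details from the M1 work.

```python
from collections import Counter
from typing import List, Optional


def majority_vote(answers: List[Optional[str]]) -> Optional[str]:
    """Return the most frequent final answer among sampled solutions.

    Answers that are missing or blank (e.g. a sample that never reached a
    final answer) are ignored. Returns None if nothing usable remains.
    """
    cleaned = [a.strip() for a in answers if a is not None and a.strip()]
    if not cleaned:
        return None
    # most_common(1) yields a list with the single (answer, count) pair.
    return Counter(cleaned).most_common(1)[0][0]


samples = ["42", "41", " 42 ", None, "42"]
print(majority_vote(samples))
```

A faster model helps here because the vote becomes more reliable as more samples are drawn, and throughput determines how many samples fit in a given time budget.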

Development and Training of M1

M1 is built using a three-stage process:

- Distillation: A pretrained transformer model is distilled into the Mamba architecture, improving performance with modified linear projections.

- Supervised Fine-Tuning (SFT): The model is fine-tuned on datasets focused on mathematical reasoning.

- Reinforcement Learning (RL): The model is further trained with GRPO (Group Relative Policy Optimization) to enhance reasoning capabilities and response diversity.
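
The core idea of GRPO is to score each sampled response relative to the other responses drawn for the same prompt, rather than against a learned value model. The sketch below shows only that group-relative advantage step, in its commonly described form (reward minus group mean, divided by group standard deviation). The use of the population standard deviation and the `eps` constant are assumptions, and the specific GRPO variant used for M1 may differ.

```python
from statistics import mean, pstdev
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """Normalize rewards within one group of responses to the same prompt.

    Responses that beat the group average get a positive advantage, and
    those below it get a negative one. eps avoids division by zero when
    every response in the group receives the same reward.
    """
    if not rewards:
        return []
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population standard deviation (assumption)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Four sampled answers to one math problem: 1.0 = correct, 0.0 = incorrect.
print(group_relative_advantages([1.0, 0.0, 0.0, 1.0]))
```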

Experimental Validation

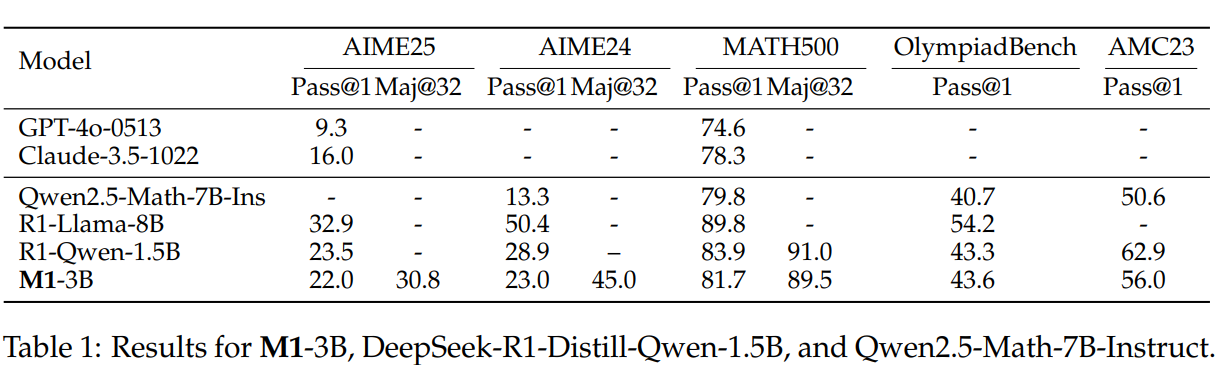

The M1 model was evaluated using various math benchmarks, including MATH500 and AIME25. The evaluation metrics included:

- Coverage (pass@k): Indicates the likelihood of generating a correct solution among multiple outputs.

- Inference Speed: Assesses efficiency in large-batch generation and handling longer sequences.
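
Coverage is often computed with the unbiased pass@k estimator popularized by code-generation evaluations: given n samples of which c are correct, pass@k = 1 - C(n-c, k) / C(n, k). The sketch below implements that formula; whether the M1 evaluation used exactly this estimator is an assumption, and the function name is illustrative.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate the probability that at least one of k samples is correct.

    n: total samples generated for the problem
    c: number of those samples that are correct
    k: number of samples the metric is allowed to draw (1 <= k <= n)
    """
    if not 0 <= c <= n:
        raise ValueError("c must satisfy 0 <= c <= n")
    if not 1 <= k <= n:
        raise ValueError("k must satisfy 1 <= k <= n")
    # C(n-c, k) / C(n, k) is the chance that all k drawn samples are incorrect.
    return 1.0 - comb(n - c, k) / comb(n, k)


# 10 samples, 3 correct: chance that a single draw, or any of 5 draws, succeeds.
print(pass_at_k(10, 3, 1), pass_at_k(10, 3, 5))
```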

The results show that M1 is competitive with existing state-of-the-art reasoning models of similar size on these benchmarks.

Conclusion

In summary, M1 represents a significant advance in efficient AI reasoning models. By combining the Mamba architecture with distillation, supervised fine-tuning, and reinforcement learning, M1 achieves performance comparable to top models of similar size while offering over three times the inference speed of similar-sized transformers. This efficiency makes it an attractive option for businesses looking to apply AI to mathematical reasoning tasks. The extra throughput also makes sampling-heavy strategies such as self-consistency more practical, positioning M1 as a strong alternative to traditional transformer-based architectures.
