Practical AI Solutions for Your Business

LMMS-EVAL: A Unified and Standardized Multimodal AI Benchmark Framework

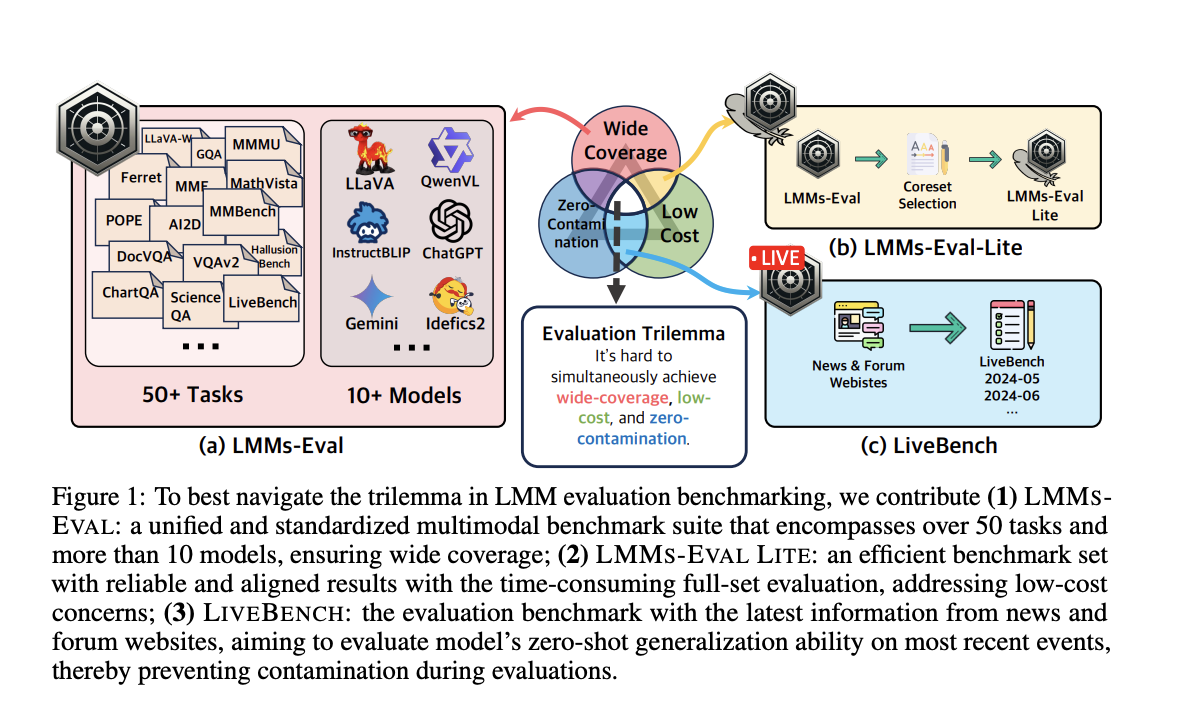

Foundation models, including large language models (LLMs) such as GPT-4, Gemini, and Claude, have shown remarkable capabilities, in some tasks rivaling or surpassing human performance. The LMMS-EVAL suite was developed to meet the need for transparent and reproducible evaluation of language and multimodal models.

LMMS-EVAL evaluates more than ten models, with over 30 sub-variants, across more than 50 tasks, enabling fair and consistent comparisons. Its standardized evaluation pipeline is designed to make results transparent and reproducible.
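The idea behind a standardized pipeline can be sketched in a few lines of Python: every model sits behind one common interface, every task fixes its prompts, reference answers, and scoring rule, and a single loop runs every model on every task. This is a minimal illustration under our own assumptions. The names `Model`, `Task`, `evaluate`, `exact_match`, and `EchoModel` are hypothetical and are not the actual LMMS-EVAL API, and the text-only `generate(prompt)` signature is a simplification (real multimodal tasks would also pass images or video).

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Protocol


class Model(Protocol):
    """Hypothetical common interface that every model adapter implements."""

    name: str

    def generate(self, prompt: str) -> str: ...


@dataclass
class Task:
    """A benchmark task: fixed prompts, reference answers, and one scoring rule."""

    name: str
    prompts: List[str]
    answers: List[str]
    metric: Callable[[str, str], float]


def exact_match(prediction: str, answer: str) -> float:
    """Score 1.0 if prediction and answer match, ignoring case and surrounding spaces."""
    return float(prediction.strip().lower() == answer.strip().lower())


def evaluate(models: List[Model], tasks: List[Task]) -> Dict[str, Dict[str, float]]:
    """Run every model on every task with identical inputs and identical scoring."""
    results: Dict[str, Dict[str, float]] = {}
    for model in models:
        results[model.name] = {}
        for task in tasks:
            scores = [
                task.metric(model.generate(prompt), answer)
                for prompt, answer in zip(task.prompts, task.answers)
            ]
            # Average score over all instances of the task.
            results[model.name][task.name] = sum(scores) / len(scores)
    return results


class EchoModel:
    """Toy stand-in for a real model adapter: always returns the same reply."""

    def __init__(self, name: str, reply: str) -> None:
        self.name = name
        self.reply = reply

    def generate(self, prompt: str) -> str:
        return self.reply


if __name__ == "__main__":
    task = Task("toy_qa", ["Q1", "Q2", "Q3", "Q4"], ["yes", "yes", "yes", "no"], exact_match)
    models = [EchoModel("always_yes", "yes"), EchoModel("always_no", "No")]
    print(evaluate(models, [task]))
```

Because prompts and metrics live in the task rather than in per-model scripts, adding a new model only requires a new adapter, and its scores are directly comparable with the existing ones.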

LMMS-EVAL LITE: Affordable and Comprehensive Evaluation

LMMS-EVAL LITE offers a cheaper yet still thorough evaluation: it keeps a diverse set of tasks but prunes redundant data instances. The goal is dependable, consistent results at lower cost, making it an affordable alternative to full-scale model evaluation.
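The text does not say how LMMS-EVAL LITE decides which instances are unnecessary. One standard technique for this kind of pruning is greedy k-center (coreset) selection: represent each instance as an embedding vector, then repeatedly keep the instance farthest from everything already kept, so the small subset still covers the full dataset. The sketch below assumes such embeddings already exist; `k_center_greedy` is a hypothetical helper, and the 2-D points stand in for real embeddings.

```python
import math
from typing import List, Sequence


def k_center_greedy(points: Sequence[Sequence[float]], k: int, start: int = 0) -> List[int]:
    """Select k indices so that every point lies close to some selected point.

    Greedy k-center: a classic 2-approximation for minimizing the largest
    distance from any point to its nearest selected center.
    """
    if not 0 < k <= len(points):
        raise ValueError("k must be between 1 and len(points)")
    selected = [start]
    # Distance from each point to its nearest selected center so far.
    nearest = [math.dist(p, points[start]) for p in points]
    while len(selected) < k:
        # Add the point that is currently worst covered.
        far = max(range(len(points)), key=lambda i: nearest[i])
        selected.append(far)
        nearest = [min(d, math.dist(p, points[far])) for d, p in zip(nearest, points)]
    return selected


if __name__ == "__main__":
    # Two near-duplicate pairs plus one distinct point.
    points = [(0.0, 0.0), (0.1, 0.0), (10.0, 10.0), (10.1, 10.0), (0.0, 10.0)]
    print(k_center_greedy(points, 3))  # [0, 3, 4]
```

Near-duplicate instances (indices 1 and 2 in the example) are the ones dropped, which is where the savings come from: the pruned subset still contains one representative of each region of the data.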

LIVEBENCH: Benchmarking Zero-Shot Generalization Ability

LIVEBENCH evaluates models’ zero-shot generalization to current events using up-to-date data collected from news and forum websites. This gives a low-cost, broadly applicable way to check whether multimodal models remain accurate and useful in real-world situations.
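A live benchmark measures zero-shot generalization only if its questions are newer than anything a model could have seen during training. The sketch below illustrates that filtering step under two assumptions not stated in the text: each collected item carries a publication date, and each model has a known knowledge cutoff. `NewsItem` and `fresh_items` are hypothetical names, and the URLs are placeholders.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class NewsItem:
    """Hypothetical record collected from a news or forum website."""

    url: str
    published: date
    question: str


def fresh_items(items: List[NewsItem], knowledge_cutoff: date) -> List[NewsItem]:
    """Keep only items published strictly after the model's assumed knowledge cutoff."""
    return [item for item in items if item.published > knowledge_cutoff]


if __name__ == "__main__":
    items = [
        NewsItem("https://example.com/a", date(2023, 11, 2), "What does the chart show?"),
        NewsItem("https://example.com/b", date(2024, 6, 15), "Who is pictured in the photo?"),
    ]
    for item in fresh_items(items, knowledge_cutoff=date(2024, 1, 1)):
        print(item.url)  # only the 2024 item survives
```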

Unlock the Power of AI for Your Business

AI benchmarks are essential for distinguishing between models, exposing their weaknesses, and guiding future progress. LMMS-EVAL, LMMS-EVAL LITE, and LIVEBENCH are designed to close gaps in existing evaluation frameworks and support the continued development of AI.

Evolve Your Company with AI

Discover how AI can redefine the way you work: identify automation opportunities, define KPIs, select an AI solution, and implement it gradually. For advice on AI KPI management, contact us at hello@itinai.com.

Reimagine Sales Processes and Customer Engagement with AI

Explore AI solutions at itinai.com, and follow us on Telegram or Twitter for ongoing insights into leveraging AI.