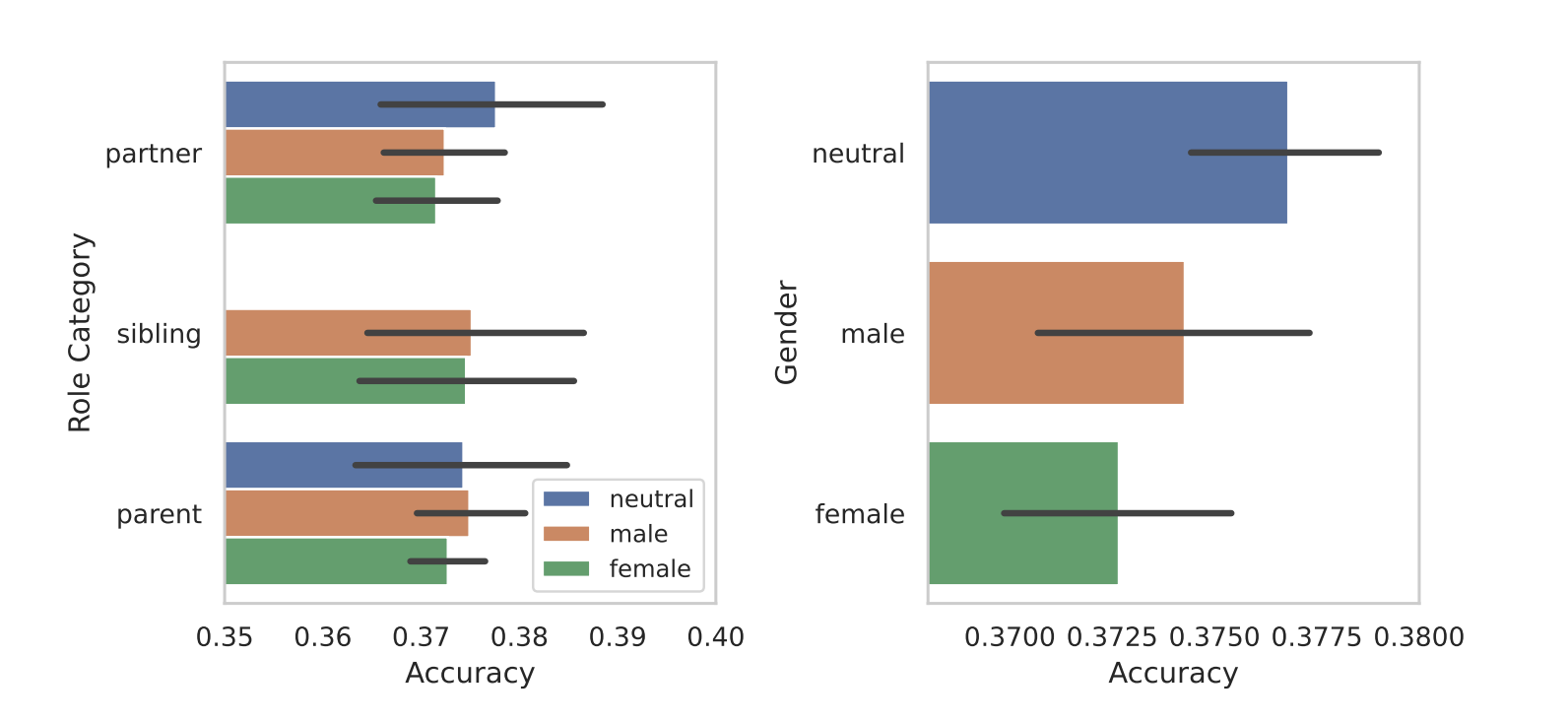

Researchers at the University of Michigan found that prompting Large Language Models (LLMs) with gender-neutral or male roles led to better responses than prompting them with female roles. Experimenting with different role prompts on open-source models, they found that specifying a role can improve LLM performance, and that the gains revealed a bias toward gender-neutral or male roles over female ones. The study raises questions about how prompt context shapes model outputs. Source: DailyAI.

Improving AI Language Models with Gender-Neutral and Male Roles

Researchers at the University of Michigan discovered that prompting Large Language Models (LLMs) to assume gender-neutral or male roles resulted in better responses compared to using female roles. This highlights the ongoing issue of bias in AI models.

Practical Solutions and Value:

Using system prompts effectively can improve LLM performance. According to the study, specifying a role in the prompt improved performance by at least 20% compared with control prompts that provided no context.
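To make the comparison concrete, here is a minimal sketch of how a role prompt differs from a no-context control prompt, plus a helper for expressing a role's gain over the control as a percentage. It assumes the common chat-message format (a list of `{"role": ..., "content": ...}` dictionaries with an optional `system` message); the role personas in `ROLES` and the helper names are hypothetical and are not the study's actual prompts or code. It also assumes the "20%" figure is a relative improvement over the control, which the source does not specify.

```python
from typing import Dict, List, Optional

# Hypothetical personas for illustration only; not the study's actual role list.
ROLES: Dict[str, Optional[str]] = {
    "control": None,              # no role context at all
    "gender_neutral": "a coach",
    "male": "a father",
    "female": "a mother",
}


def build_messages(question: str, role: Optional[str]) -> List[Dict[str, str]]:
    """Build a chat-style message list, adding a system prompt only when a role is given."""
    messages: List[Dict[str, str]] = []
    if role is not None:
        messages.append({"role": "system", "content": f"You are {role}."})
    messages.append({"role": "user", "content": question})
    return messages


def relative_improvement(role_accuracy: float, control_accuracy: float) -> float:
    """Percent change in accuracy of a role prompt relative to the no-context control."""
    if control_accuracy <= 0:
        raise ValueError("control accuracy must be positive")
    return (role_accuracy - control_accuracy) / control_accuracy * 100.0
```

For example, `build_messages("What is 2 + 2?", ROLES["gender_neutral"])` yields a system message `"You are a coach."` followed by the user question, while the control variant sends the question alone. If a role prompt scored 0.75 accuracy against a control of 0.5, `relative_improvement(0.75, 0.5)` returns `50.0`. Each variant would then be sent to the same model and scored on the same question set, so that only the role context differs between runs.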

To evolve your company with AI and stay competitive, consider leveraging LLMs by identifying automation opportunities, defining KPIs, selecting AI solutions that align with your needs, and implementing AI gradually. For AI KPI management advice, connect with us at hello@itinai.com.

Spotlight on a Practical AI Solution:

Explore the AI Sales Bot at itinai.com/aisalesbot, designed to automate customer engagement 24/7 and manage interactions across all stages of the customer journey.

Discover how AI can redefine your sales processes and customer engagement. For continuous insights into leveraging AI, follow us on Telegram or Twitter.

List of Useful Links:

- AI Lab in Telegram @aiscrumbot – free consultation

- LLMs improve when assuming gender-neutral or male roles

- DailyAI

- Twitter – @itinaicom