The article delves into the transformer’s decoder architecture, emphasizing the loop-like, iterative nature that contrasts with the linear processing of the encoder. It discusses the masked multi-head attention and encoder-decoder attention mechanisms, demonstrating their implementation in Python and NumPy through a translation example. The decoder’s role in Large Language Models (LLMs) is highlighted.

“`html

Exploring the Transformer’s Decoder Architecture: Masked Multi-Head Attention, Encoder-Decoder Attention, and Practical Implementation

Introduction

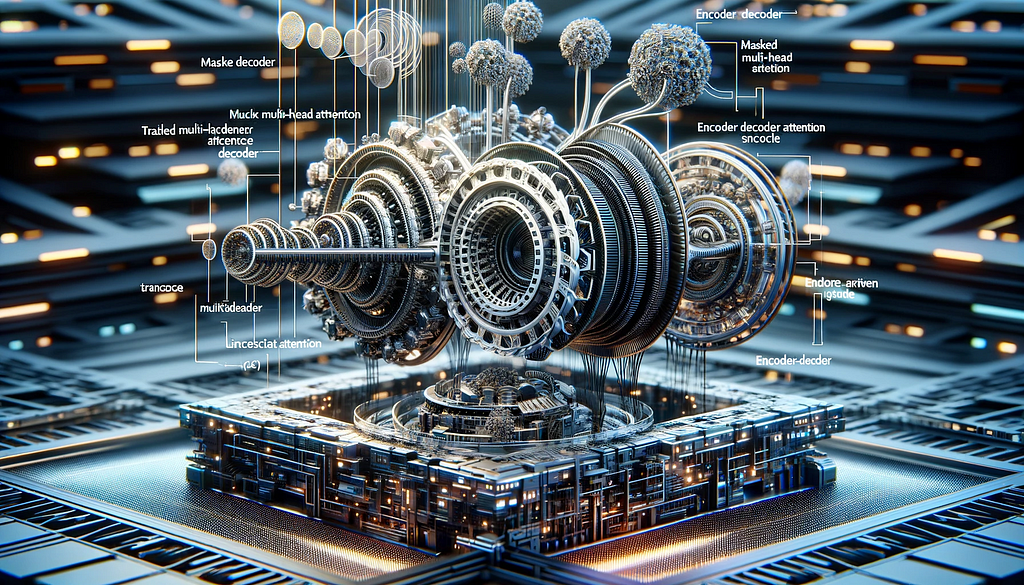

In this article, we explore the decoder component of the transformer architecture, focusing on its differences and similarities with the encoder. The decoder’s unique feature is its loop-like, iterative nature, which contrasts with the encoder’s linear processing. Central to the decoder are two modified forms of the attention mechanism: masked multi-head attention and encoder-decoder multi-head attention.
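As a rough illustration of the second mechanism, the sketch below shows single-head encoder-decoder attention in NumPy: queries come from the decoder, while keys and values come from the encoder output. The function names, the weight matrices `W_q`, `W_k`, `W_v`, and the random inputs are assumptions made for this example and are not taken from the original article.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(decoder_states, encoder_output, W_q, W_k, W_v):
    """Single-head encoder-decoder attention (illustrative sketch)."""
    Q = decoder_states @ W_q   # queries come from the decoder
    K = encoder_output @ W_k   # keys come from the encoder
    V = encoder_output @ W_v   # values come from the encoder
    scores = Q @ K.T / np.sqrt(K.shape[-1])
    weights = softmax(scores, axis=-1)  # each target position attends over all source positions
    return weights @ V, weights

# Hypothetical toy sizes: 5 source tokens, 3 target tokens generated so far.
rng = np.random.default_rng(0)
d_model = 4
encoder_output = rng.normal(size=(5, d_model))
decoder_states = rng.normal(size=(3, d_model))
W_q, W_k, W_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))

context, weights = cross_attention(decoder_states, encoder_output, W_q, W_k, W_v)
print(context.shape)  # (3, 4): one context vector per target position
print(weights.shape)  # (3, 5): one weight per (target, source) pair
```

No mask is applied here because every target position is allowed to look at the whole source sentence.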

Practical Implementation

We will also demonstrate how these concepts are implemented using Python and NumPy. We have created a simple example of translating a sentence from English to Portuguese. This practical approach will help illustrate the inner workings of the decoder in a transformer model and provide a clearer understanding of its role in Large Language Models (LLMs).

One Big While Loop

The decoder works iteratively from a

Follow the Numbers

We take the same sentence used in our previous article and feed it to the decoder to translate it into Portuguese. The encoder constructs the vectors once, and the decoder then processes them step by step to produce the translation, all implemented in Python and NumPy.
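The flow described above can be sketched as a greedy decoding loop. This is a minimal sketch under stated assumptions: `toy_decoder_step` is a hypothetical, scripted stand-in for a real decoder stack, and the tiny vocabulary and target tokens are invented for illustration rather than taken from the article's example.

```python
import numpy as np

# Hypothetical toy vocabulary; not the sentence used in the original article.
VOCAB = ["<sos>", "<eos>", "olá", "mundo"]

def toy_decoder_step(encoder_output, generated_ids):
    """Stand-in for a real decoder: returns logits over VOCAB for the next token.

    A real decoder would apply masked self-attention over generated_ids and
    encoder-decoder attention over encoder_output. Here the output is scripted.
    """
    script = [2, 3, 1]  # "olá", "mundo", "<eos>"
    step = len(generated_ids) - 1
    logits = np.zeros(len(VOCAB))
    logits[script[min(step, len(script) - 1)]] = 1.0
    return logits

def greedy_decode(encoder_output, max_len=10):
    # Start from the start-of-sequence token and extend one token per iteration.
    generated = [VOCAB.index("<sos>")]
    while len(generated) < max_len:
        logits = toy_decoder_step(encoder_output, generated)
        next_id = int(np.argmax(logits))  # greedy choice: most likely token
        generated.append(next_id)
        if VOCAB[next_id] == "<eos>":     # stop once the end token is produced
            break
    return [VOCAB[i] for i in generated]

# Placeholder encoder output: computed once and reused at every step.
encoder_output = np.zeros((2, 4))
print(greedy_decode(encoder_output))
```

The encoder output stays fixed for the whole loop, while the list of generated tokens grows by one on each pass until the end token appears or `max_len` is reached.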

Zooming in on the Masked Attention Layer

We take a closer look at what happens in the masked attention layer when the score matrix is larger than a single number, that is, once more than one token has been generated. We walk through the masked attention layer step by step using Python and NumPy.
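A minimal single-head sketch of masked self-attention follows, assuming a standard causal mask in which position i may only attend to positions 0 through i. The weight matrices and the random input are hypothetical placeholders, not values from the original walkthrough.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability; exp(-inf) becomes 0.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def masked_self_attention(X, W_q, W_k, W_v):
    """Single-head self-attention with a causal mask (illustrative sketch)."""
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    scores = Q @ K.T / np.sqrt(K.shape[-1])
    seq_len = X.shape[0]
    # True above the diagonal marks "future" positions that must be hidden.
    future = np.triu(np.ones((seq_len, seq_len), dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)
    weights = softmax(scores, axis=-1)
    return weights @ V, weights

# Hypothetical toy input: 3 target tokens with embedding size 4.
rng = np.random.default_rng(1)
d_model = 4
X = rng.normal(size=(3, d_model))
W_q, W_k, W_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))

output, weights = masked_self_attention(X, W_q, W_k, W_v)
print(np.round(weights, 3))  # lower-triangular: zeros above the diagonal
```

With a single token the score matrix is 1x1 and the mask has no effect; from the second token onward, the upper triangle is set to negative infinity so that the softmax assigns zero weight to future positions.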

Conclusions

Our detailed examination of the transformer architecture’s decoder component shows its intricacies and how it integrates components from the encoder while generating new information. The practical implementation using Python and NumPy demonstrates how the decoder processes data when performing machine translation.

References

References to the original paper and author are provided for further reading and exploration of the topic.

“`

List of Useful Links:

- LLMs and Transformers from Scratch: the Decoder | by Luís Roque

- Towards Data Science – Medium