LLM+FOON Framework: Enhancing Robotic Cooking Task Planning

Introduction

Interest in robots for home environments, particularly for cooking, has grown considerably. These robots must perform tasks that combine visual interpretation, manipulation, and decision-making. Cooking is especially challenging because of the variety of utensils, differing visual perspectives, and the often incomplete nature of instructional materials such as videos. To handle these challenges, a robot needs a reliable method for logical planning, flexible interpretation of instructions, and adaptation to diverse environments.

Challenges in Robotic Cooking Task Planning

Lack of Standardization

One of the main issues in translating cooking videos into actionable robotic tasks is the inconsistency found in online content. Videos may omit steps, include irrelevant introductions, or present arrangements that do not match the robot’s operational setup. Consequently, robots face the challenge of interpreting visual and textual data, inferring missing steps, and converting this information into a sequence of physical actions.

Limitations of Current Tools

Current robotic planning tools often rely either on logic-based planners, such as those built on the Planning Domain Definition Language (PDDL), or on data-driven approaches that use Large Language Models (LLMs). While LLMs can reason over varied inputs, they struggle to verify that the plans they generate are feasible for a robot. Existing prompt-based feedback mechanisms have proven limited at confirming the logical correctness of actions, especially in complex, multi-step cooking tasks.

The LLM+FOON Framework

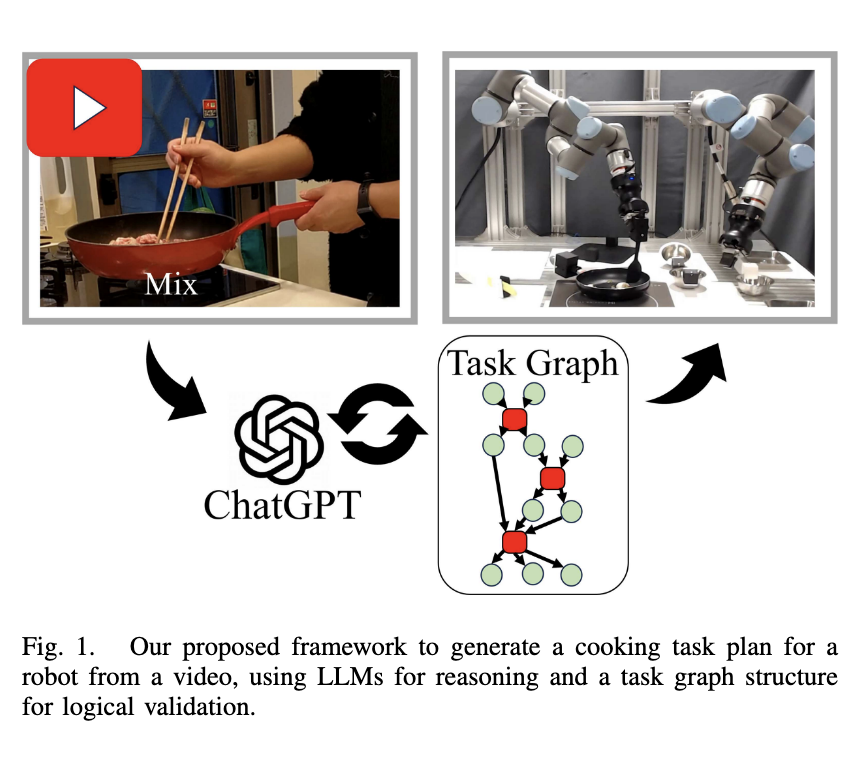

Researchers from the University of Osaka and the National Institute of Advanced Industrial Science and Technology (AIST), Japan, have introduced a novel framework that integrates an LLM with a Functional Object-Oriented Network (FOON) to enhance cooking task planning from video instructions.

How It Works

This hybrid system employs an LLM to analyze cooking videos and generate task sequences. These sequences are then transformed into FOON-based graphs, where each action is validated against the robot’s operational environment. If any action is found to be infeasible, feedback is provided for the LLM to revise the plan, ensuring that only logically sound steps are included.
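The validate-and-revise loop described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: `FunctionalUnit`, `validate`, `plan_with_feedback`, and `stub_llm` are hypothetical names, the LLM is replaced by a hard-coded stand-in, and a FOON functional unit is simplified to a motion with required input states and resulting output states.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FunctionalUnit:
    """Simplified FOON-style unit: input states -> motion -> output states."""
    motion: str
    inputs: frozenset   # (object, state) pairs required before the motion
    outputs: frozenset  # (object, state) pairs that hold after the motion


def unit(motion, inputs, outputs):
    return FunctionalUnit(motion, frozenset(inputs), frozenset(outputs))


def validate(plan, initial_states):
    """Return (True, None) if each unit's inputs hold when it runs,
    otherwise (False, message) describing the first infeasible step."""
    states = set(initial_states)
    for step, fu in enumerate(plan):
        missing = fu.inputs - states
        if missing:
            return False, f"step {step} ({fu.motion}) is missing {sorted(missing)}"
        # An object whose state changes drops its previous state.
        changed = {obj for obj, _ in fu.outputs}
        states = {(o, s) for o, s in states if o not in changed} | fu.outputs
    return True, None


def plan_with_feedback(propose, initial_states, max_rounds=3):
    """Ask the planner for a plan, validate it, and feed errors back."""
    feedback = None
    for _ in range(max_rounds):
        plan = propose(feedback)
        ok, feedback = validate(plan, initial_states)
        if ok:
            return plan
    return None  # no feasible plan within the round limit


SLICE = unit("slice", [("onion", "whole")], [("onion", "sliced")])
HEAT = unit("heat", [("pan", "cold")], [("pan", "heated")])
FRY = unit("fry", [("onion", "sliced"), ("pan", "heated")], [("onion", "fried")])


def stub_llm(feedback):
    # Hypothetical stand-in for the LLM: it omits heating the pan
    # until the validator reports a missing state.
    if feedback is None:
        return [SLICE, FRY]
    return [SLICE, HEAT, FRY]


INITIAL = {("onion", "whole"), ("pan", "cold")}
result = plan_with_feedback(stub_llm, INITIAL)
print([fu.motion for fu in result])
```

In this toy run the first proposal is rejected because the pan is never heated, and the second proposal passes validation. A real system would replace `stub_llm` with an actual model call and pass the feedback message in the prompt.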

Processing Layers

- Cooking videos are segmented based on their subtitles, which are extracted using Optical Character Recognition (OCR).

- Key video frames are organized into a 3×3 grid as input images.

- The LLM is provided with structured task descriptions, constraints, and environment layouts to infer target object states.

- FOON cross-verifies these states, ensuring logical consistency.
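As a rough illustration of the 3×3 grid step, the sketch below picks nine frame indices from one video segment and arranges them row by row. The source does not say how key frames are chosen, so the even spacing here is an assumption, and `pick_grid_frames` is a hypothetical helper name.

```python
def pick_grid_frames(start, end, rows=3, cols=3):
    """Pick rows*cols evenly spaced frame indices in [start, end]
    and lay them out row by row (even spacing is an assumption)."""
    if end < start:
        raise ValueError("end must not be before start")
    n = rows * cols
    step = (end - start) / (n - 1)
    indices = [start + round(i * step) for i in range(n)]
    return [indices[r * cols:(r + 1) * cols] for r in range(rows)]


# Frame indices for a segment spanning frames 0..80.
grid = pick_grid_frames(0, 80)
for row in grid:
    print(row)
```

The actual frames at these indices would then be tiled into a single image and passed to the LLM together with the task description.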

Results and Case Study

The framework was tested using five complete cooking recipes derived from ten videos. The results were promising, with the FOON-enhanced method successfully generating feasible task plans for 80% (4 out of 5) of the recipes, compared to only 20% (1 out of 5) for the baseline approach that utilized only the LLM. Additionally, the system achieved an 86% success rate in accurately predicting object states.

In a real-world application, a dual-arm UR3e robot demonstrated the method by successfully completing a gyudon (beef bowl) recipe, even inferring a missing action not shown in the video. This highlights the system’s ability to adapt to incomplete instructions while maintaining task accuracy.

Conclusion

This research addresses two critical issues in LLM-based robotic task planning: hallucination and logical inconsistency. By using FOON as a validation mechanism, the proposed LLM+FOON framework generates actionable plans from unstructured cooking videos. The approach pairs LLM reasoning with graph-based logical verification, enabling robots to execute complex tasks while adapting to their environment.
