Enhancing Recommendations with LLM-KT

Collaborative Filtering (CF) is a widely used method in recommendation systems that matches users with products based on patterns in past interactions. However, it often struggles to capture complex relationships and to adapt to changing user behavior. Recent research has shown that Large Language Models (LLMs) can improve recommendations by drawing on their reasoning capabilities.

Introducing LLM-KT

Researchers from multiple universities and labs have developed LLM-KT, a flexible framework that enhances CF models by integrating LLM-generated features into the model’s internal layers. Because the approach does not require changes to a model’s architecture, it is compatible with a variety of CF models.

Key Benefits

- Reports an increase of up to 21% in NDCG@10 in experiments on the MovieLens and Amazon datasets.

- Gives CF models a direct signal about user preferences in the form of profiles derived from user-item interactions.

- Uses a tailored prompt for each user to generate a preference summary, which is then converted into an embedding.
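The profile step described in the list above can be sketched as follows. This is a minimal illustration, not the framework's actual code: the prompt wording, the function names `build_profile_prompt` and `toy_text_embedding`, and the hashed bag-of-words embedding are all assumptions made for the example. A real pipeline would send the prompt to an LLM and pass the reply through a proper text-embedding model.

```python
import hashlib
import math


def build_profile_prompt(user_id, interactions, max_items=20):
    """Format a user's rated items into a prompt asking for a preference summary.

    `interactions` is a list of (title, rating) pairs. Hypothetical prompt format.
    """
    lines = [f"- {title} (rating: {rating}/5)" for title, rating in interactions[:max_items]]
    return (
        f"Below are items that user {user_id} interacted with.\n"
        + "\n".join(lines)
        + "\nSummarize this user's preferences in a short paragraph."
    )


def toy_text_embedding(text, dim=8):
    """Stand-in for a real text-embedding model: hashed bag of words, L2-normalized."""
    vec = [0.0] * dim
    for token in text.lower().split():
        digest = hashlib.md5(token.encode("utf-8")).digest()
        vec[digest[0] % dim] += 1.0  # bucket each token by the first hash byte
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm > 0 else vec


history = [("Alien", 5), ("Blade Runner", 4), ("Notting Hill", 2)]
prompt = build_profile_prompt("u42", history)

# In a real pipeline the prompt would go to an LLM; here the reply is hard-coded.
profile_text = "Enjoys dark science fiction; little interest in romantic comedies."
profile_embedding = toy_text_embedding(profile_text)
```

The resulting `profile_embedding` is the vector that would later serve as the alignment target for the CF model.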

Efficient Knowledge Transfer

LLM-KT’s knowledge transfer method targets a specific internal layer of the CF model, so that the representation at that layer reflects the user preferences captured in the LLM-generated profile. This is achieved through a combined training process that aligns the profile embeddings with the model’s internal representations while the model continues to learn its recommendation task.
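One way to picture such a combined objective is a recommendation loss plus a weighted alignment term. The sketch below is an assumption-laden illustration rather than the published formulation: the mean-squared-error alignment, the linear projection from the hidden layer to the profile space, the weight `alpha`, and all function names are hypothetical choices for this example.

```python
def mse(a, b):
    """Mean squared error between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def linear(x, weights, bias):
    """Dense layer: `weights` holds one row per output unit."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def combined_loss(rec_loss, hidden, profile_emb, proj_w, proj_b, alpha=0.5):
    """Return (total loss, alignment loss).

    `hidden` is the CF model's representation at the chosen internal layer.
    It is projected into the profile-embedding space and compared with the
    LLM-derived profile embedding. `alpha` (assumed) weights the alignment term.
    """
    reconstructed = linear(hidden, proj_w, proj_b)
    align_loss = mse(reconstructed, profile_emb)
    return rec_loss + alpha * align_loss, align_loss


hidden = [1.0, 2.0]                                   # internal-layer activation for one user
proj_w = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]         # 2-d hidden -> 3-d profile space
proj_b = [0.0, 0.0, 0.0]
profile_emb = [1.0, 2.0, 2.0]                         # target profile embedding

total, align = combined_loss(0.4, hidden, profile_emb, proj_w, proj_b, alpha=0.5)
```

In a real training loop both terms would be differentiable tensors and the gradient of the total would update the CF model and the projection together; the plain-Python arithmetic here only shows how the two terms combine.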

Flexible Framework Features

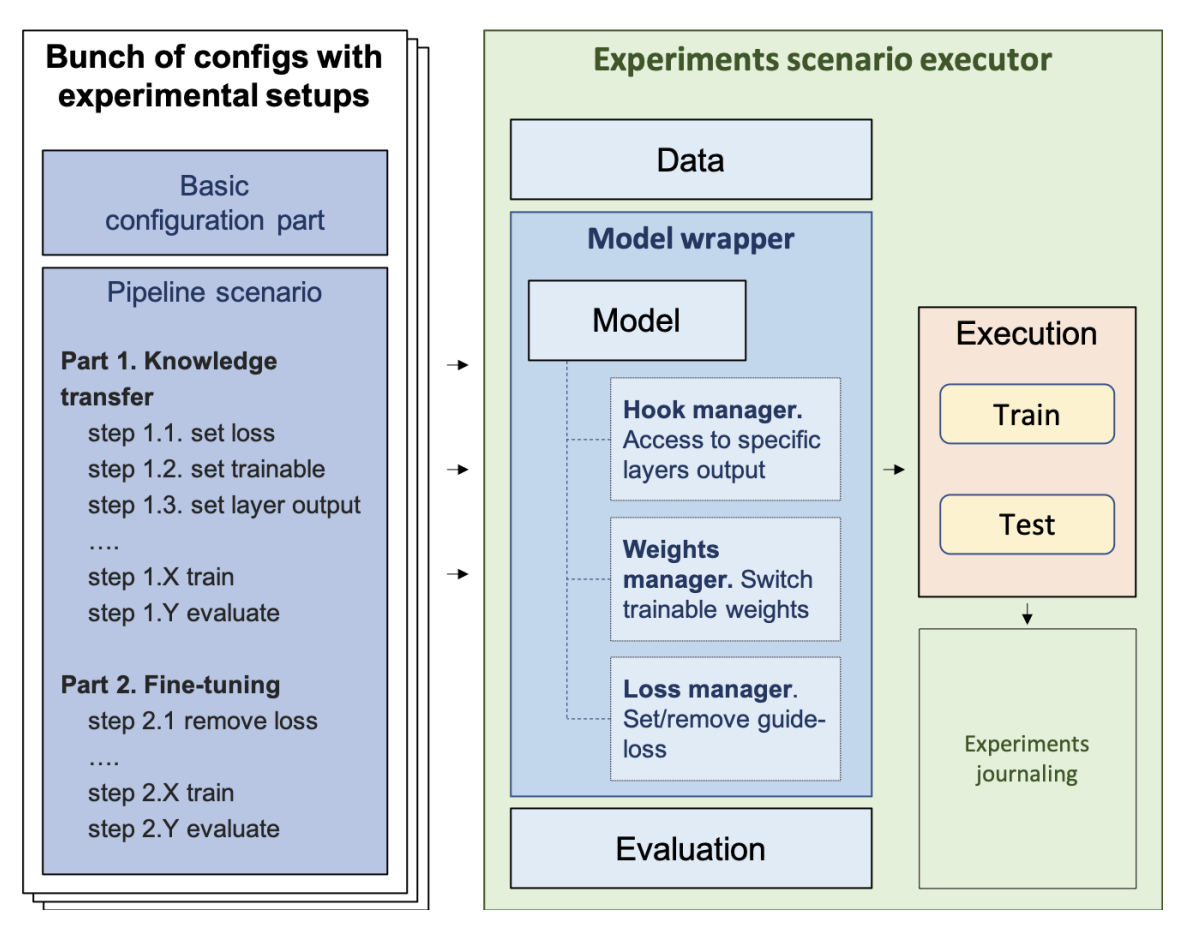

Built on the RecBole platform, LLM-KT supports easy configuration for various experimental setups. Key features include:

- Integration of LLM-generated profiles from different sources.

- An adaptable configuration system for streamlined experiments.

- Tools for batch execution and result analysis.
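To illustrate what configuration-driven batch execution can look like, the sketch below expands a small parameter grid into individual run configurations. Every key and value here (`dataset`, `model`, `transfer_layer`, `profile_source`, the placeholder model names, and the file name) is hypothetical and chosen for the example; none of them is taken from the LLM-KT or RecBole configuration schema.

```python
from itertools import product


def expand_grid(base, grid):
    """Yield one config dict per combination of the values in `grid`."""
    keys = sorted(grid)  # fixed key order keeps the run order deterministic
    for values in product(*(grid[k] for k in keys)):
        run = dict(base)          # copy shared settings
        run.update(zip(keys, values))
        yield run


# Hypothetical settings shared by every run.
base = {"epochs": 10, "profile_source": "llm_profiles.json"}

# Hypothetical settings to sweep over.
grid = {
    "dataset": ["movielens", "amazon"],
    "model": ["model_a", "model_b"],
    "transfer_layer": [1, 2],
}

runs = list(expand_grid(base, grid))
for run in runs:
    print(run)
```

Each dictionary in `runs` would then be handed to whatever training entry point the experiment uses, and the collected metrics compared across runs.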

Testing and Results

The framework has been tested on the Amazon and MovieLens datasets with both traditional and context-aware CF models. Results show consistent improvements in ranking metrics such as NDCG@10, making LLM-KT competitive with leading methods in the field.
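For readers unfamiliar with the metric, NDCG@10 scores the top ten recommended items, giving more credit to relevant items that appear higher in the list. The following is a minimal sketch of the standard binary-relevance form; the function names and the toy item IDs are illustrative, and the evaluation code used in the experiments may differ in details such as graded relevance or tie handling.

```python
import math


def dcg_at_k(relevances, k):
    """Discounted cumulative gain over the first k positions."""
    return sum(rel / math.log2(rank + 2) for rank, rel in enumerate(relevances[:k]))


def ndcg_at_k(ranked_items, relevant_items, k=10):
    """Binary-relevance NDCG@k: DCG of the ranking divided by the best possible DCG."""
    gains = [1.0 if item in relevant_items else 0.0 for item in ranked_items]
    ideal = [1.0] * min(len(relevant_items), k)
    ideal_dcg = dcg_at_k(ideal, k)
    return dcg_at_k(gains, k) / ideal_dcg if ideal_dcg > 0 else 0.0


ranked = ["a", "b", "c"]      # model's ranking, best first
relevant = {"a", "c"}         # items the user actually interacted with
score = ndcg_at_k(ranked, relevant, k=10)
```

A reported gain such as 21% is a relative change in this score between the baseline CF model and the same model trained with knowledge transfer.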

Why Choose LLM-KT?

LLM-KT enhances collaborative filtering models by transferring LLM-generated features into their internal representations. Because the transfer happens without altering model structures, the framework can be applied to a variety of CF models.
