Practical Solutions for Assessing Privacy Norms Encoded in Large Language Models (LLMs)

Challenges in Evaluating LLMs

Large language models (LLMs) often encode societal norms from training data, raising concerns about privacy and ethical behavior. Ensuring these models adhere to societal norms across different contexts is crucial to prevent ethical issues.

Traditional Evaluation Limitations

Traditional methods focus on technical capabilities and neglect the encoding of societal norms. They fail to account for prompt sensitivity and variations in model hyperparameters, resulting in incomplete evaluations.

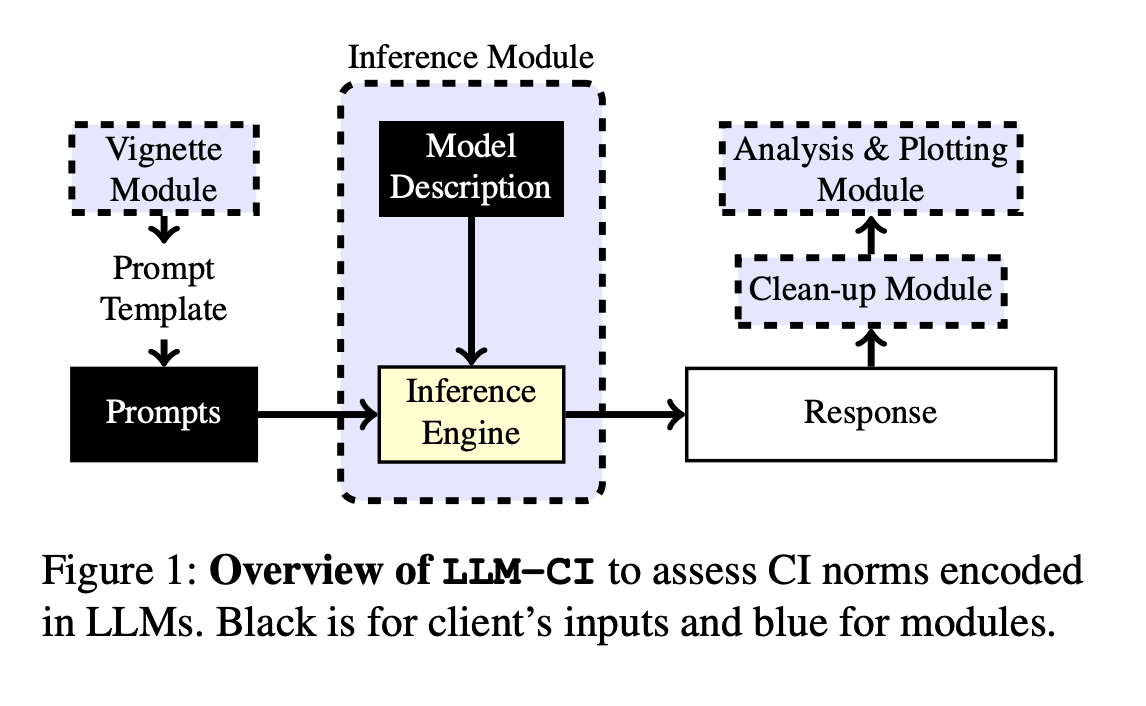

Introduction of LLM-CI Framework

A team of researchers introduces LLM-CI, a novel framework grounded in Contextual Integrity theory, to assess how LLMs encode privacy norms across different contexts. It employs a multi-prompt assessment strategy to provide a more accurate evaluation of norm adherence across models and datasets.

Testing and Results

LLM-CI was tested on datasets simulating real-world privacy scenarios, demonstrating a marked improvement in evaluating how LLMs encode privacy norms. Models optimized using alignment techniques showed up to 92% contextual accuracy in adhering to privacy norms.

Advancements and Value

LLM-CI offers a comprehensive and robust approach for assessing how LLMs encode privacy norms, significantly advancing the understanding of how well LLMs align with societal norms, particularly in sensitive areas such as privacy.

AI Solutions for Business Transformation

Unlocking AI’s Potential

Identify Automation Opportunities, Define KPIs, Select an AI Solution, and Implement Gradually to leverage AI for business transformation.

Connect with Us

For AI KPI management advice, connect with us at hello@itinai.com. For continuous insights into leveraging AI, stay tuned on our Telegram t.me/itinainews or Twitter @itinaicom.

Discover AI Solutions

Discover how AI can redefine your sales processes and customer engagement. Explore solutions at itinai.com.