The Value of LLaVA-Critic in AI Evaluation

Practical Solutions and Benefits:

The LLaVA-Critic is a specialized Large Multimodal Model (LMM) designed for evaluating the performance of other models across various tasks.

It offers a reliable and open-source alternative to proprietary models, reducing the need for costly human feedback collection.

LLaVA-Critic excels in two key areas: as a generalized evaluator aligning with human preferences and as a superior reward model for enhancing visual chat capabilities.

By fine-tuning a pre-trained LMM, LLaVA-Critic provides scalable solutions for generating effective reward signals and improving model performance.

Key Features:

LLaVA-Critic is trained to predict quantitative scores based on specified criteria and provides detailed justifications for its judgments.

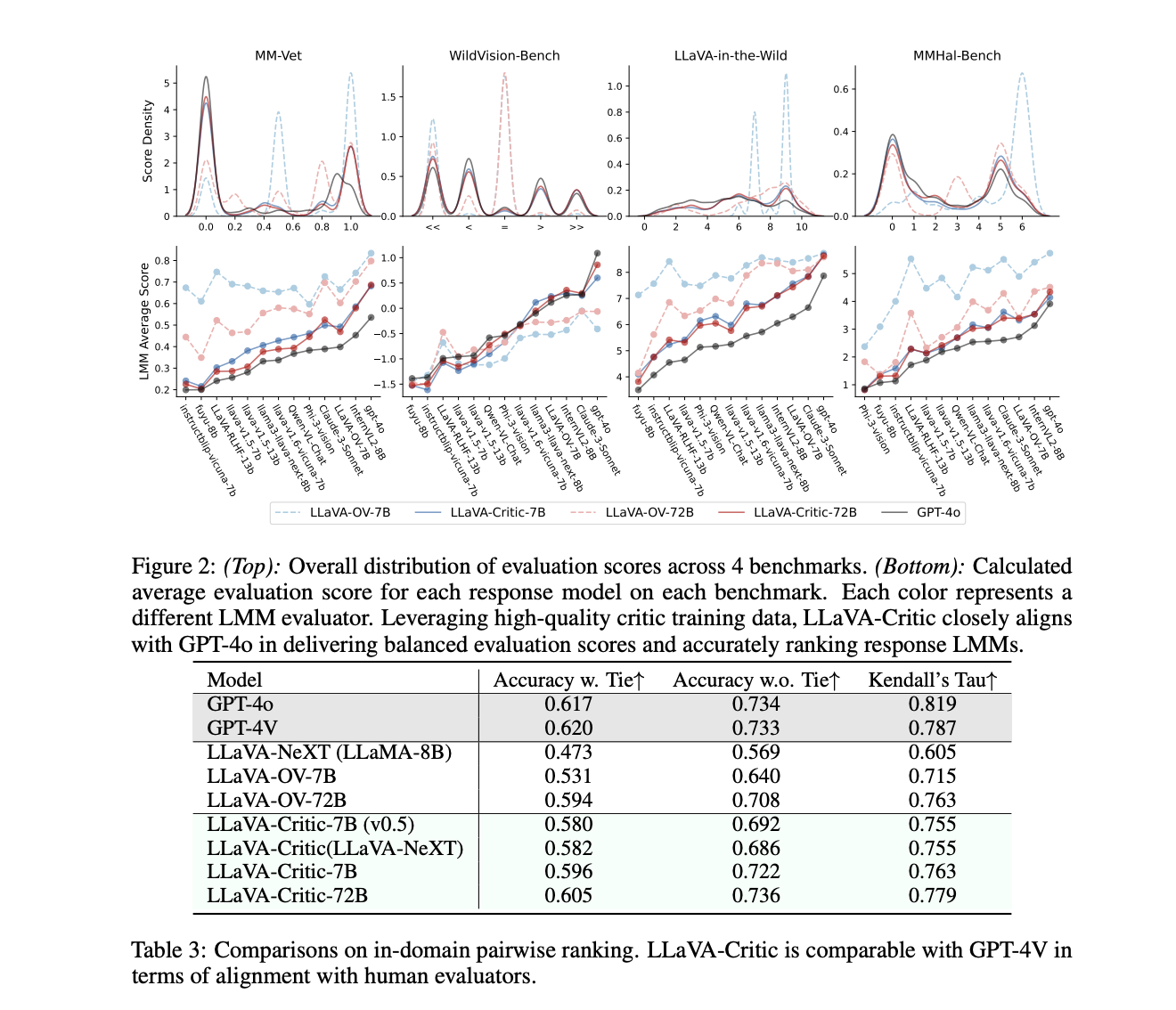

It outperforms baseline models in pointwise scoring and pairwise ranking, showcasing high accuracy and correlation with commercial models.

The model is developed by leveraging diverse instruction-following data and offers a scalable approach for AI evaluation tasks.

Conclusion:

LLaVA-Critic represents a significant advancement in AI evaluation, offering practical solutions for assessing multimodal model performance.

Researchers have demonstrated its effectiveness in various scenarios, highlighting its potential for future advancements in AI alignment feedback.

For more information on the research project, visit the Paper and Project linked above.