Practical AI Solutions with Llama-Deploy

Introduction

Llama-deploy simplifies the deployment of AI-driven agentic workflows by letting developers run and scale them as microservices. It bridges the gap between development and production, offering a user-friendly and efficient way to move workflows into a scalable deployment.

Architecture

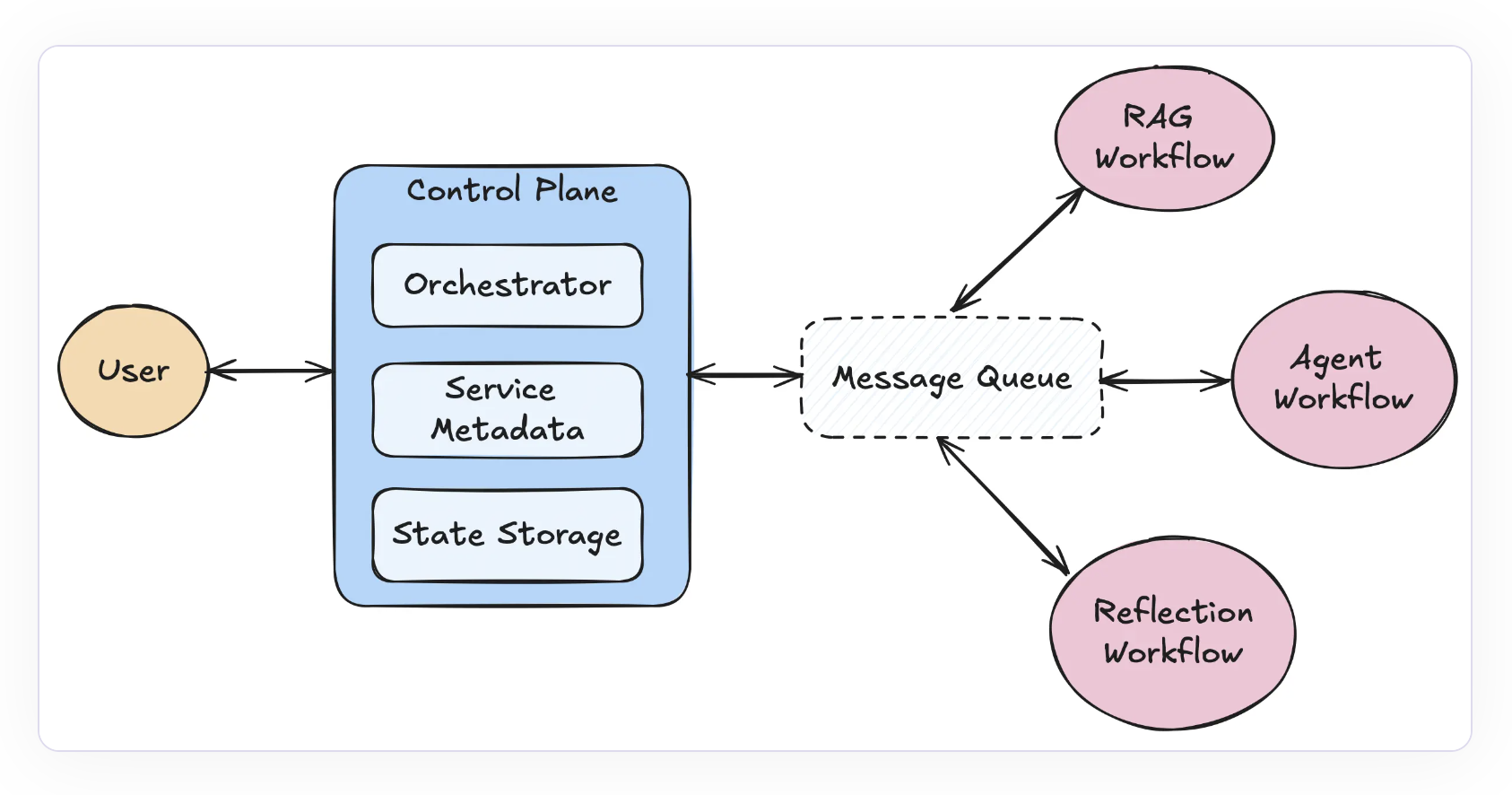

Llama-deploy offers a fault-tolerant, scalable, and easily deployable architecture for multi-agent systems. Its key components are message queues, the control plane, the orchestrator, and workflow services.
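To make the relationship between these components concrete, here is a minimal sketch in plain Python. It does not use the llama-deploy API. The names `ToyControlPlane`, `Message`, and `echo_workflow` are hypothetical, and the routing logic is an assumption about how a queue, a service registry, and an orchestrator loop might fit together, not a description of the library's internals.

```python
import asyncio
from dataclasses import dataclass


@dataclass
class Message:
    service: str  # name of the target workflow service
    payload: str  # task input


class ToyControlPlane:
    """Hypothetical stand-in: keeps a service registry and routes messages."""

    def __init__(self) -> None:
        self.queue: asyncio.Queue = asyncio.Queue()  # plays the message queue
        self.services = {}  # registry: service name -> async handler
        self.results = []

    def register(self, name, handler) -> None:
        self.services[name] = handler

    async def submit(self, service: str, payload: str) -> None:
        await self.queue.put(Message(service, payload))

    async def orchestrate(self) -> None:
        """Orchestrator loop: pull each queued message and dispatch it."""
        while not self.queue.empty():
            msg = await self.queue.get()
            handler = self.services[msg.service]
            self.results.append(await handler(msg.payload))


async def echo_workflow(payload: str) -> str:
    """A trivial 'workflow service' that upper-cases its input."""
    return payload.upper()


async def run_demo() -> list:
    plane = ToyControlPlane()
    plane.register("echo", echo_workflow)
    await plane.submit("echo", "hello")
    await plane.submit("echo", "world")
    await plane.orchestrate()
    return plane.results


demo_results = asyncio.run(run_demo())
```

In this toy version everything runs in one process. The point of the microservice design is that each piece could instead run as a separate service and communicate only through the queue.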

Primary Features

Key features of llama-deploy include easy deployment with minimal code changes, scalability through a microservice architecture, fault tolerance, the flexibility to modify the system, and optimization for high-concurrency, asynchronous operations.
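Two of these features, fault tolerance and asynchronous high-concurrency operation, can be illustrated with a short standard-library sketch. This is not llama-deploy code. `call_with_retry` and `make_flaky_service` are hypothetical helpers, and the retry-on-`RuntimeError` policy is an assumption chosen for the example.

```python
import asyncio


async def call_with_retry(task, retries: int = 3, delay: float = 0.0):
    """Retry an async callable up to `retries` times before giving up."""
    last_error = None
    for attempt in range(1, retries + 1):
        try:
            return await task(attempt)
        except RuntimeError as err:
            last_error = err
            await asyncio.sleep(delay)  # back off before the next attempt
    raise last_error


def make_flaky_service(name: str, fail_first: int):
    """Build a hypothetical service that fails its first `fail_first` attempts."""

    async def service(attempt: int) -> str:
        if attempt <= fail_first:
            raise RuntimeError(f"{name} unavailable")
        return f"{name} ok on attempt {attempt}"

    return service


async def run_all() -> list:
    services = [make_flaky_service("a", 0), make_flaky_service("b", 2)]
    # gather runs the calls concurrently and preserves input order
    return await asyncio.gather(*(call_with_retry(s) for s in services))


outcomes = asyncio.run(run_all())
```

Service `b` fails twice and succeeds on its third attempt, while service `a` completes immediately and is not blocked by the retries.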

Getting Started

Getting started with llama-deploy is simple: it installs with pip and is compatible with RabbitMQ or Kubernetes (k8s). Its engaged community and open-source nature position it as a standard tool for deploying agentic workflows.

Conclusion

Llama-deploy streamlines the deployment of agent workflows and lets them scale smoothly in production environments. It gives agent workflows a consistent experience and a seamless transition from development to production.
