LiteLLM: Managing API Calls to Large Language Models

Managing and optimizing API calls across multiple Large Language Model (LLM) providers is complex, because each provider has its own request and response format, rate limits, and pricing. The usual approach is to integrate each API by hand, which is inflexible and scales poorly as more providers are added. This makes operations hard to streamline, particularly in enterprise environments.

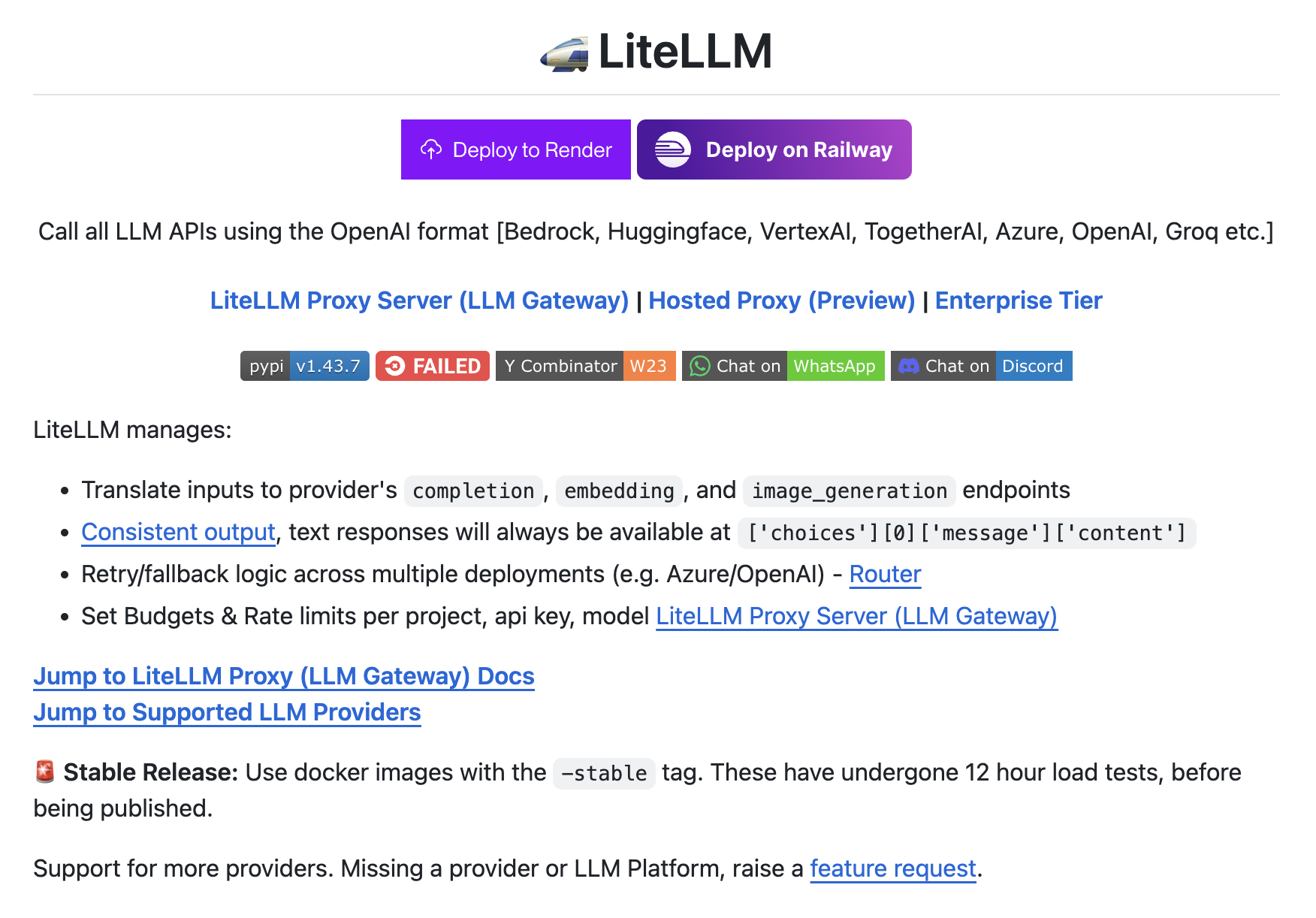

Introducing LiteLLM

The LiteLLM Proxy Server provides a unified interface for calling LLM APIs in a single, consistent format (the OpenAI format), regardless of the provider. It supports a wide range of providers, including OpenAI, Hugging Face, Azure, and Google Vertex AI, so developers can switch providers without changing the core logic of their applications. LiteLLM also offers retry and fallback mechanisms, budget and rate-limit management, and logging and observability through integrations with tools such as Lunary and Helicone.
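The retry-and-fallback behavior described above can be illustrated with a small, library-free sketch. It is not LiteLLM's implementation or API: `call_with_fallback`, `AllProvidersFailed`, and the two stand-in providers are hypothetical names, and each "provider" is just a Python function that takes a prompt and returns text. The pattern is the same, though: every provider is called through one signature, a failing provider is retried a fixed number of times, and the next provider in the list is tried only after those retries are exhausted.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical provider signature: takes a prompt, returns the completion text or raises.
Provider = Callable[[str], str]


class AllProvidersFailed(Exception):
    """Raised when every provider in the fallback chain has failed."""


def call_with_fallback(
    prompt: str,
    providers: Sequence[Tuple[str, Provider]],
    retries: int = 2,
) -> Tuple[str, str]:
    """Try each (name, provider) pair in order.

    Each provider gets 1 + `retries` attempts before the next one is tried.
    Returns (provider_name, response_text) from the first successful call.
    """
    errors: List[str] = []
    for name, provider in providers:
        for attempt in range(1, retries + 2):
            try:
                return name, provider(prompt)
            except Exception as exc:  # a real client would catch narrower error types
                errors.append(f"{name} attempt {attempt}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


# Stand-in providers for the example (no network calls are made).
def flaky_primary(prompt: str) -> str:
    raise TimeoutError("rate limited")


def backup(prompt: str) -> str:
    return f"echo: {prompt}"


name, text = call_with_fallback("hello", [("primary", flaky_primary), ("backup", backup)])
print(name, text)  # backup echo: hello
```

Because every provider shares one call signature, swapping or reordering providers only changes the list passed in, not the calling code; the collected error messages make it possible to see why each provider in the chain was skipped.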

LiteLLM supports both synchronous and asynchronous API calls, load balancing across multiple deployments, spend tracking, and secure management of API keys. Together these features make it suitable for enterprise applications that must manage several LLM providers at once. Its integrations with logging and monitoring tools give developers visibility into, and control over, their API usage.
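Load balancing and spend tracking can likewise be sketched without any provider SDK. The example below is a hypothetical illustration, not LiteLLM's router: `Deployment`, `BudgetedRoundRobin`, the deployment names, and the flat per-call costs are all assumptions made for the example. It cycles through deployments in round-robin order and refuses any call that would push total spend past a fixed budget.

```python
import itertools
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Deployment:
    name: str                 # hypothetical deployment label
    cost_per_call_cents: int  # illustrative flat cost per request


class BudgetedRoundRobin:
    """Cycle through deployments and refuse any call that would exceed the budget."""

    def __init__(self, deployments: List[Deployment], budget_cents: int) -> None:
        if not deployments:
            raise ValueError("at least one deployment is required")
        self._cycle = itertools.cycle(deployments)
        self.budget_cents = budget_cents
        self.spent_cents = 0

    def route(self) -> Deployment:
        """Return the next deployment in round-robin order and record its cost."""
        deployment = next(self._cycle)
        if self.spent_cents + deployment.cost_per_call_cents > self.budget_cents:
            raise RuntimeError(f"budget of {self.budget_cents} cents would be exceeded")
        self.spent_cents += deployment.cost_per_call_cents
        return deployment


router = BudgetedRoundRobin(
    [Deployment("azure-east", 2), Deployment("azure-west", 3)],
    budget_cents=6,
)
print(router.route().name)  # azure-east
print(router.route().name)  # azure-west
print(router.spent_cents)   # 5
```

Costs are kept in integer cents so that budget checks are not affected by floating-point rounding. A production router would likely also account for latency, rate limits, and per-token pricing rather than a flat cost per call.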

In conclusion, LiteLLM offers a single way to manage API calls across many LLM providers, simplifying development and providing essential tools for cost control and observability. Its unified interface and broad feature set help developers streamline their workflows, reduce complexity, and keep their applications efficient and scalable.
