Liquid AI Introduces Liquid Foundation Models (LFMs)

Practical Solutions and Value Highlights:

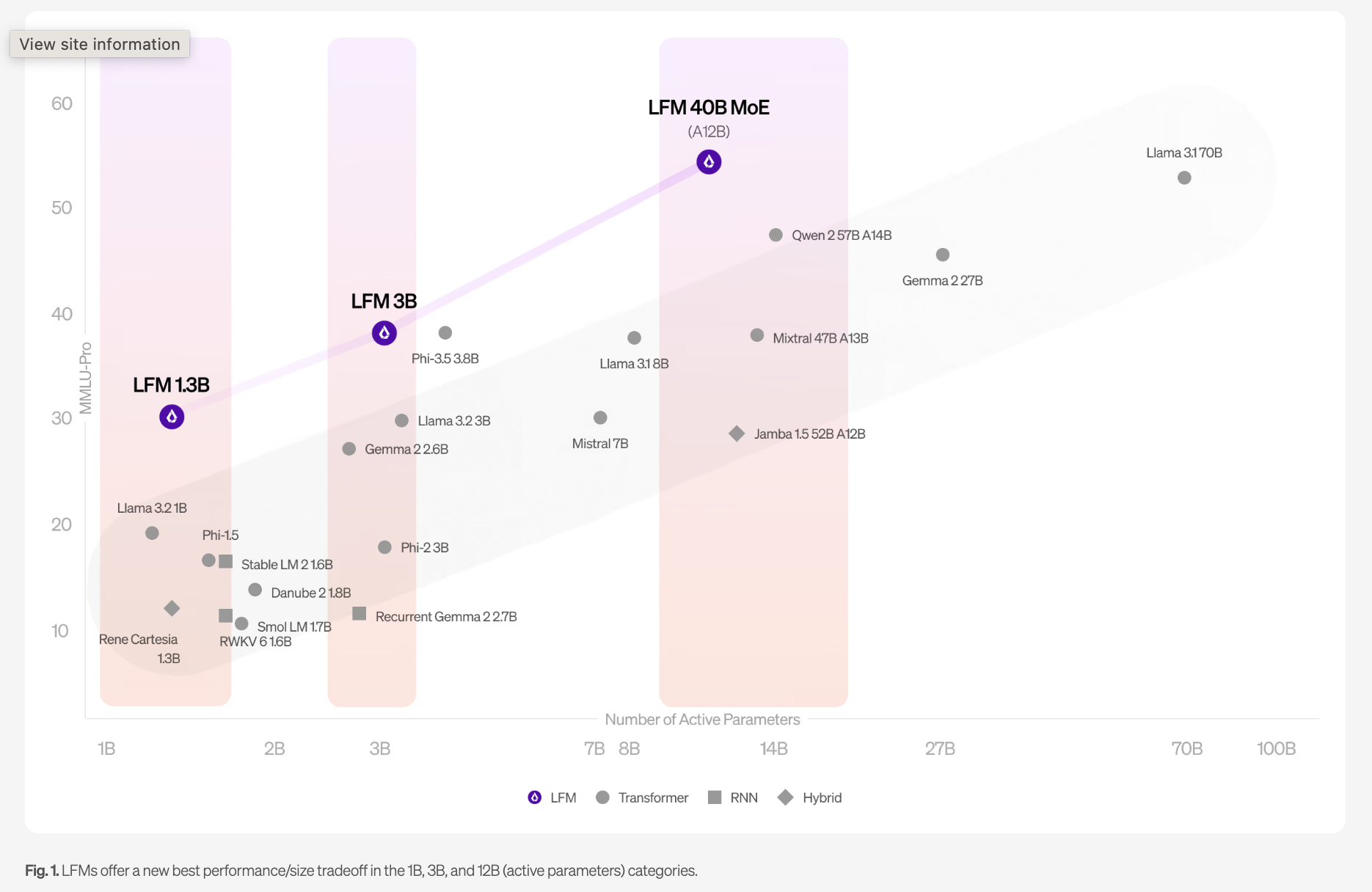

– **LFMs** are generative AI models that Liquid AI positions as leading their size classes in both output quality and efficiency.

– **LFM series** comes in three sizes (1B, 3B, and 40B) to cover different applications.

– **LFMs** aim for strong performance while keeping a smaller memory footprint than comparably sized models.
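
To make the footprint point concrete, here is a rough back-of-the-envelope sketch of weight memory for the three model sizes. It assumes nominal parameter counts of 1, 3, and 40 billion and a few common numeric precisions. The byte widths and the function name `weight_memory_gb` are illustrative assumptions, not figures published by Liquid AI, and the estimate covers weights only, ignoring activations and inference-time caches.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Assumptions (not from Liquid AI): nominal parameter counts, weights only.

# Bytes used to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}

# Nominal parameter counts taken from the model names (1B, 3B, 40B).
NOMINAL_PARAMS = {"1B": 1e9, "3B": 3e9, "40B": 40e9}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return approximate weight memory in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, n in NOMINAL_PARAMS.items():
        row = ", ".join(
            f"{p}: {weight_memory_gb(n, p):.0f} GB" for p in BYTES_PER_PARAM
        )
        print(f"{name} -> {row}")
```

Under these assumptions, 16-bit weights alone take roughly 2 GB, 6 GB, and 80 GB for the three sizes. Actual memory use depends on the architecture and runtime, which is where a design focused on footprint would be expected to differ.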

Architectural Innovations and Design Principles:

– **LFMs** build on ideas from dynamical systems and signal processing.

– **Featurization** (how input data is turned into features that drive computation) and **footprint** (the compute and memory cost of the architecture) are the two key design aspects.

– **Optimized** for deployment across a range of hardware platforms.

Performance Benchmarks and Comparison:

– **LFMs** outperform models of similar size on the reported benchmarks.

– **LFMs** handle sequential data well and offer multilingual support.

– **40B MoE model** (Mixture of Experts) balances model size against output quality.

Key Strengths and Use Cases:

– **LFMs** excel in general knowledge, multilingual support, and long-context tasks.

– **Optimized** for document analysis, chatbots, and Retrieval-Augmented Generation (RAG).

– **Future iterations** are expected to address current gaps in zero-shot code tasks and numerical calculations.

Deployment and Future Directions:

– **LFMs** are available for testing on several platforms and continue to be optimized.

– **Adaptable** across modalities and hardware requirements for flexible deployment.

– **Roadmap** includes extending LFMs to industries such as finance, biotech, and consumer electronics.

Conclusion:

– **LFMs** by Liquid AI take a different approach to model design, aiming for strong performance at each size.

– **Controlled release** models pair a new architecture with improved efficiency.

– **Stay competitive** by leveraging AI advancements for business growth.
