Practical Solutions for Efficient Deployment of Large Language Models

Challenges in Real-World Applications

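Large language models (LLMs) are hard to deploy in practice because they demand substantial compute and memory. For example, a model with 7 billion parameters stored in 16-bit precision needs about 14 GB for its weights alone, which exceeds what most phones and edge devices can hold.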

Introducing LightLLM Framework

LightLLM is a lightweight, scalable framework designed to optimize LLMs for resource-constrained environments such as mobile devices and edge hardware.

Key Optimization Techniques

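LightLLM uses three techniques to reduce model size while limiting the loss in accuracy: quantization (storing weights at lower numeric precision), pruning (removing weights that contribute little to the output), and distillation (training a smaller model to reproduce the behavior of a larger one).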
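
To make the three techniques concrete, here is a minimal sketch in plain Python. It is an illustration only, not LightLLM's implementation: the function names (`quantize_int8`, `prune_by_magnitude`, `distillation_loss`) are hypothetical, and the specific variants shown (symmetric per-tensor int8 quantization, magnitude-based pruning, and a temperature-scaled KL-divergence loss) are assumptions chosen because they are common forms of each technique.

```python
import math


def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization (assumed variant).

    Maps floats to integers in [-127, 127] and returns them with the scale
    needed to recover approximate float values.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from int8 values."""
    return [q * scale for q in quantized]


def prune_by_magnitude(weights, sparsity):
    """Zero out the smallest-magnitude fraction of weights (assumed variant)."""
    k = int(len(weights) * sparsity)  # number of weights to remove
    if k == 0:
        return list(weights)
    threshold = sorted(abs(w) for w in weights)[k - 1]
    pruned, removed = [], 0
    for w in weights:
        # The counter keeps ties at the threshold from removing more than k.
        if abs(w) <= threshold and removed < k:
            pruned.append(0.0)
            removed += 1
        else:
            pruned.append(w)
    return pruned


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL divergence from teacher to student on softened distributions."""
    teacher = softmax(teacher_logits, temperature)
    student = softmax(student_logits, temperature)
    kl = sum(t * math.log(t / s) for t, s in zip(teacher, student) if t > 0)
    return kl * temperature ** 2
```

In this sketch, quantizing to int8 stores each weight in one byte instead of two or four, pruning at `sparsity=0.5` zeroes half of the weights, and the distillation loss is zero only when the student's softened output distribution matches the teacher's.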

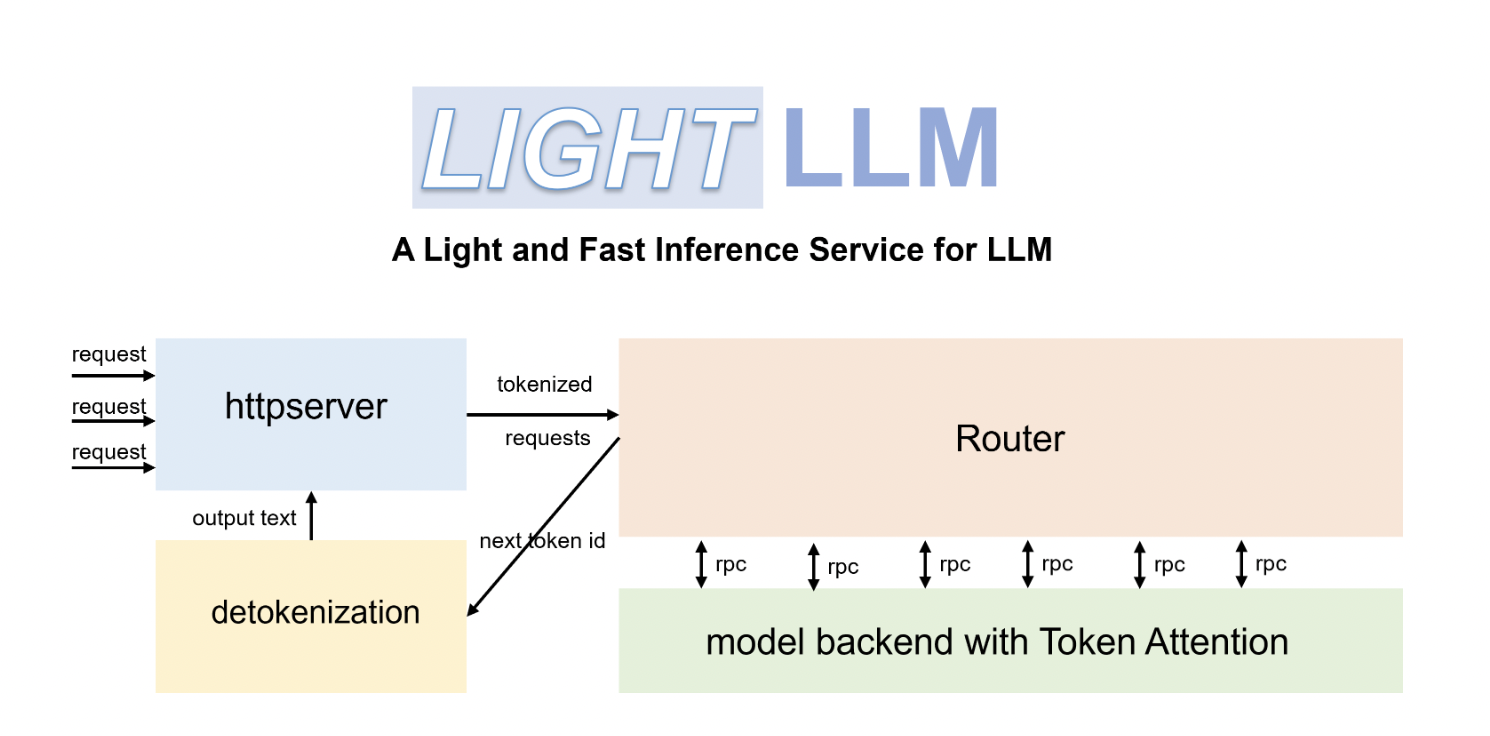

Architecture and Performance

LightLLM is organized into components for model handling, inference, optimization, and hardware utilization, which together are intended to keep inference fast and resource use low.

Value Proposition

LightLLM offers a way to deploy LLMs in constrained environments, with faster inference and lower memory and compute use than running the unoptimized model.

How AI Can Benefit Your Business

Adopting AI solutions such as LightLLM can help reshape work processes, automate routine tasks, and improve customer interactions, with results that can be measured against business outcomes.
