Understanding Hallucinations in Large Language Models (LLMs)

What Are Hallucinations?

Researchers have raised concerns about LLMs generating content that seems plausible but is factually inaccurate, a behavior known as hallucination. Even so, hallucinations may be useful in fields that reward creativity, such as drug discovery, where new ideas are crucial.

LLMs in Scientific Research

LLMs are being used in several scientific areas, including materials science, biology, and chemistry, where they assist with tasks such as describing molecules and designing drugs. Domain-specific models like MolT5 produce accurate molecule descriptions. General-purpose LLMs, by contrast, can produce creative outputs that are not always factually correct but may still offer useful insights for drug discovery.

The Challenge of Drug Discovery

Drug discovery is a complex and expensive process that requires exploring vast chemical spaces to find new solutions. Machine learning and generative models have been used to support this field, and researchers are now looking at how LLMs can help with molecule design and predictions.

Harnessing Creativity from Hallucinations

Hallucinations in LLMs can resemble creative thinking: the model recombines existing knowledge to produce new ideas. This kind of recombination can lead to unexpected discoveries, much as penicillin was discovered by accident. Drawing on these outputs, LLMs may help identify novel molecules and drive innovation in drug discovery.

Research Findings

Researchers from ScaDS.AI and Dresden University of Technology tested the hypothesis that hallucinations can improve LLM performance in drug discovery. They evaluated seven instruction-tuned LLMs, including GPT-4o and Llama-3.1-8B, and found that adding hallucinated descriptions to the input improved performance on classification tasks. For instance, Llama-3.1-8B showed an 18.35% improvement over prompts without hallucinated descriptions.

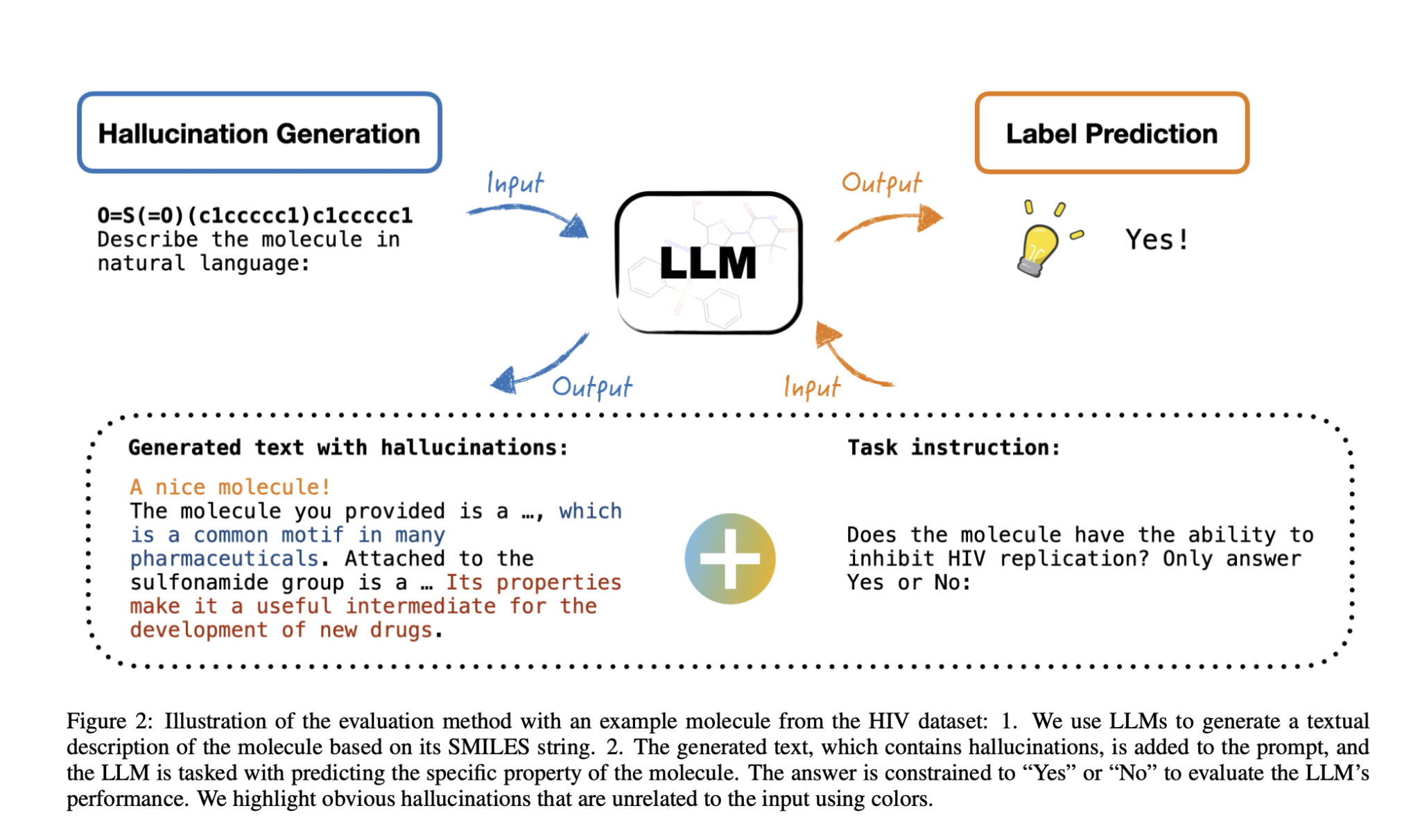

Generating and Evaluating Hallucinations

To generate hallucinations, the LLMs are prompted to turn SMILES strings (a text notation for molecular structures) into natural language descriptions. These descriptions are then assessed for factual consistency. The study found that although the LLM-generated descriptions often lack factual consistency, the text can still provide useful information for predictions.
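The description step can be illustrated with a minimal sketch. The prompt wording, the function names, and the `fake_llm` stand-in are all assumptions made for this example; the study's actual prompt and model interface are not given in this article.

```python
from typing import Callable

# Hypothetical prompt wording; the study's exact prompt is not shown here.
DESCRIBE_TEMPLATE = (
    "You are an expert in drug discovery.\n"
    "Describe the molecule in natural language.\n"
    "SMILES: {smiles}\n"
    "Description:"
)


def build_description_prompt(smiles: str) -> str:
    """Fill the template with a single SMILES string."""
    return DESCRIBE_TEMPLATE.format(smiles=smiles.strip())


def describe_molecule(smiles: str, llm: Callable[[str], str]) -> str:
    """Ask any text-in, text-out model for a free-form description."""
    return llm(build_description_prompt(smiles)).strip()


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call; it returns canned text so the
    # example runs without network access or API keys.
    return " A small aromatic molecule with a carboxylic acid group. "


aspirin = "CC(=O)OC1=CC=CC=C1C(=O)O"
print(build_description_prompt(aspirin))
print(describe_molecule(aspirin, fake_llm))
```

Passing the model in as a plain callable keeps the sketch independent of any particular provider: swapping `fake_llm` for a real client only requires a function that takes a prompt string and returns text.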

Impact on Molecular Property Predictions

The research examined how hallucinations affect predictions of molecular properties. It compared three input settings: SMILES strings alone, SMILES strings with MolT5 descriptions, and SMILES strings with LLM-generated (hallucinated) descriptions. The findings indicated that hallucinated descriptions generally improve performance, especially in larger models.
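The three input settings can be sketched as prompt variants that differ only in whether a description is attached and where it comes from. The prompt layout, the example question, and the placeholder descriptions below are hypothetical and chosen for illustration; they are not taken from the study.

```python
from typing import Dict, Optional


def build_classification_prompt(smiles: str, question: str,
                                description: Optional[str] = None) -> str:
    """Assemble one prompt; the description line is optional."""
    lines = [f"SMILES: {smiles}"]
    if description:
        lines.append(f"Description: {description}")
    lines.append(f"Question: {question}")
    lines.append("Answer with Yes or No.")
    return "\n".join(lines)


def build_conditions(smiles: str, question: str,
                     reference_description: str,
                     llm_description: str) -> Dict[str, str]:
    """Return one prompt per input setting for the same molecule."""
    return {
        "smiles_only": build_classification_prompt(smiles, question),
        "smiles_plus_reference": build_classification_prompt(
            smiles, question, reference_description),
        "smiles_plus_llm": build_classification_prompt(
            smiles, question, llm_description),
    }


prompts = build_conditions(
    smiles="CC(=O)OC1=CC=CC=C1C(=O)O",
    # Hypothetical task question, used only to make the example concrete.
    question="Is this molecule likely to cross the blood-brain barrier?",
    reference_description="(description from a reference model such as MolT5)",
    llm_description="(free-form description written by the LLM)",
)
for name, prompt in prompts.items():
    print(f"--- {name} ---\n{prompt}\n")
```

Holding the molecule and question fixed across the three prompts means any difference in the model's answers can be attributed to the attached description rather than to the wording of the task.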

Conclusion and Future Directions

The study suggests that hallucinations in LLMs can improve performance on drug discovery tasks. In the reported experiments, adding hallucinated descriptions generally led to better results than the baselines it was compared against. This points to the creative potential of AI in pharmaceutical research and encourages further exploration of its applications.
