Understanding Neural Networks and Their Representations

Neural networks (NNs) are powerful models that map complex, high-dimensional data into simpler representations. Researchers have traditionally focused on the outputs of these models, but they are increasingly interested in how the models represent data internally. Understanding these internal representations helps when reusing learned features for other tasks and when comparing different model architectures. It also offers insight into how a network processes information and which patterns it has learned.

Why Compare Representations?

Comparing what different neural models learn matters in many research areas, and a range of methods has been developed to measure the similarity between representations. Classical methods include Canonical Correlation Analysis (CCA) and its variants. However, recent findings show that even newer measures, such as Centered Kernel Alignment (CKA), can be sensitive to small changes in the representations. This highlights the need for more robust analysis tools.
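To make the comparison problem concrete, here is a minimal sketch of the linear variant of CKA, assuming each representation is stored as a matrix with one row per sample. The function name `linear_cka` and the toy data are illustrative choices, not taken from the paper.

```python
import numpy as np

def linear_cka(X, Y):
    """Linear CKA between two representations of the same n samples.

    X has shape (n, d1) and Y has shape (n, d2); rows must be aligned.
    """
    # Center each feature so the score ignores constant offsets.
    X = X - X.mean(axis=0)
    Y = Y - Y.mean(axis=0)
    cross = np.linalg.norm(Y.T @ X, "fro") ** 2
    norm_x = np.linalg.norm(X.T @ X, "fro")
    norm_y = np.linalg.norm(Y.T @ Y, "fro")
    return cross / (norm_x * norm_y)

# Toy check: a rotated and rescaled copy of X, and an unrelated matrix.
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 16))
Q, _ = np.linalg.qr(rng.normal(size=(16, 16)))  # random orthogonal matrix
cka_rotated = linear_cka(X, 3.0 * X @ Q)
cka_unrelated = linear_cka(X, rng.normal(size=(100, 16)))
print(cka_rotated, cka_unrelated)
```

Linear CKA is invariant to orthogonal transformations and isotropic scaling, so the rotated copy scores essentially 1. Other kinds of change can move the score, which is the sensitivity discussed above.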

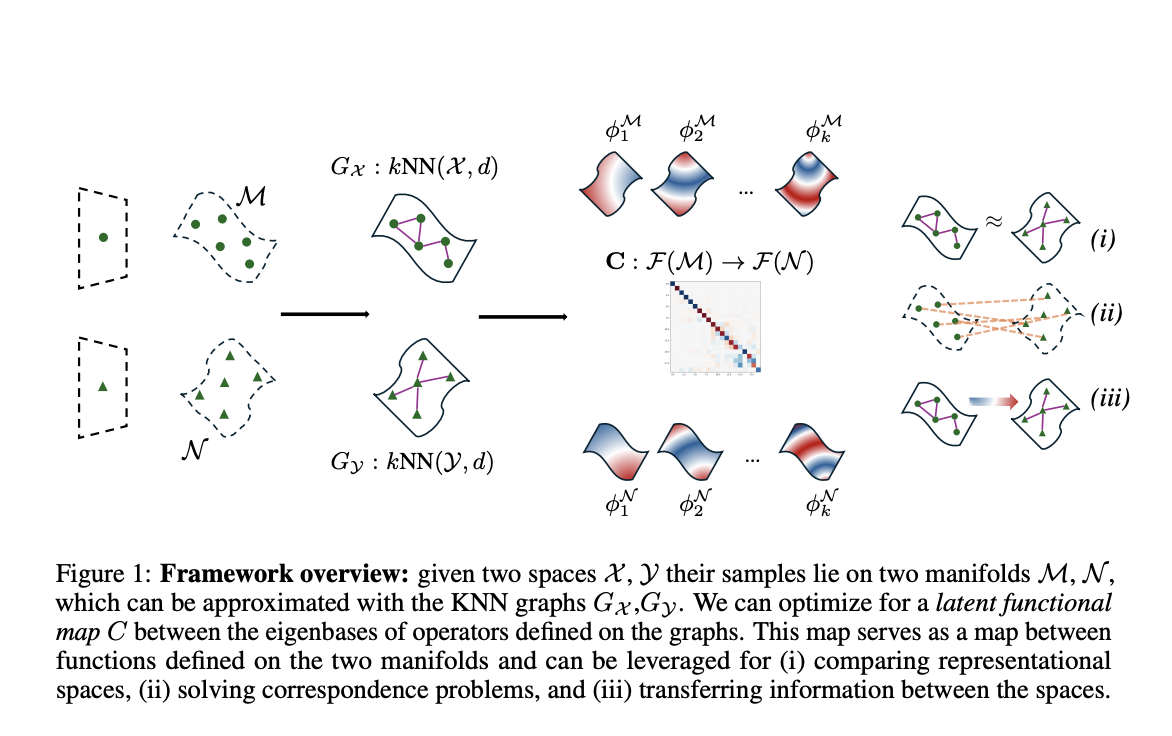

Introducing Latent Functional Maps (LFM)

Researchers have proposed a new approach, the Latent Functional Map (LFM), to better understand neural network representations. Instead of comparing individual samples, LFM models the relationship between the function spaces defined on two representations, drawing on principles from spectral geometry. This enables efficient comparison of, and mapping between, different representations, which makes it easier to transfer information between models.

How LFM Works

The LFM method involves three main steps:

- Graph Representation: It constructs a graph to represent the latent space.

- Descriptor Functions: It encodes important features through specific functions.

- Map Optimization: It optimizes the mapping between different representation spaces.

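The method is adaptable: because it works with functions defined on a graph of the samples rather than with raw coordinates, it can compare latent spaces of different dimensionality.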
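The three steps can be sketched in a few lines of NumPy. This is a simplified illustration under stated assumptions, not the authors' implementation: the graph is an unweighted symmetric k-nearest-neighbor graph, the descriptors are distances to a shared set of anchor samples, and the map is fitted by plain least squares with no regularization. All function names and parameters (`knn_laplacian`, `spectral_basis`, `anchor_descriptors`, `fit_functional_map`, `k`, `n_eig`) are hypothetical.

```python
import numpy as np

def knn_laplacian(Z, k=10):
    """Step 1: symmetric kNN graph on the rows of Z, returned as L = D - W."""
    n = Z.shape[0]
    sq = np.sum(Z ** 2, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * (Z @ Z.T)  # squared distances
    np.fill_diagonal(d2, np.inf)                      # exclude self-matches
    nbrs = np.argsort(d2, axis=1)[:, :k]
    W = np.zeros((n, n))
    W[np.repeat(np.arange(n), k), nbrs.ravel()] = 1.0
    W = np.maximum(W, W.T)                            # symmetrize
    return np.diag(W.sum(axis=1)) - W

def spectral_basis(Z, k=10, n_eig=10):
    """Eigenvectors of the graph Laplacian with the smallest eigenvalues."""
    _, vecs = np.linalg.eigh(knn_laplacian(Z, k))
    return vecs[:, :n_eig]

def anchor_descriptors(Z, anchors):
    """Step 2 (assumed descriptor choice): distance to each anchor sample."""
    return np.linalg.norm(Z[:, None, :] - Z[anchors][None, :, :], axis=2)

def fit_functional_map(Phi_x, Phi_y, F_x, F_y):
    """Step 3: least-squares map C with C @ A ~= B in spectral coordinates."""
    A = Phi_x.T @ F_x  # descriptor coefficients in the basis of space X
    B = Phi_y.T @ F_y  # descriptor coefficients in the basis of space Y
    C_T, *_ = np.linalg.lstsq(A.T, B.T, rcond=None)
    return C_T.T

# Toy example: Y embeds the 8-dimensional X isometrically into 12 dimensions.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 8))
Q, _ = np.linalg.qr(rng.normal(size=(12, 12)))
Y = X @ Q[:8, :]  # rows of Q are orthonormal, so distances are preserved
anchors = rng.choice(200, size=30, replace=False)

Phi_x, Phi_y = spectral_basis(X), spectral_basis(Y)
C = fit_functional_map(Phi_x, Phi_y,
                       anchor_descriptors(X, anchors),
                       anchor_descriptors(Y, anchors))
print(C.shape, np.abs(C - np.eye(10)).max())
```

In this toy case the two spaces differ in dimension but share the same neighborhood structure, so the fitted map is close to the identity. For representations that differ more, the structure of `C` (for example, how far it departs from a diagonal matrix) is one way such a map could be inspected.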

Benefits of LFM Over Traditional Methods

LFM is reported to be more robust than CKA. CKA can change noticeably under small perturbations that leave the data separable, whereas LFM remains stable under considerably larger variations. The reported results show LFM consistently outperforming CKA as conditions become more complex. In addition, visualizations such as t-SNE projections suggest that LFM preserves the structure of the data and the accuracy of classification.

Conclusion: A Game Changer in Neural Representation Analysis

Latent Functional Maps offer a new approach to analyzing neural network representations. By applying tools from spectral geometry, they provide a framework for understanding and aligning latent spaces across models, and they show promise for addressing key challenges in representation learning.

For further reading, check out the original research paper. All credit goes to the authors involved.
