Understanding KVSharer: A Smart Solution for AI Efficiency

What is KVSharer?

KVSharer is a method for reducing the memory usage of large language models (LLMs) during inference with little loss in performance. It lets different layers of a model share key-value (KV) caches, so that some layers reuse another layer's cache instead of storing their own. This saves GPU memory and speeds up generation.
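To make layer-wise sharing concrete, here is a minimal sketch of a per-layer KV cache in which some layers read another layer's cache instead of storing their own. The class `SharedKVCache`, its `share_map` argument, and the rule that a layer may only reuse an earlier, non-sharing layer's cache (so that cache is already filled for the current token) are illustrative assumptions, not KVSharer's actual code.

```python
from __future__ import annotations

from dataclasses import dataclass, field

import numpy as np


@dataclass
class SharedKVCache:
    """Hypothetical layer-wise KV cache where some layers reuse another layer's cache.

    share_map maps a sharing layer to the (earlier) layer whose cache it reads.
    Layers not in share_map are owners and store their own keys/values.
    """
    num_layers: int
    share_map: dict[int, int] = field(default_factory=dict)
    _store: dict[int, tuple[np.ndarray, np.ndarray]] = field(
        default_factory=dict, init=False, repr=False
    )

    def __post_init__(self) -> None:
        # Assumption: a layer may only reuse an earlier layer that is itself an owner.
        for dst, src in self.share_map.items():
            if not (0 <= src < dst < self.num_layers) or src in self.share_map:
                raise ValueError(f"invalid sharing pair {dst} -> {src}")

    def owner(self, layer: int) -> int:
        # A sharing layer reads from its source; every other layer owns its cache.
        return self.share_map.get(layer, layer)

    def append(self, layer: int, k: np.ndarray, v: np.ndarray) -> None:
        # Sharing layers store nothing; owners append new tokens along axis 0.
        if layer in self.share_map:
            return
        if layer in self._store:
            old_k, old_v = self._store[layer]
            k = np.concatenate([old_k, k], axis=0)
            v = np.concatenate([old_v, v], axis=0)
        self._store[layer] = (k, v)

    def get(self, layer: int) -> tuple[np.ndarray, np.ndarray]:
        return self._store[self.owner(layer)]

    def stored_layers(self) -> int:
        return len(self._store)


# Toy usage: 4 layers, layer 3 reuses layer 1's cache; head_dim of 8 is arbitrary.
cache = SharedKVCache(num_layers=4, share_map={3: 1})
for layer in range(4):
    kv = np.full((1, 8), float(layer))  # one new token's keys/values for this layer
    cache.append(layer, kv, kv)
print(cache.stored_layers())  # 3: layer 3 stores nothing
print(cache.get(3)[0][0, 0])  # 1.0: layer 3 reads layer 1's keys
```

Storage only grows for owner layers, which is where the memory saving comes from.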

The Problem with Current Models

Large language models can be very resource-intensive, especially during inference, where the KV cache grows with the number of layers, the sequence length, and the batch size. This demands a lot of GPU memory, which raises costs and limits throughput. Existing methods mostly compress the KV cache within individual layers and overlook the potential for sharing caches across layers.
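A back-of-the-envelope estimate shows why the KV cache is so costly. The helper `kv_cache_bytes` is a hypothetical illustration; the shape below matches Llama-2-7B (32 layers, 32 KV heads, head dimension 128), and fp16 storage (2 bytes per element) is an assumption.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, batch_size: int = 1, bytes_per_elem: int = 2) -> int:
    # Factor of 2: every layer stores one key tensor and one value tensor.
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * batch_size * bytes_per_elem


# Llama-2-7B-like shape in fp16, one sequence of 4,096 tokens.
size = kv_cache_bytes(32, 32, 128, seq_len=4096)
print(size / 2**30)  # 2.0 (GiB)
```

Because the total scales linearly with `num_layers`, every layer that can skip storing its own cache removes a proportional slice of this memory.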

How KVSharer Works

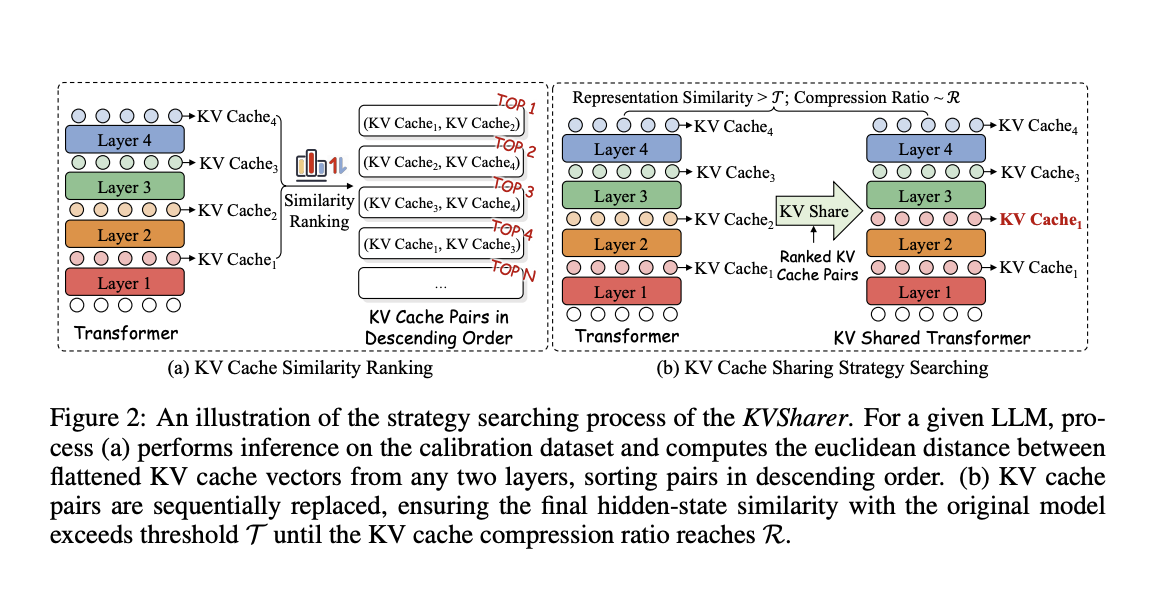

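KVSharer works in two steps:

- Searching for a sharing strategy: Before inference, it determines which layers can reuse another layer's KV cache without significantly hurting performance. This search requires no additional training.

- Inference with shared caches: During generation, the selected layers reuse the KV caches of their assigned partner layers instead of storing their own, which reduces memory usage and speeds up inference.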
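The first step can be sketched as a greedy search. The KVSharer paper reports a counterintuitive finding: sharing caches between dissimilar layers preserves performance better than sharing between similar ones. This sketch therefore ranks layer pairs by the Euclidean distance between their averaged KV caches, most distant first, and accepts a pair only if a quality check stays above a threshold. The function name, the `evaluate` callback (for example, the similarity between the original and modified model's outputs on a few calibration prompts), the threshold, and the greedy order are assumptions for illustration, not the reference implementation.

```python
from __future__ import annotations

from itertools import combinations
from typing import Callable

import numpy as np


def search_sharing_strategy(
    layer_kv: np.ndarray,  # (num_layers, d): per-layer KV summary, e.g. averaged over calibration data
    evaluate: Callable[[dict[int, int]], float],  # hypothetical quality score for a candidate strategy
    target_shared: int,
    threshold: float = 0.9,
) -> dict[int, int]:
    """Greedily build {sharing_layer: source_layer}, trying dissimilar pairs first."""
    num_layers = layer_kv.shape[0]
    # combinations yields (i, j) with i < j; sort by distance, most dissimilar first.
    pairs = sorted(
        combinations(range(num_layers), 2),
        key=lambda p: np.linalg.norm(layer_kv[p[0]] - layer_kv[p[1]]),
        reverse=True,
    )
    share_map: dict[int, int] = {}
    for src, dst in pairs:  # the later layer (dst) reuses the earlier layer's (src) cache
        if len(share_map) >= target_shared:
            break
        # Avoid chains: src must own its cache, dst must not already share or be a source.
        if dst in share_map or src in share_map or dst in share_map.values():
            continue
        candidate = {**share_map, dst: src}
        if evaluate(candidate) >= threshold:
            share_map = candidate
    return share_map


# Toy usage: 4 layers with 1-D summaries; the lambda is a stand-in that loses
# 0.05 quality per shared layer.
layer_kv = np.arange(4, dtype=float).reshape(4, 1)
strategy = search_sharing_strategy(
    layer_kv, lambda m: 1.0 - 0.05 * len(m), target_shared=2, threshold=0.85
)
print(strategy)  # {3: 0, 2: 0}
```

The returned mapping is exactly what the second step needs at inference time: a fixed assignment of which layers read which cache.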

Benefits of KVSharer

- Reduces Memory Consumption: It can lower the memory needed for KV caches by about 30%.

- Maintains Performance: Even with compression, it retains 90-95% of the original model’s performance.

- Faster Inference: It can accelerate the generation process by at least 1.3 times.

- Seamless Integration: Works alongside existing intra-layer KV cache compression methods, so their savings can be combined.
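A quick way to reason about the memory and integration benefits: if some layers store no cache of their own, KV memory shrinks by the fraction of layers that share. The helper below is a hypothetical back-of-the-envelope calculation; it assumes every layer's cache is the same size and that an intra-layer method's savings multiply independently with layer sharing, which a real combination may not exactly satisfy.

```python
def kv_memory_fraction(num_layers: int, shared_layers: int,
                       intra_layer_keep: float = 1.0) -> float:
    """Fraction of the original KV cache memory that remains (hypothetical helper).

    shared_layers: layers that reuse another layer's cache and store none of their own.
    intra_layer_keep: fraction of entries an intra-layer method keeps in each
        remaining layer (assumed independent of layer sharing).
    """
    if not 0 <= shared_layers < num_layers:
        raise ValueError("shared_layers must be in [0, num_layers)")
    owner_fraction = (num_layers - shared_layers) / num_layers
    return owner_fraction * intra_layer_keep


# A hypothetical 32-layer model where 10 layers reuse another layer's cache:
print(kv_memory_fraction(32, 10))       # 0.6875, i.e. about 31% saved by sharing alone
# Combined with an intra-layer method that keeps half of each remaining cache:
print(kv_memory_fraction(32, 10, 0.5))  # 0.34375
```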

Real-World Testing

The researchers evaluated KVSharer on several models, including Llama2 and InternLM2, and found that it compresses the KV cache while largely preserving performance across a range of tasks and datasets.

Conclusion

KVSharer represents a significant step forward in making AI models more efficient. By sharing KV caches between layers, it optimizes memory use and enhances processing speed without the need for additional training. This makes it a valuable tool for businesses looking to leverage AI solutions effectively.


Explore AI Solutions for Your Business

If you run LLMs in production, techniques like KVSharer can help lower inference costs. More broadly, here are some steps for getting started with AI in your business:

- Identify Automation Opportunities: Find areas in customer interactions that can benefit from AI.

- Define KPIs: Set measurable goals for your AI initiatives.

- Select an AI Solution: Choose tools that fit your needs and allow for customization.

- Implement Gradually: Start small, gather data, and expand your AI applications wisely.

For personalized advice on AI KPI management, reach out to us at hello@itinai.com.