The Release of Reader-LM-0.5B and Reader-LM-1.5B by Jina AI

Revolutionizing HTML-to-Markdown Conversion with Small Language Models

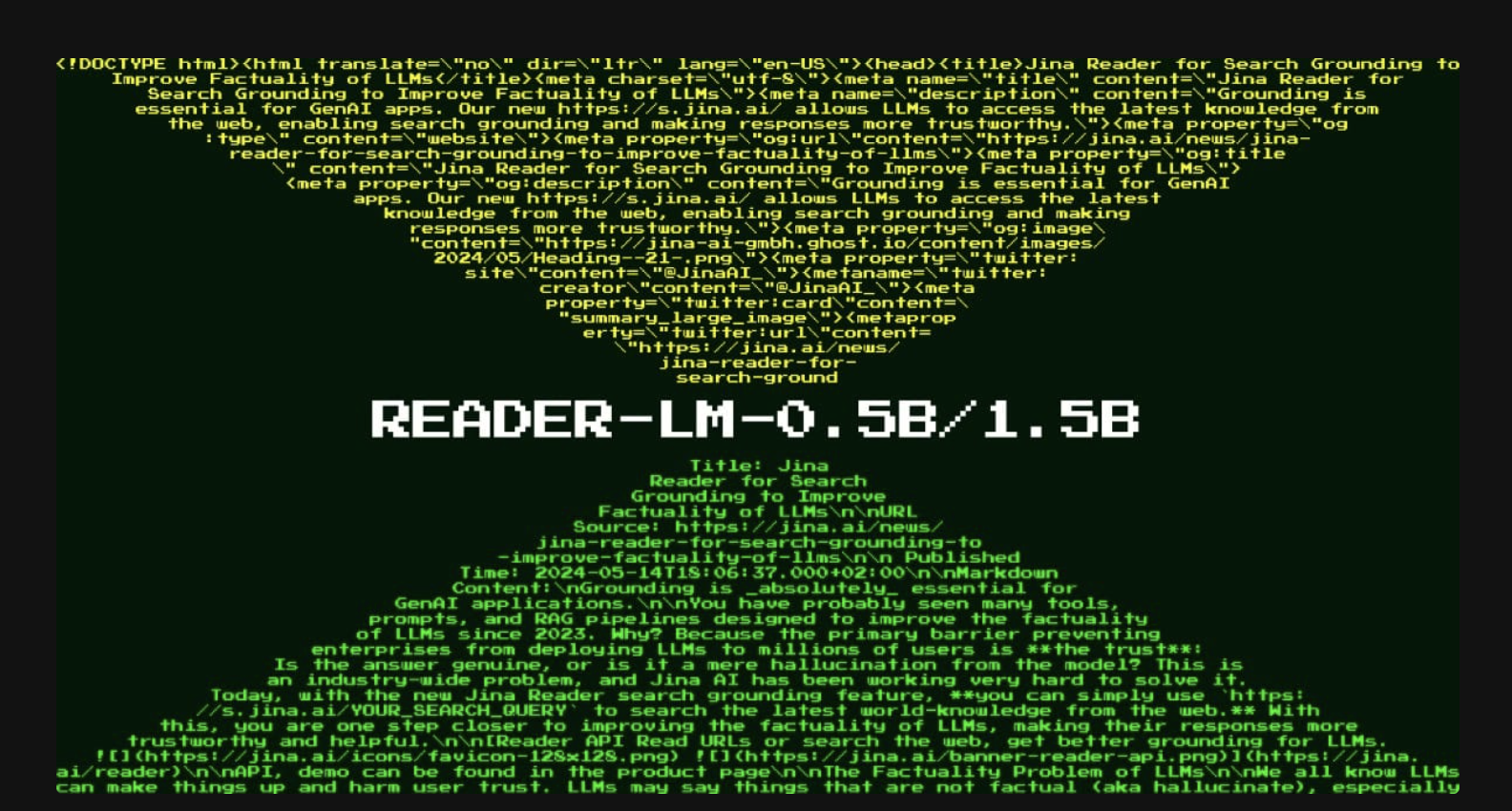

The release of Reader-LM-0.5B and Reader-LM-1.5B by Jina AI marks a significant milestone in small language model (SLM) technology. These models are designed to efficiently convert raw, noisy HTML from the open web into clean markdown format, addressing the challenges of modern web content.

Background and Purpose

In April 2024, Jina AI introduced Jina Reader, an API that converts any URL into markdown suitable for large language models (LLMs). The API relied on existing tools, which sometimes filtered content incorrectly and struggled with complex HTML structures. To overcome these limitations, Jina AI developed the Reader-LM models to handle HTML-to-markdown conversion more efficiently.
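To make the URL-to-markdown idea concrete, here is a minimal sketch of calling such an API from Python. It assumes the public Reader endpoint is `https://r.jina.ai/` with the target URL appended to it, which this article does not spell out; the helper names `reader_url` and `fetch_markdown` and the `User-Agent` value are hypothetical.

```python
from urllib.request import Request, urlopen

# Assumption: the Reader endpoint is https://r.jina.ai/ and accepts the
# target URL appended directly to it. The article does not state this.
READER_PREFIX = "https://r.jina.ai/"


def reader_url(target_url: str) -> str:
    """Build the Reader request URL for a target page."""
    return READER_PREFIX + target_url.strip()


def fetch_markdown(target_url: str, timeout: float = 30.0) -> str:
    """Fetch the markdown rendering of a page (performs a network call)."""
    # The User-Agent value is an arbitrary placeholder for this example.
    request = Request(reader_url(target_url), headers={"User-Agent": "reader-example"})
    with urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")
```

Calling `fetch_markdown("https://example.com")` would return the page as markdown text if the endpoint behaves as assumed; rate limits and authentication are left out of this sketch.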

Introduction of Reader-LM Models

Jina AI released two small language models: Reader-LM-0.5B and Reader-LM-1.5B. These models are trained specifically to convert raw HTML into markdown, offering efficient performance without expensive infrastructure. They outperform larger models in the task of HTML-to-markdown conversion while being just a fraction of their size.
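A rough sketch of running one of these models locally with the Hugging Face `transformers` library might look like the following. The checkpoint name `jinaai/reader-lm-1.5b`, the choice to pass raw HTML as a single user message with no instruction text, and the `repetition_penalty` value are assumptions that do not come from this article; `build_messages` and `html_to_markdown` are hypothetical helper names.

```python
def build_messages(html: str) -> list[dict[str, str]]:
    """Wrap raw HTML as a single user turn; no instruction text is added."""
    return [{"role": "user", "content": html}]


def html_to_markdown(html: str,
                     checkpoint: str = "jinaai/reader-lm-1.5b",  # assumed model id
                     max_new_tokens: int = 1024) -> str:
    """Convert HTML to markdown with a causal LM (downloads model weights)."""
    # Imported lazily so build_messages works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(checkpoint)
    model = AutoModelForCausalLM.from_pretrained(checkpoint)

    # Render the chat template as text, ending with the assistant prompt.
    prompt = tokenizer.apply_chat_template(
        build_messages(html), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(
        **inputs,
        max_new_tokens=max_new_tokens,
        do_sample=False,          # greedy decoding for a deterministic conversion
        repetition_penalty=1.08,  # assumed value; a mild guard against loops
    )
    # Decode only the newly generated tokens, not the echoed prompt.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

For example, `html_to_markdown("<html><body><h1>Hello, world!</h1></body></html>")` would load the model on CPU and return its markdown rendering. Long pages need a much larger `max_new_tokens` and, realistically, a GPU.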

Architecture and Specifications

The Reader-LM models are designed to handle long-context inputs and perform selective copying from HTML to markdown. Both models support a context length of up to 256K tokens, which is crucial for processing the lengthy, noisy HTML found on the web. Their ability to handle multilingual content makes them versatile tools for global applications.

Performance and Benchmarking

Reader-LM-0.5B and Reader-LM-1.5B have been evaluated against several large language models and are reported to produce cleaner, more accurate markdown from HTML.
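This article does not name the metrics behind that comparison. As an illustration only, one common way to score generated markdown against a reference is word error rate (WER): the word-level edit distance divided by the number of words in the reference. The function below is a small self-contained sketch of that metric, not the evaluation code used for Reader-LM.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        # Empty reference: perfect only if the hypothesis is empty too.
        return 0.0 if not hyp else float("inf")

    # Rolling-row dynamic programming over the edit-distance table.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1      # reference word missing from output
            insertion = current[j - 1] + 1  # extra word in output
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)
```

A lower score is better: an output identical to the reference scores 0.0, and each dropped, added, or changed word raises the score in proportion to the reference length.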

Training and Development

Training the Reader-LM models required preparing high-quality data pairs of raw HTML and corresponding markdown. The models were optimized to handle the task without unnecessary computational overhead, and techniques such as contrastive search were used to prevent degeneration and repetitive loops during markdown generation.
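To show what a repetitive loop looks like in practice, here is a small sketch of a repetition check that a decoding loop could use as a stop criterion. This is not contrastive search itself, and it is not taken from the Reader-LM code; it is a simpler, complementary guard. The function name and the default thresholds are hypothetical.

```python
def has_repeating_tail(token_ids: list[int],
                       max_period: int = 32,
                       min_repeats: int = 3) -> bool:
    """Return True if the sequence ends with one block repeated min_repeats times."""
    for period in range(1, max_period + 1):
        span = period * min_repeats
        if span > len(token_ids):
            break  # not enough tokens to hold this many repeats
        tail = token_ids[-span:]
        block = tail[-period:]
        # Compare each period-sized chunk of the tail with the final chunk.
        if all(tail[k * period:(k + 1) * period] == block
               for k in range(min_repeats)):
            return True
    return False
```

A generation loop could call this after each new token and stop early when it returns `True`. Legitimate markdown can also repeat (long tables, for instance), so the thresholds would need tuning on real outputs.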

Real-World Applications

Reader-LM is designed for practical use in both individual and enterprise environments, offering efficient data processing and multilingual capabilities that broaden its applicability to various industries and regions.

Conclusion

The release of Reader-LM-0.5B and Reader-LM-1.5B represents a leap forward in small language model technology, offering a powerful tool for developers and enterprises looking to optimize their data workflows.