Uncovering Insights into Language Processing with AI and Neuroscience

Understanding Brain-Model Similarity

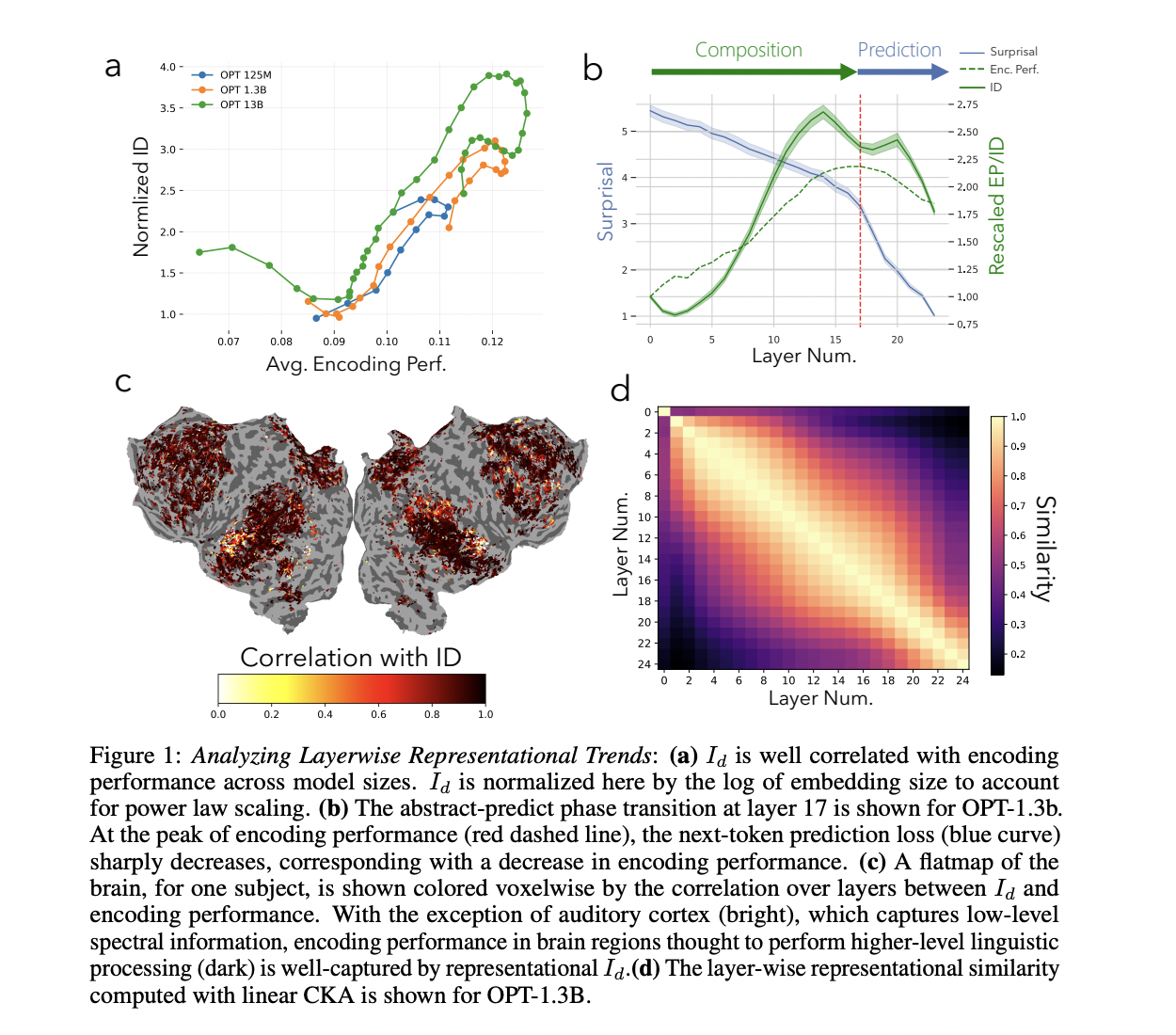

Cognitive neuroscience studies how the brain processes complex information such as language. One line of work compares brain activity with the internal representations of artificial neural networks, especially large language models (LLMs). By examining how LLMs handle language, researchers aim to improve their understanding of both human cognition and machine learning systems.

Challenges in Uncovering Brain-Model Similarity

A central challenge is understanding why certain layers of an LLM align more closely with brain activity than others. Established methods, such as recording brain responses with fMRI and fitting linear projections from a model's layer activations to those responses, have provided insights, but the specific reasons these layers succeed remain poorly understood and call for further research.
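To make the idea of a linear projection concrete, here is a minimal sketch of a layer-wise comparison: fit a ridge regression from each layer's activations to the measured responses, then score each layer by how well it predicts held-out responses. Everything in it is an assumption for illustration, including the function names, the ridge penalty, and above all the data, which is random and constructed so that layer 1 drives the "responses". It is not the study's pipeline and it produces no real results.

```python
import numpy as np

def ridge_fit(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha I)^-1 X^T Y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)

def encoding_score(X_train, Y_train, X_test, Y_test, alpha=1.0):
    """Mean Pearson correlation between predicted and held-out responses."""
    # Center with training statistics so no intercept term is needed.
    x_mean, y_mean = X_train.mean(axis=0), Y_train.mean(axis=0)
    W = ridge_fit(X_train - x_mean, Y_train - y_mean, alpha)
    Y_pred = (X_test - x_mean) @ W + y_mean
    # Pearson r per response channel (column), averaged across channels.
    yp = Y_pred - Y_pred.mean(axis=0)
    yt = Y_test - Y_test.mean(axis=0)
    r = (yp * yt).sum(axis=0) / (
        np.linalg.norm(yp, axis=0) * np.linalg.norm(yt, axis=0)
    )
    return float(r.mean())

def make_synthetic_layers(n_samples=400, n_features=20, n_voxels=5, seed=0):
    """Hypothetical stand-in data: responses are built from layer 1 by design."""
    rng = np.random.default_rng(seed)
    layers = [rng.standard_normal((n_samples, n_features)) for _ in range(3)]
    true_W = rng.standard_normal((n_features, n_voxels))
    noise = 0.5 * rng.standard_normal((n_samples, n_voxels))
    responses = layers[1] @ true_W + noise
    return layers, responses

def score_layers(layers, responses, n_train=300, alpha=1.0):
    """Score every layer on the same train/test split."""
    return [
        encoding_score(X[:n_train], responses[:n_train],
                       X[n_train:], responses[n_train:], alpha)
        for X in layers
    ]

layers, responses = make_synthetic_layers()
scores = score_layers(layers, responses)
for i, s in enumerate(scores):
    print(f"layer {i}: held-out correlation = {s:.3f}")
```

With real data, the rows would be stimuli (for example, words or sentences), the columns of each layer matrix would be hidden-unit activations, and the response matrix would hold the recorded brain signal. A score like this says which layer predicts best, not why, which is the gap described above.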

New Methodology for Exploring Brain-Model Similarity

Researchers have introduced a methodology that uses manifold learning techniques to explore how LLMs achieve brain-model similarity. Applying it, they identified a two-phase abstraction process in LLMs, made up of a composition phase and a prediction phase. The result illustrates how this approach can shed light on both LLM and brain function.
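The text does not say which manifold learning technique was used. As one illustration of the general idea, the sketch below estimates the intrinsic dimension of a set of representations with the TwoNN estimator, which uses the ratio of each point's second-nearest to nearest neighbor distance. The choice of TwoNN, the function name, and the synthetic data are all assumptions made for this example.

```python
import numpy as np

def twonn_dimension(X):
    """Maximum-likelihood TwoNN estimate of intrinsic dimension.

    For each point, mu = r2 / r1 is the ratio of the distances to its
    second and first nearest neighbors; the estimate is N / sum(log mu).
    Assumes no duplicate points (r1 > 0).
    """
    n = X.shape[0]
    log_mu = np.empty(n)
    for i in range(n):
        d = np.linalg.norm(X - X[i], axis=1)
        d[i] = np.inf  # exclude the point itself
        r1, r2 = np.partition(d, 1)[:2]  # two smallest distances, in order
        log_mu[i] = np.log(r2 / r1)
    return float(n / log_mu.sum())

rng = np.random.default_rng(0)

# Hypothetical "layer" whose activations lie on a 2-D plane inside 20-D space.
low_dim = rng.standard_normal((1000, 2)) @ rng.standard_normal((2, 20))
# Hypothetical "layer" whose activations fill all 10 of their dimensions.
high_dim = rng.standard_normal((1000, 10))

dim_low = twonn_dimension(low_dim)
dim_high = twonn_dimension(high_dim)
print(f"estimated dimension, planar data:    {dim_low:.2f}")
print(f"estimated dimension, full-rank data: {dim_high:.2f}")
```

Running an estimator like this on each layer's activations gives a per-layer profile, and changes in that profile across depth are one way that distinct phases of processing could show up.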

Insights from the Research

The study found that the composition phase, rather than the prediction phase, is primarily responsible for brain-model similarity. This opens new avenues for improving alignment between brains and language models, and it suggests that LLM performance could be improved by focusing on spectral properties across layers.
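The text does not define "spectral properties". One plausible reading, assumed here purely for illustration, is the eigenvalue spectrum of each layer's activation covariance. The sketch below computes that spectrum and summarizes it with the participation ratio, a common measure of how many directions carry substantial variance. The function names and the synthetic data are hypothetical.

```python
import numpy as np

def covariance_spectrum(X):
    """Eigenvalues of the sample covariance of X, largest first."""
    Xc = X - X.mean(axis=0)
    cov = Xc.T @ Xc / (X.shape[0] - 1)
    eig = np.linalg.eigvalsh(cov)[::-1]  # eigvalsh returns ascending order
    return np.clip(eig, 0.0, None)       # remove tiny negative round-off

def participation_ratio(eigenvalues):
    """(sum of eigenvalues)^2 / (sum of squared eigenvalues).

    Equals k when variance is spread evenly over k directions and
    approaches 1 when a single direction dominates.
    """
    eig = np.asarray(eigenvalues, dtype=float)
    return float(eig.sum() ** 2 / (eig ** 2).sum())

rng = np.random.default_rng(0)

# Hypothetical activations with variance spread over all 8 dimensions.
isotropic = rng.standard_normal((2000, 8))
# Hypothetical activations confined to a 2-D subspace of the same 8-D space.
low_rank = rng.standard_normal((2000, 2)) @ rng.standard_normal((2, 8))

pr_isotropic = participation_ratio(covariance_spectrum(isotropic))
pr_low_rank = participation_ratio(covariance_spectrum(low_rank))
print(f"participation ratio, isotropic: {pr_isotropic:.2f}")
print(f"participation ratio, low rank:  {pr_low_rank:.2f}")
```

Computed per layer, a summary like this could be tracked across depth or used as a diagnostic during training, though whether it matches the properties the study has in mind is not stated in the text.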

Practical Solutions for Leveraging AI

Implementing AI in your company can redefine the way you work. It’s essential to identify automation opportunities, define KPIs, select an AI solution, and implement it gradually. Connect with us at hello@itinai.com for AI KPI management advice and continuous insights on leveraging AI.