InternLM2.5-7B-Chat: Open Sourcing Large Language Models with Unmatched Reasoning, Long-Context Handling, and Enhanced Tool Use

Practical Solutions and Value Highlights

InternLM has introduced InternLM2.5-7B-Chat, a large language model now available in GGUF format and aimed at applications in both research and real-world settings.
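GGUF is the single-file model format used by llama.cpp. To make the format a little more concrete, here is a minimal sketch that checks the fixed-size header of a downloaded file. It assumes the GGUF v2/v3 layout: the magic bytes `GGUF`, a uint32 version, a uint64 tensor count, and a uint64 metadata key-value count, all little-endian. The function names, file path, and sample values are hypothetical and for illustration only.

```python
import struct
from typing import NamedTuple


class GGUFHeader(NamedTuple):
    version: int
    tensor_count: int
    metadata_kv_count: int


def read_gguf_header(data: bytes) -> GGUFHeader:
    """Parse the fixed 24-byte GGUF header (assumes little-endian v2/v3 layout)."""
    if len(data) < 24:
        raise ValueError("too short to be a GGUF header")
    # 4-byte magic, uint32 version, uint64 tensor count, uint64 metadata KV count
    magic, version, tensor_count, kv_count = struct.unpack_from("<4sIQQ", data, 0)
    if magic != b"GGUF":
        raise ValueError(f"not a GGUF file (magic={magic!r})")
    return GGUFHeader(version, tensor_count, kv_count)


def read_gguf_header_from_file(path: str) -> GGUFHeader:
    # Only the first 24 bytes are read, so multi-GB model files are never loaded.
    with open(path, "rb") as f:
        return read_gguf_header(f.read(24))


if __name__ == "__main__":
    # Synthetic header bytes, not taken from any real model file.
    sample = b"GGUF" + struct.pack("<IQQ", 3, 10, 5)
    print(read_gguf_header(sample))
```

Reading only the header is a cheap way to catch a truncated or mislabeled download before handing the file to an inference runtime.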

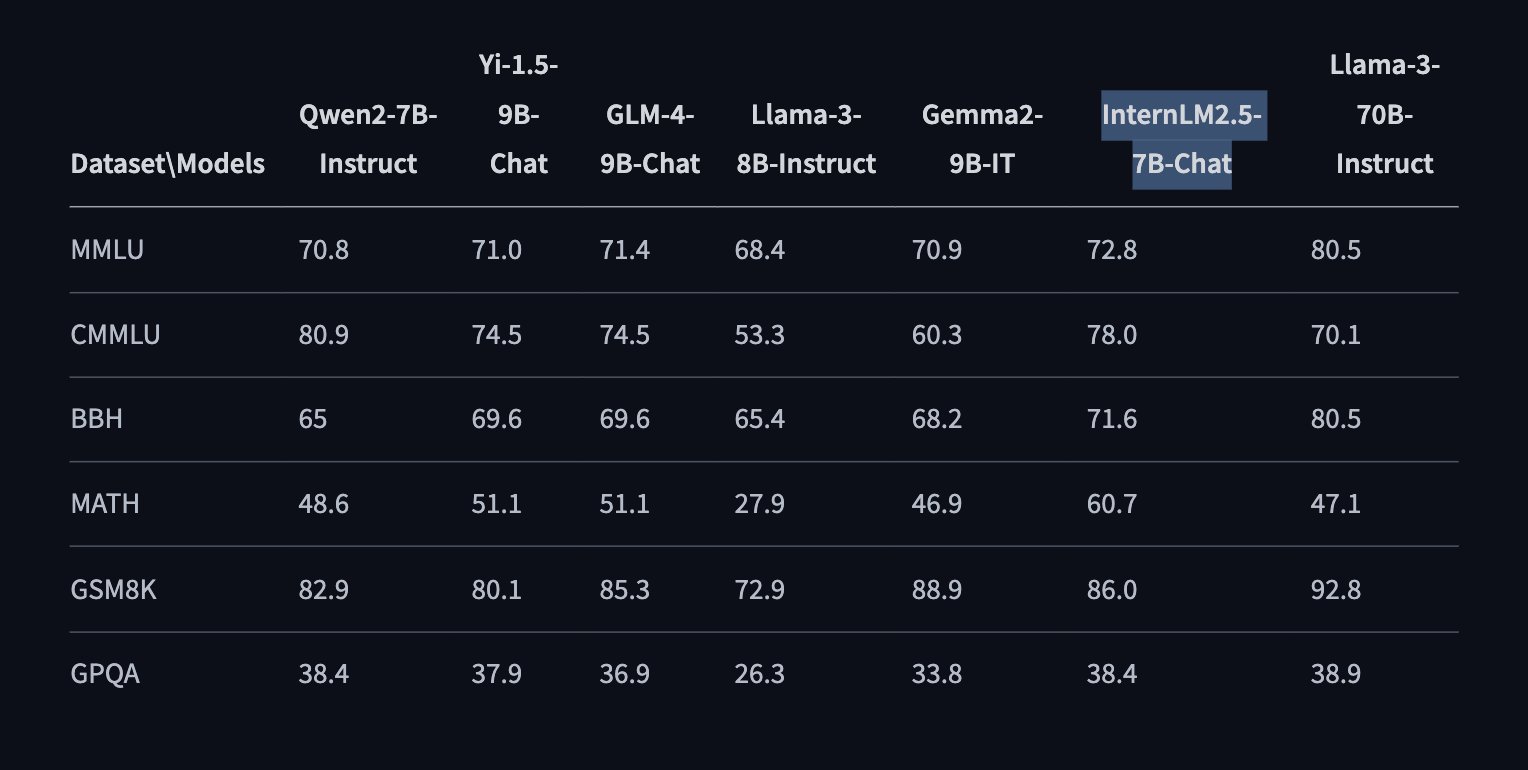

The release includes a 7-billion-parameter base model and a chat model tailored for practical scenarios. According to InternLM, the model achieves state-of-the-art reasoning performance, particularly in mathematical reasoning, where it outperforms models such as Llama3 and Gemma2-9B.

InternLM2.5-7B-Chat handles long contexts well, which makes it effective at retrieving information from lengthy documents. When served with LMDeploy, it supports inference over contexts up to 1M tokens long.
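A quick back-of-the-envelope calculation shows why 1M-token inference is demanding: the key/value (KV) cache grows linearly with context length. The sketch below assumes an InternLM2.5-7B-style shape of 32 layers, 8 KV heads (grouped-query attention), head dimension 128, and a 2-byte FP16/BF16 cache. These architecture numbers are assumptions to check against the model's `config.json`, and the helper name is hypothetical.

```python
def kv_cache_bytes(num_tokens: int, num_layers: int, num_kv_heads: int,
                   head_dim: int, bytes_per_elem: int = 2) -> int:
    """Memory for one sequence's key and value caches.

    The leading factor of 2 accounts for storing both keys and values at every layer.
    """
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_elem * num_tokens


# Assumed model shape (verify against the actual checkpoint configuration).
ASSUMED_SHAPE = dict(num_layers=32, num_kv_heads=8, head_dim=128)

if __name__ == "__main__":
    GiB = 1024 ** 3
    for tokens in (32_768, 262_144, 1_048_576):
        size = kv_cache_bytes(tokens, **ASSUMED_SHAPE)
        print(f"{tokens:>9,} tokens -> {size / GiB:6.1f} GiB of KV cache")
```

Under these assumptions the cache costs 128 KiB per token, or 128 GiB at 1,048,576 tokens, before counting the model weights. This is why million-token inference usually requires spreading the model across several large GPUs.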

The release also includes an installation guide, model download instructions, and examples of model inference and service deployment, so users can get started quickly.
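For service deployment, LMDeploy can expose the model through an OpenAI-compatible HTTP API, for example with `lmdeploy serve api_server internlm/internlm2_5-7b-chat --server-port 23333`. The minimal client below assumes such a server is running locally on port 23333 and uses only the Python standard library to call the `/v1/chat/completions` endpoint. The host, port, model name, and helper function are assumptions to adapt to your own deployment.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, user_message: str,
                       temperature: float = 0.8) -> urllib.request.Request:
    """Build a POST request for an OpenAI-compatible /v1/chat/completions endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "temperature": temperature,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical endpoint and model name; adjust them to match your server.
    req = build_chat_request("http://localhost:23333", "internlm2_5-7b-chat",
                             "Briefly introduce yourself.")
    with urllib.request.urlopen(req, timeout=120) as resp:
        reply = json.load(resp)
    print(reply["choices"][0]["message"]["content"])
```

Building the request separately from sending it keeps the payload easy to inspect and test without a running server.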

InternLM2.5-7B-Chat also offers stronger tool use. It can gather information from more than 100 web pages, and the corresponding implementation is slated for release in Lagent. The model also shows improved tool-related capabilities in instruction following, tool selection, and reflection.

Users can run batched offline inference on the quantized model with LMDeploy, whose quantized inference is reported to be up to 2.4x faster than FP16 on compatible NVIDIA GPUs. This makes it an efficient option for deployment.
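One intuition behind the quantization speedup is that the GPU reads far fewer bytes of weights per decoding step. The sketch below estimates weight storage for FP16 and for 4-bit weights. It assumes 4-bit weight-only quantization, since LMDeploy's 2.4x figure refers to its 4-bit inference, and a nominal 7 billion parameters. It also ignores the small overhead of quantization scales. The helper name is hypothetical.

```python
def weight_memory_gib(num_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in GiB, ignoring quantization scales and zero-points."""
    return num_params * bits_per_weight / 8 / 1024 ** 3


# Assumed nominal parameter count; check the actual checkpoint for the exact figure.
NUM_PARAMS = 7e9

if __name__ == "__main__":
    fp16 = weight_memory_gib(NUM_PARAMS, 16)
    int4 = weight_memory_gib(NUM_PARAMS, 4)
    print(f"FP16 weights:  {fp16:.1f} GiB")
    print(f"4-bit weights: {int4:.1f} GiB ({fp16 / int4:.0f}x smaller)")
```

The storage ratio is 4x, while the reported speedup is lower (up to 2.4x). Not all decoding time is spent reading weights, so memory savings do not translate one-to-one into throughput.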

With its reasoning ability, long-context handling, and tool use, InternLM2.5-7B-Chat is positioned to be a useful resource for both research and practical applications.