Challenges with Large Language Models (LLMs)

Large language models (LLMs) are essential for tasks such as machine translation, text summarization, and conversational AI. However, their complexity makes them resource-intensive and difficult to deploy on systems with limited computing power.

Computational Demands

The main issue with LLMs is their high computational cost. These models have billions of parameters, so training and fine-tuning them requires resources that many practitioners cannot access. Parameter-efficient fine-tuning (PEFT) methods reduce this cost, but they often do so at the expense of performance. The goal is to lower resource demands without sacrificing accuracy.

Innovative Solutions from Intel Labs

Researchers at Intel Labs have developed a method that combines low-rank adaptation (LoRA) with neural architecture search (NAS). The approach aims to improve both efficiency and performance, addressing the limitations of traditional fine-tuning methods.
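
To make the LoRA half of this combination concrete, here is a minimal pure-Python sketch of a LoRA-style linear layer. It follows the standard LoRA formulation (a frozen weight `W` plus a trainable low-rank update `B A`, scaled by `alpha / r`) and is not Intel's code; the helper names `matvec`, `lora_forward`, and `trainable_params`, and the 4096 x 4096, rank-8 example, are illustrative assumptions.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, x: Vector) -> Vector:
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


def lora_forward(w: Matrix, a: Matrix, b: Matrix, x: Vector, alpha: float) -> Vector:
    """Compute y = W x + (alpha / r) * B (A x).

    W (d_out x d_in) stays frozen; only A (r x d_in) and B (d_out x r) are trained.
    """
    r = len(a)                          # adapter rank = number of rows in A
    base = matvec(w, x)                 # frozen pretrained path
    update = matvec(b, matvec(a, x))    # low-rank path: d_in -> r -> d_out
    scale = alpha / r                   # standard LoRA scaling factor
    return [y + scale * u for y, u in zip(base, update)]


def trainable_params(d_out: int, d_in: int, r: int) -> Tuple[int, int]:
    """Trainable parameters for one weight matrix: (full fine-tuning, LoRA)."""
    return d_out * d_in, r * (d_out + d_in)


if __name__ == "__main__":
    full, lora = trainable_params(4096, 4096, 8)
    print(full, lora, f"{100 * lora / full:.2f}%")  # prints: 16777216 65536 0.39%
```

For a single 4096 x 4096 weight at rank 8, LoRA trains 65,536 parameters instead of 16,777,216, about 0.39% of the full count, which is why it is attractive when compute is limited.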

LoNAS: A New Framework

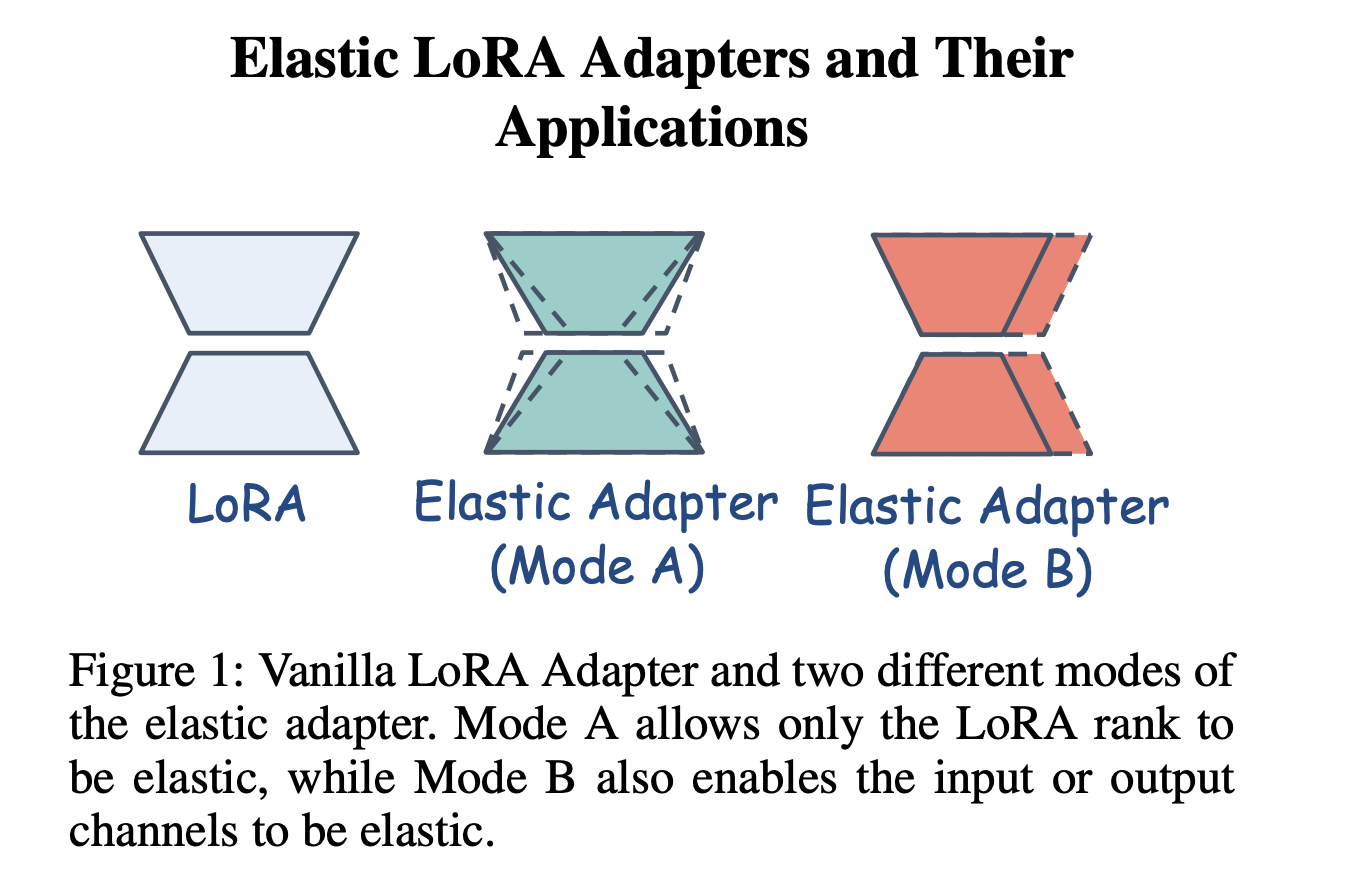

The framework, named LoNAS (Low-rank Neural Architecture Search), fine-tunes LLMs with elastic LoRA adapters. Instead of fully fine-tuning the model, LoNAS activates only selected parts of it, reducing unnecessary computation. Because the adapters are elastic, their size (for example, the adapter rank) can vary with the needs of the model, which lets LoNAS balance efficiency against performance.
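
The weight-sharing idea behind elastic adapters can be sketched under a few stated assumptions: all candidate ranks share one largest adapter, a rank-`r` sub-adapter is simply a slice of it, and a search picks per-layer ranks that fit a parameter budget. The rank choices, the budget, and the `score` callable are hypothetical; in practice `score` would evaluate each sub-network on validation data. LoNAS's actual search space and search strategy are not reproduced here.

```python
from itertools import product
from typing import Callable, List, Optional, Sequence, Tuple

Matrix = List[List[float]]


def slice_adapter(a_max: Matrix, b_max: Matrix, r: int) -> Tuple[Matrix, Matrix]:
    """Return the rank-r sub-adapter that shares weights with the largest one:
    the first r rows of A (r x d_in) and the first r columns of B (d_out x r)."""
    if not 1 <= r <= len(a_max):
        raise ValueError("rank must be between 1 and the maximum rank")
    return a_max[:r], [row[:r] for row in b_max]


def adapter_params(d_out: int, d_in: int, r: int) -> int:
    """Parameters in a rank-r adapter for a d_out x d_in weight."""
    return r * (d_out + d_in)


def search_ranks(
    layer_shapes: Sequence[Tuple[int, int]],
    rank_choices: Sequence[int],
    budget: int,
    score: Callable[[Tuple[int, ...]], float],
) -> Tuple[Tuple[int, ...], float]:
    """Exhaustively try every per-layer rank combination and return the
    best-scoring one whose total adapter size fits within the budget."""
    best_cfg: Optional[Tuple[int, ...]] = None
    best_score = float("-inf")
    for cfg in product(rank_choices, repeat=len(layer_shapes)):
        cost = sum(adapter_params(d_out, d_in, r)
                   for (d_out, d_in), r in zip(layer_shapes, cfg))
        if cost > budget:
            continue  # sub-network too large for the budget
        s = score(cfg)
        if s > best_score:
            best_cfg, best_score = cfg, s
    if best_cfg is None:
        raise ValueError("no rank configuration fits the budget")
    return best_cfg, best_score
```

For example, `search_ranks([(64, 64), (64, 64)], [4, 8, 16], budget=3072, score=sum)` returns `((8, 16), 24)`; here `sum` is a stand-in that simply prefers larger ranks. Exhaustive search is used only because this toy space is tiny: the number of configurations grows exponentially with the number of layers, which is why NAS methods typically rely on heuristic or evolutionary search instead.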

Performance Benefits

LoNAS delivers substantial efficiency gains: up to 1.4x faster inference and roughly 80% fewer model parameters. For instance, fine-tuning LLaMA-7B with LoNAS resulted in an average accuracy of 65.8%. In other experiments, applying LoNAS to different models improved accuracy while preserving these efficiency gains.
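
The headline ratios can be restated in absolute terms with simple arithmetic (this only rephrases the reported figures and is not an additional result). The symbols are introduced here for illustration: $t_{\text{base}}$ and $t_{\text{LoNAS}}$ are inference latencies for the same input, and $N$ is the parameter count of the original model.

```latex
\frac{t_{\text{base}}}{t_{\text{LoNAS}}} = 1.4
\;\Rightarrow\;
t_{\text{LoNAS}} = \frac{t_{\text{base}}}{1.4} \approx 0.714\, t_{\text{base}}
\quad (\approx 28.6\%\ \text{lower latency}),
\qquad
N_{\text{LoNAS}} \approx (1 - 0.8)\, N = 0.2\, N
```

Both figures are best cases ("up to" and "around"), so the savings for a particular model may be smaller.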

Further Enhancements: Shears and SQFT

The framework has since been extended with two follow-up approaches, Shears and SQFT. Shears improves fine-tuning by focusing the search on adapter rank, while SQFT uses quantization techniques to maintain efficiency during fine-tuning.
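
Quantization, which SQFT relies on, stores weights as low-precision integers plus a scale factor. The sketch below shows generic symmetric per-tensor int8 quantization to illustrate the concept only; it is not SQFT's actual scheme, whose bit width, grouping, and handling of adapters may differ, and the function names are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]
    using one shared scale, with round-to-nearest."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid dividing by zero
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the integers and the scale."""
    return [v * scale for v in q]
```

Storing each weight in 8 bits instead of 16-bit floating point halves weight memory, at the cost of a rounding error of at most half a scale step per weight.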

Transforming LLM Optimization

Combining LoRA and NAS offers a new way to optimize LLMs. This research shows that significant efficiency gains are possible without losing performance, making LLMs more accessible in resource-constrained environments.

Explore More

Check out the Paper and GitHub Page for more information.
