Transforming Natural Language Processing with AI Solutions

Transformer architectures have reshaped Natural Language Processing (NLP), enabling machines to understand and generate human language far more effectively. Large Language Models (LLMs) built on these architectures excel at applications such as chatbots, content creation, and summarization. However, deploying LLMs efficiently in real-world settings is difficult because of their high resource requirements, especially for tasks that generate long sequences of text.

Challenges in LLM Deployment

A major challenge with LLMs is slow inference. Text is generated one token at a time, and each step must read the model's weights from memory, so speed is limited both by memory bandwidth and by this sequential process. This makes LLMs poorly suited to latency-sensitive applications and to devices with limited processing power, such as personal computers and smartphones. As users expect faster responses, resolving these speed and resource constraints is crucial.

Introducing Speculative Decoding (SD)

One effective solution is Speculative Decoding (SD), which speeds up LLM inference without sacrificing output quality. SD uses a small, fast draft model to propose several upcoming tokens, which the larger target model then verifies in parallel; only tokens consistent with the target model are kept, so quality matches that of the target model alone. Despite its promise, SD adoption has been limited by the scarcity of efficient draft models that share the target LLM's vocabulary.
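The draft-then-verify loop can be illustrated with a minimal, self-contained sketch. It assumes greedy (argmax) decoding and uses two hypothetical toy functions, `toy_target` and `toy_draft`, in place of real neural networks; `speculative_decode` and `greedy_decode` are likewise illustrative names, not FastDraft's code or any library's API. The original sampling-based form of speculative decoding uses a probabilistic accept/reject rule rather than the exact-match check shown here.

```python
from typing import Callable, List

# Hypothetical stand-in for a language model: maps a token prefix to its
# greedy next token. Real draft/target models would be neural networks.
Model = Callable[[List[int]], int]


def greedy_decode(model: Model, prompt: List[int], max_new: int) -> List[int]:
    """Plain autoregressive greedy decoding: one model call per new token."""
    seq = list(prompt)
    for _ in range(max_new):
        seq.append(model(seq))
    return seq


def speculative_decode(target: Model, draft: Model, prompt: List[int],
                       max_new: int, k: int = 4) -> List[int]:
    """Greedy speculative decoding sketch (illustrative, not FastDraft's code)."""
    seq = list(prompt)
    target_len = len(prompt) + max_new
    while len(seq) < target_len:
        # 1) The cheap draft model proposes k tokens autoregressively.
        proposal: List[int] = []
        for _ in range(k):
            proposal.append(draft(seq + proposal))
        # 2) The target predicts the next token at every drafted position.
        #    A real system does this in ONE batched forward pass; here it is
        #    called once per prefix for clarity.
        target_preds = [target(seq + proposal[:i]) for i in range(k + 1)]
        # 3) Accept drafted tokens while they match the target's choice.
        n_accept = 0
        while n_accept < k and proposal[n_accept] == target_preds[n_accept]:
            n_accept += 1
        # 4) Keep the accepted tokens plus one token from the target: a
        #    correction on mismatch, or a bonus token if all k matched.
        seq.extend(proposal[:n_accept])
        seq.append(target_preds[n_accept])
    # The last iteration may overshoot; trim to the requested length.
    return seq[:target_len]


def toy_target(seq: List[int]) -> int:
    """Hypothetical deterministic 'target model' over a 10-token vocabulary."""
    return (sum(seq) * 7 + len(seq)) % 10


def toy_draft(seq: List[int]) -> int:
    """Hypothetical 'draft model': agrees with the target except at every
    third position, which forces some rejections."""
    guess = toy_target(seq)
    return (guess + 1) % 10 if len(seq) % 3 == 0 else guess


prompt = [1, 2, 3]
fast = speculative_decode(toy_target, toy_draft, prompt, max_new=12, k=4)
assert fast == greedy_decode(toy_target, prompt, max_new=12)
```

Because every kept token is one the target would have chosen greedily on its own, the final assertion holds: the output is identical to plain greedy decoding with the target. The savings in a real deployment come from step 2, where one target forward pass checks all k drafted positions at once; the better the draft model agrees with the target, the more tokens each pass yields.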

FastDraft: Efficient Training of Draft Models

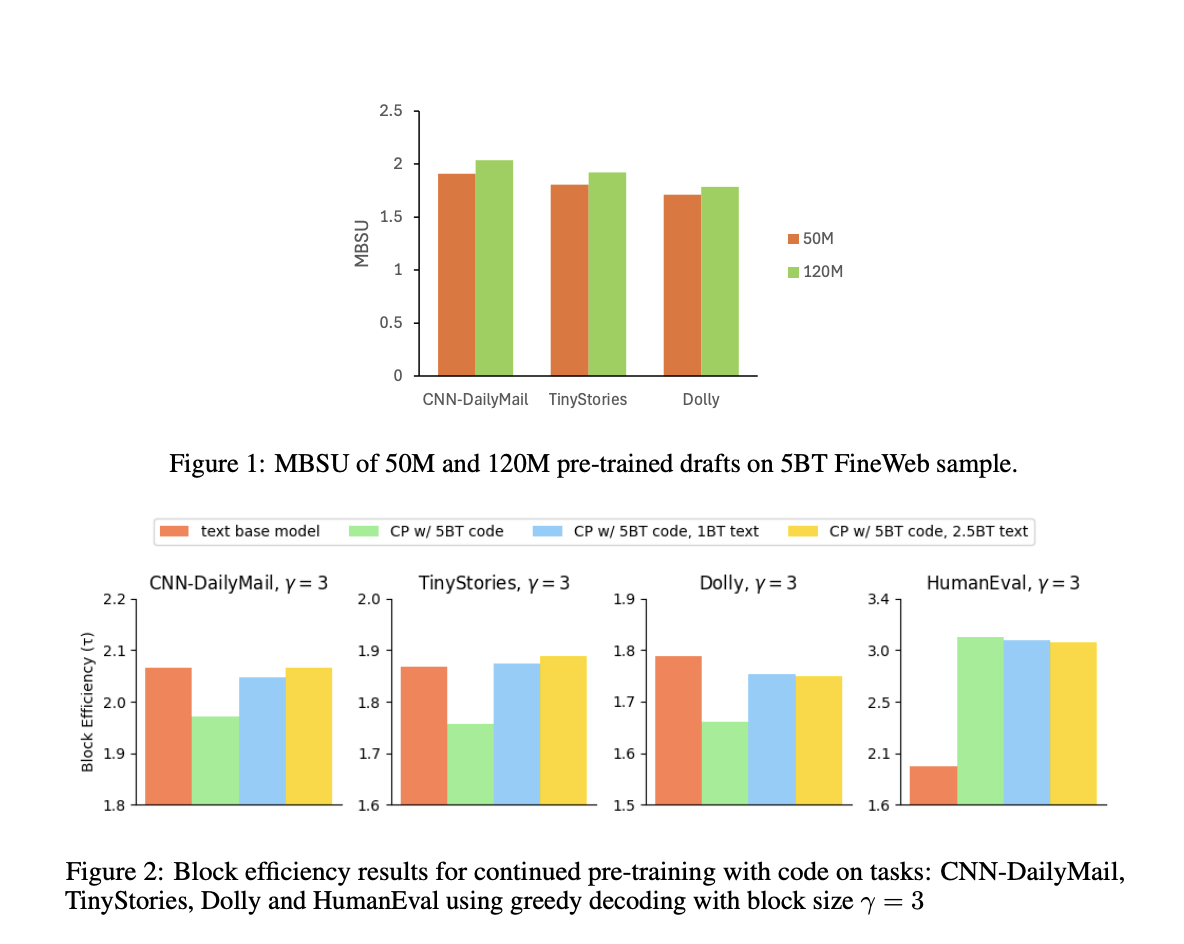

Researchers at Intel Labs have developed FastDraft, a framework that efficiently trains draft models compatible with various LLMs, including Phi-3-mini and Llama-3.1-8B. FastDraft combines a pre-training stage with a fine-tuning (alignment) stage and scales to pre-training datasets of up to 10 billion tokens, which helps the resulting draft models perform well across a range of tasks.

Key Features of FastDraft

- Efficient Pre-Training: Draft models learn from vast datasets, enhancing their predictive abilities.

- Structured Alignment: Draft models are fine-tuned on synthetic datasets generated by the target model, so their predictions more closely mirror the target's.

- Minimal Hardware Requirements: FastDraft runs efficiently on standard hardware setups, fostering broader accessibility.

- Significant Performance Gains: Speculative decoding with FastDraft models delivered up to a 3x speedup on code tasks and up to 2x on summarization tasks.

Impact and Future Insights

The results show promise for the future of LLM technology:

- High Acceptance Rates: The Phi-3-mini draft model achieved a 67% acceptance rate, meaning most of its drafted tokens were accepted by the target model, a sign of strong alignment between the two.

- Training Speed: Draft models were trained in under 24 hours on standard servers, easing resource burdens.

- Scalability: FastDraft is versatile, capable of training models for diverse applications.
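To put an acceptance rate in perspective, a common back-of-the-envelope model from the speculative decoding literature assumes each drafted token is accepted independently with probability alpha. With k drafted tokens per step, one target forward pass then yields on average 1 + alpha + ... + alpha^k = (1 - alpha^(k+1)) / (1 - alpha) tokens. The sketch below evaluates this model; reading FastDraft's 67% figure as this per-token alpha, as well as the draft length k and the draft-to-target cost ratio, are assumptions for illustration, so the printed numbers are not results reported for FastDraft.

```python
def expected_tokens_per_pass(alpha: float, k: int) -> float:
    """Expected tokens produced per target forward pass.

    Assumes each of the k drafted tokens is accepted independently with
    probability alpha, and that the target always contributes one token.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    if alpha == 1.0:
        return float(k + 1)  # every drafted token accepted, plus one bonus
    # Closed form of the geometric series 1 + alpha + alpha^2 + ... + alpha^k
    return (1.0 - alpha ** (k + 1)) / (1.0 - alpha)


def estimated_speedup(alpha: float, k: int, cost_ratio: float) -> float:
    """Rough wall-clock speedup over plain decoding with the target alone.

    cost_ratio is the (hypothetical) time of one draft step divided by the
    time of one target forward pass; one iteration costs k draft steps plus
    one target pass.
    """
    return expected_tokens_per_pass(alpha, k) / (k * cost_ratio + 1.0)


alpha = 0.67       # assumption: 67% read as a per-token acceptance probability
k = 4              # hypothetical draft length
cost_ratio = 0.05  # hypothetical draft/target cost ratio
print(round(expected_tokens_per_pass(alpha, k), 2))         # 2.62
print(round(estimated_speedup(alpha, k, cost_ratio), 2))    # 2.18
```

The model makes the trade-off visible: a higher acceptance rate raises the number of tokens gained per expensive target pass, while a slower draft model (larger cost_ratio) eats into that gain. Real speedups also depend on hardware, batching, and how acceptance varies across tokens, which this simple model ignores.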

In Conclusion

FastDraft addresses a key obstacle to speculative decoding, the lack of compatible draft models, by offering a scalable and resource-efficient way to train them. The resulting inference speedups make it a strong option for deploying LLMs on devices with limited resources.

For deeper insights, check out the Paper, the Model on Hugging Face, and the Code on GitHub.

Elevate Your Company with AI Solutions

Consider Intel Labs' FastDraft as part of keeping your business competitive:

- Identify Automation Opportunities: Find customer interaction points that could benefit from AI.

- Define KPIs: Ensure your AI initiatives produce measurable outcomes.

- Select the Right AI Solution: Choose tools that match your needs.

- Implement Gradually: Start with pilot projects, analyze results, and scale AI usage carefully.