Practical Solutions and Value of Img-Diff Dataset

Enhancing Multimodal Language Models

Multimodal Large Language Models (MLLMs) have evolved to improve text-image interaction through several interface designs. Models like Flamingo, IDEFICS, BLIP-2, and Qwen-VL connect the vision encoder to the language model through learnable queries, while LLaVA and MGM use projection-based interfaces. LLaMA-Adapter and LaVIN focus on parameter-efficient tuning.
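To make the idea of a projection-based interface concrete, here is a minimal sketch in plain Python. It assumes a single linear layer that maps each visual patch feature into the language model's embedding space. The function names, dimensions, and random initialisation are illustrative assumptions, not code from LLaVA, MGM, or any other model named above.

```python
import random


def make_projection(vision_dim, llm_dim, seed=0):
    """Return a randomly initialised (vision_dim x llm_dim) weight matrix and a zero bias.

    Hypothetical helper: in a real model these parameters are learned.
    """
    rng = random.Random(seed)
    weight = [[rng.gauss(0.0, 0.02) for _ in range(llm_dim)] for _ in range(vision_dim)]
    bias = [0.0] * llm_dim
    return weight, bias


def project_patches(patch_features, weight, bias):
    """Map every visual patch feature vector into the LLM embedding space."""
    projected = []
    for patch in patch_features:
        row = [
            sum(p * w_row[j] for p, w_row in zip(patch, weight)) + bias[j]
            for j in range(len(bias))
        ]
        projected.append(row)
    return projected


# Toy sizes chosen for readability only.
num_patches, vision_dim, llm_dim = 4, 8, 16
rng = random.Random(1)
patches = [[rng.random() for _ in range(vision_dim)] for _ in range(num_patches)]

weight, bias = make_projection(vision_dim, llm_dim)
visual_tokens = project_patches(patches, weight, bias)

# One projected token per input patch, each with the LLM embedding width.
print(len(visual_tokens), len(visual_tokens[0]))  # prints "4 16"
```

In this sketch the projection keeps one token per image patch. A learnable-query interface would instead compress the patches into a fixed number of query outputs before they reach the language model.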

Datasets significantly impact MLLM effectiveness, and recent studies have refined visual instruction tuning datasets to improve performance across question-answering tasks. Models fine-tuned on high-quality datasets with extensive task diversity perform well on image perception, reasoning, and OCR tasks.

The Img-Diff dataset focuses on image difference analysis, with the aim of strengthening MLLMs’ VQA proficiency and object localization capabilities. It builds on foundational work in the field, and models fine-tuned on it outperform state-of-the-art models on various image difference and VQA tasks. This highlights the importance of both high-quality data and evolving model architectures in improving MLLM performance.

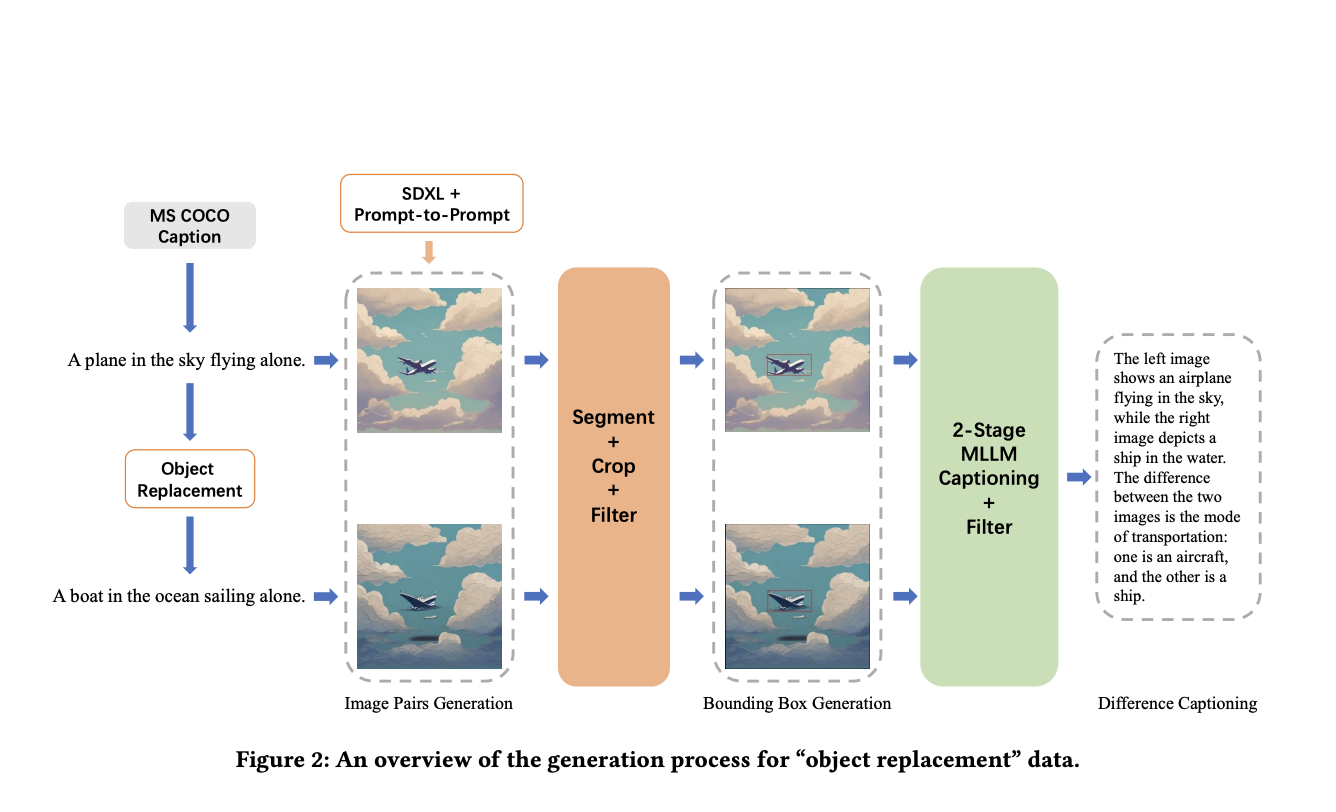

The researchers built the Img-Diff dataset through a systematic approach, creating 118,000 image pairs. They then used it to fine-tune state-of-the-art MLLMs such as LLaVA-1.5-7B and MGM-7B, improving performance on image difference tasks and VQA challenges.
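As a rough illustration of how an image pair could become a fine-tuning example, the sketch below turns one pair into a two-turn conversation record. The `ImagePairSample` fields, the `to_conversation` helper, the question wording, and the file names are all hypothetical. This is not the released Img-Diff schema, only one plausible shape for image difference data with a localized region.

```python
from dataclasses import dataclass


@dataclass
class ImagePairSample:
    """Hypothetical record for one image pair (not the official Img-Diff format)."""
    image_a: str      # path to the first image
    image_b: str      # path to the second, slightly different image
    bbox: tuple       # (x_min, y_min, x_max, y_max) of the differing region, in pixels
    difference: str   # text describing what differs inside the region


def to_conversation(sample, sample_id):
    """Convert a pair into a question/answer record for instruction tuning."""
    x0, y0, x1, y1 = sample.bbox
    question = (
        "<image>\n<image>\n"
        f"What differs between the two images inside the region [{x0}, {y0}, {x1}, {y1}]?"
    )
    return {
        "id": sample_id,
        "images": [sample.image_a, sample.image_b],
        "conversations": [
            {"from": "human", "value": question},
            {"from": "gpt", "value": sample.difference},
        ],
    }


# Made-up example values, for illustration only.
sample = ImagePairSample(
    image_a="pair_0_a.png",
    image_b="pair_0_b.png",
    bbox=(10, 20, 110, 220),
    difference="The dog in the first image is replaced by a cat in the second.",
)
record = to_conversation(sample, "pair-0")
print(record["conversations"][0]["value"])
```

Putting the bounding box in the question ties the answer to a specific region, which is one way such data could exercise both difference description and object localization.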

After fine-tuning on Img-Diff, LLaVA-1.5-7B and MGM-7B achieved new state-of-the-art scores on the Image-Editing-Request benchmark. The study emphasizes the effectiveness of targeted, high-quality datasets in improving MLLMs’ capabilities and encourages further exploration of fine-grained image recognition and multimodal learning.

AI Solutions for Business Growth

To evolve your company with AI and stay competitive, use Img-Diff for enhancing MLLMs through contrastive learning and image difference analysis. Identify automation opportunities, define KPIs, select an AI solution that aligns with your needs, and implement gradually. Connect with us at hello@itinai.com for AI KPI management advice and continuous insights into leveraging AI.

Discover how AI can redefine your sales processes and customer engagement. Explore solutions at itinai.com.


Arcee AI DistillKit Announcement

Arcee AI has released DistillKit, an open-source, easy-to-use tool transforming model distillation for creating efficient, high-performance small language models.
