Hugging Face Releases Open LLM Leaderboard 2: A Major Upgrade Featuring Tougher Benchmarks, Fairer Scoring, and Enhanced Community Collaboration for Evaluating Language Models

Addressing Benchmark Saturation

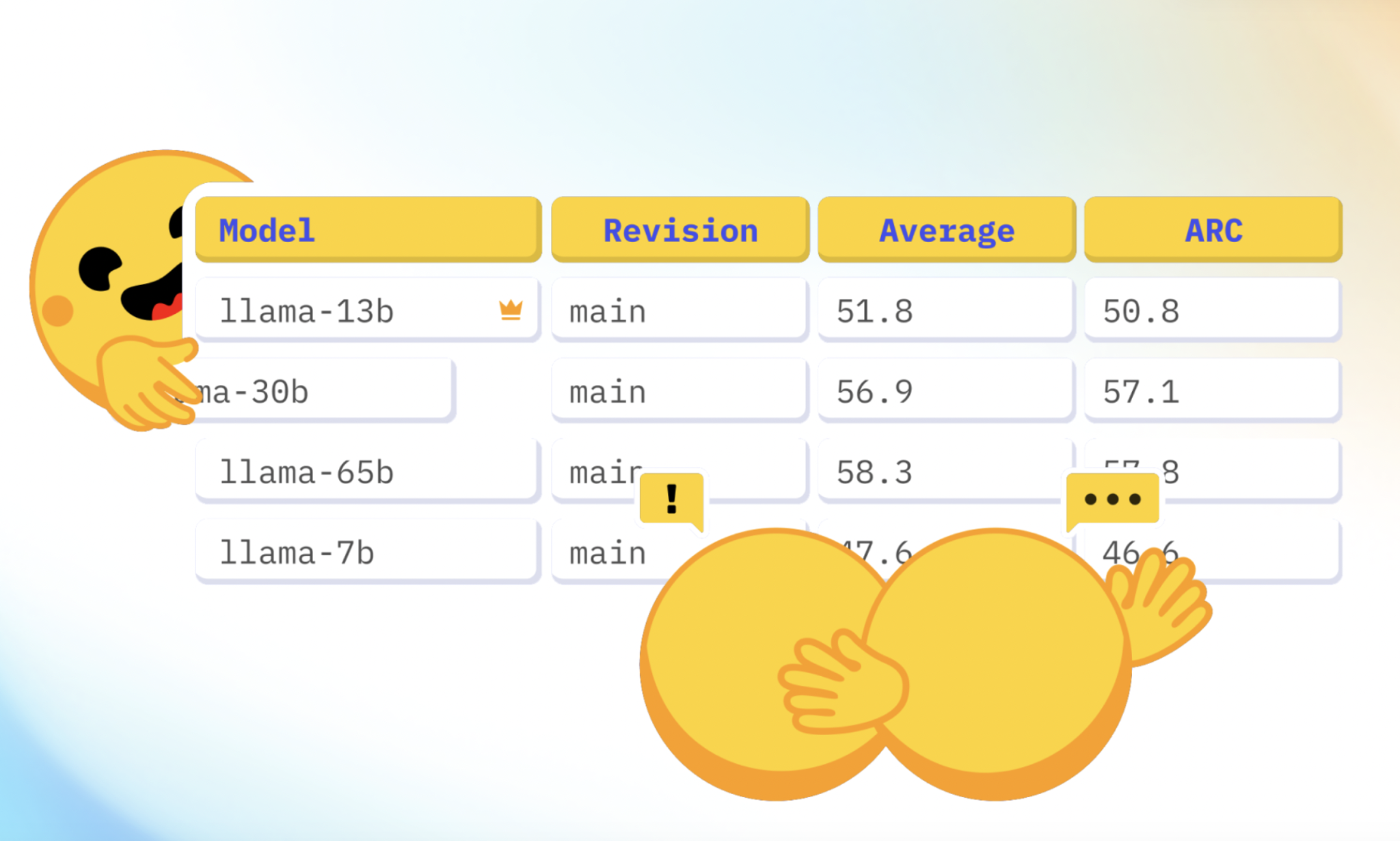

Hugging Face has upgraded the Open LLM Leaderboard to address the challenge of benchmark saturation. The new version offers more rigorous benchmarks and a fairer scoring system, reinvigorating the competitive landscape for language models.

Introduction of New Benchmarks

The Open LLM Leaderboard v2 introduces six new benchmarks to cover a range of model capabilities, ensuring more comprehensive evaluation of language models.

Fairer Rankings with Normalized Scoring

The new leaderboard adopts normalized scores for ranking models, ensuring a fairer comparison across different benchmarks and preventing any single benchmark from disproportionately influencing the final ranking.

Enhanced Reproducibility and Interface

The evaluation suite has been updated to improve reproducibility, and the interface has been significantly enhanced for a faster and more seamless user experience.

Prioritizing Community-Relevant Models

The new leaderboard introduces a “maintainer’s choice” category, highlighting high-quality models from various sources and prioritizing evaluations of the most useful models for the community.

Voting on Model Relevance

A voting system has been implemented to manage the high volume of model submissions, ensuring that the most anticipated models are evaluated first, reflecting the community’s interests.

AI Solutions for Business

To evolve your company with AI, consider identifying automation opportunities, defining KPIs, selecting an AI solution, and implementing gradually. Connect with us for AI KPI management advice and continuous insights into leveraging AI.

AI Solutions for Sales Processes and Customer Engagement

Discover how AI can redefine your sales processes and customer engagement. Explore solutions at itinai.com for continuous insights into leveraging AI.