Challenges in Competitive Programming

In competitive programming, human competitors and AI systems alike face distinctive challenges. Many existing AI models struggle to solve complex problems consistently. A common weakness is their difficulty with long reasoning processes, which can produce solutions that pass simple tests but fail under rigorous contest conditions. Current datasets also tend to capture only a small portion of the challenges found on competitive programming platforms such as CodeForces or in events like the International Olympiad in Informatics (IOI). This highlights the need for models that not only generate correct code but also follow the kind of logical reasoning that real competitions demand.

Introducing OlympicCoder

Hugging Face has released OlympicCoder, a series of models designed specifically for olympiad-level programming challenges. The series includes two fine-tuned models, OlympicCoder-7B and OlympicCoder-32B, trained on the CodeForces-CoTs dataset of nearly 100,000 high-quality reasoning samples. On IOI problems, these models outperform proprietary systems such as Claude 3.7 Sonnet. Because their training data includes detailed explanations and multiple correct solutions, the OlympicCoder models are well equipped for coding tasks that require advanced reasoning.

Technical Details and Benefits

Both OlympicCoder-7B and OlympicCoder-32B are built on Qwen2.5-Coder Instruct models and trained on a refined version of the CodeForces dataset. OlympicCoder-7B, with approximately 7.6 billion parameters, uses a relatively high learning rate of 4e-5 together with a cosine learning rate schedule, choices that help the model retain long reasoning chains. OlympicCoder-32B, with around 32.8 billion parameters, relies on distributed training to support a long context window. These adjustments strengthen the models' ability to handle the intricate reasoning sequences that competitive programming requires.
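The cosine learning rate schedule mentioned above can be sketched in a few lines of plain Python. This is a minimal illustration, not the OlympicCoder training configuration: only the 4e-5 peak learning rate comes from the text, while the optional linear warmup, the `min_lr` floor, and the function name `cosine_lr` are assumptions added for illustration. Training libraries such as Hugging Face Transformers provide a ready-made equivalent (`get_cosine_schedule_with_warmup`).

```python
import math


def cosine_lr(step: int, total_steps: int, peak_lr: float = 4e-5,
              warmup_steps: int = 0, min_lr: float = 0.0) -> float:
    """Learning rate at `step`: optional linear warmup, then cosine decay.

    Hypothetical helper for illustration; warmup_steps and min_lr are
    assumed knobs, not settings reported for OlympicCoder.
    """
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    if warmup_steps > 0 and step < warmup_steps:
        # Linear warmup: ramp from peak_lr / warmup_steps up to peak_lr.
        return peak_lr * (step + 1) / warmup_steps
    # Fraction of the decay phase completed, clamped to [0, 1].
    decay_steps = max(1, total_steps - warmup_steps)
    progress = min(1.0, (step - warmup_steps) / decay_steps)
    # Cosine factor falls smoothly from 1 (start of decay) to 0 (end).
    cosine = 0.5 * (1.0 + math.cos(math.pi * progress))
    return min_lr + (peak_lr - min_lr) * cosine


if __name__ == "__main__":
    for s in (0, 250, 500, 750, 1000):
        print(s, cosine_lr(s, total_steps=1000))
```

With `total_steps=1000`, no warmup, and the default `min_lr=0.0`, the rate starts at 4e-5, falls to half of that at step 500, and reaches zero at step 1000, decaying slowly at the start and end and fastest in the middle.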

Results and Insights

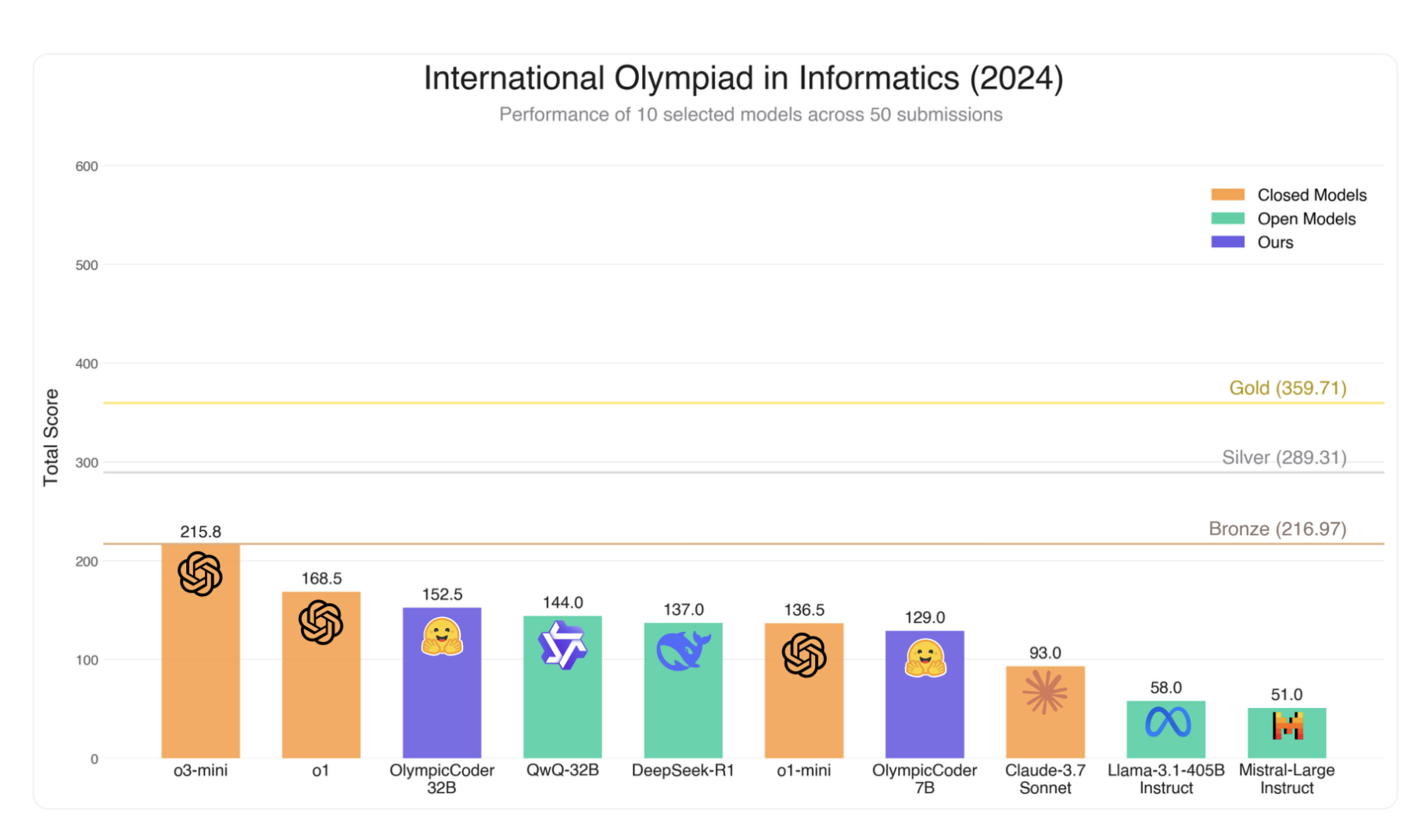

The models were evaluated on benchmarks such as LiveCodeBench and the IOI 2024 problems, with real contest conditions simulated by generating multiple submissions for each subtask. Both models perform robustly, and OlympicCoder-32B even exceeds some leading closed-source systems. Key factors behind these results include training without sample packing, using a higher learning rate, and relying on a carefully curated dataset that captures the complexity of competitive problems.
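To make "multiple submissions for each subtask" concrete, here is a sketch of IOI-style scoring, assuming the IOI convention that each subtask keeps the best result achieved by any submission to the problem. The function name, the input layout (one dictionary per submission mapping a subtask id to the fraction of that subtask's points earned), and the optional `max_submissions` cap are hypothetical choices for illustration; this is not the evaluation harness used for OlympicCoder.

```python
from typing import Dict, List, Optional


def ioi_problem_score(
    subtask_points: Dict[str, float],
    submissions: List[Dict[str, float]],
    max_submissions: Optional[int] = None,
) -> float:
    """Score one problem, keeping each subtask's best result across submissions.

    subtask_points: maximum points available for each subtask id.
    submissions: per-submission mapping from subtask id to the fraction of
        that subtask's points earned (1.0 = all tests passed, 0.0 = failed).
    max_submissions: hypothetical cap; only the first N submissions count.
    """
    counted = submissions if max_submissions is None else submissions[:max_submissions]
    total = 0.0
    for subtask, points in subtask_points.items():
        # Best fraction any counted submission achieved (0.0 if none did).
        best = max((s.get(subtask, 0.0) for s in counted), default=0.0)
        total += points * best
    return total


if __name__ == "__main__":
    points = {"s1": 10.0, "s2": 30.0, "s3": 60.0}
    subs = [
        {"s1": 1.0, "s2": 0.0, "s3": 0.0},  # solves only the easiest subtask
        {"s1": 0.0, "s2": 1.0, "s3": 0.5},  # solves s2, half of s3
    ]
    print(ioi_problem_score(points, subs))
```

Here the first submission earns 10 points and the second earns 60, yet the problem scores 10 + 30 + 30 = 70 because each subtask keeps its best result. Under such rules, generating several candidate submissions per problem can raise the final score beyond what any single submission achieves.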

Conclusion

In summary, OlympicCoder marks a significant advance in open reasoning models for competitive programming. By performing strongly against larger, closed-source alternatives, OlympicCoder demonstrates the impact of careful dataset curation and fine-tuning. The project offers useful insights for researchers and practitioners and lays groundwork for future work on AI-driven problem-solving.

Explore Further

The OlympicCoder-7B and OlympicCoder-32B models are available on Hugging Face. All credit for this research goes to the researchers behind the project.