Advancements in Parallel Computing

Efficient Solutions for High-Performance Tasks

Parallel computing is evolving to meet the needs of demanding workloads such as deep learning and scientific simulations. Matrix multiplication is a key operation in this area and sits at the core of many computational workflows. Hardware innovations such as Tensor Core Units (TCUs) improve processing efficiency by accelerating dense multiplications of small matrices directly in hardware. TCUs are now being applied beyond neural networks, including to graph algorithms and sorting.

Challenges in Matrix-Based Computations

Despite these advancements, prefix sum (scan) algorithms, which compute the cumulative sums of a sequence, remain difficult to map onto matrix hardware. Traditional methods struggle to handle large datasets efficiently and suffer from high latency. Existing techniques based on the Parallel Random Access Machine (PRAM) model are effective for simpler tasks, but they are built around element-wise operations and so do not take full advantage of modern tensor core hardware.
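For readers new to the operation, a prefix sum replaces each element of a sequence with the sum of all elements up to and including it. The minimal Python sketch below uses an illustrative function name, `sequential_scan`, that does not come from the paper. It shows the baseline loop, in which every addition depends on the previous one; that dependency chain is what parallel scan algorithms try to shorten.

```python
def sequential_scan(xs):
    """Inclusive prefix sum: out[i] = xs[0] + xs[1] + ... + xs[i]."""
    out = []
    running = 0
    for x in xs:
        running += x          # depends on the previous iteration
        out.append(running)
    return out


print(sequential_scan([3, 1, 4, 1, 5]))  # [3, 4, 8, 9, 14]
```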

Innovative Solutions: MatMulScan

Researchers from Huawei Technologies have developed an algorithm called MatMulScan, designed specifically for TCUs. It computes prefix sums using matrix multiplications, which reduces computational depth and increases throughput. This makes it particularly useful for applications such as gradient boosting trees and parallel sorting. MatMulScan multiplies blocks of the input by constant matrices, such as a lower-triangular matrix of ones, so that each multiplication yields the local prefix sums of a block.
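One way to see how a matrix multiplication can produce local prefix sums is to multiply each block of the input by a lower-triangular matrix of ones. The sketch below is illustrative only: the helper names are hypothetical, and a pure-Python `matmul` stands in for the hardware matrix unit.

```python
def lower_triangular_ones(s):
    """s x s matrix with ones on and below the diagonal."""
    return [[1 if j <= i else 0 for j in range(s)] for i in range(s)]


def matmul(a, b):
    """Plain matrix product; a stand-in for a tensor core operation."""
    inner, cols = len(b), len(b[0])
    return [[sum(row[k] * b[k][j] for k in range(inner)) for j in range(cols)]
            for row in a]


def local_prefix_sums(xs, s):
    """Prefix sums computed independently inside each block of length s."""
    if len(xs) % s != 0:
        raise ValueError("len(xs) must be a multiple of s")
    num_blocks = len(xs) // s
    # Column b of X holds block b, so one product handles every block at once.
    X = [[xs[b * s + i] for b in range(num_blocks)] for i in range(s)]
    Y = matmul(lower_triangular_ones(s), X)
    return [Y[i][b] for b in range(num_blocks) for i in range(s)]


print(local_prefix_sums([1, 2, 3, 4, 5, 6, 7, 8], 4))
# [1, 3, 6, 10, 5, 11, 18, 26]
```

Row `i` of the triangular matrix selects the first `i + 1` entries of a block, so the product sums exactly the elements that belong to each local prefix.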

How MatMulScan Works

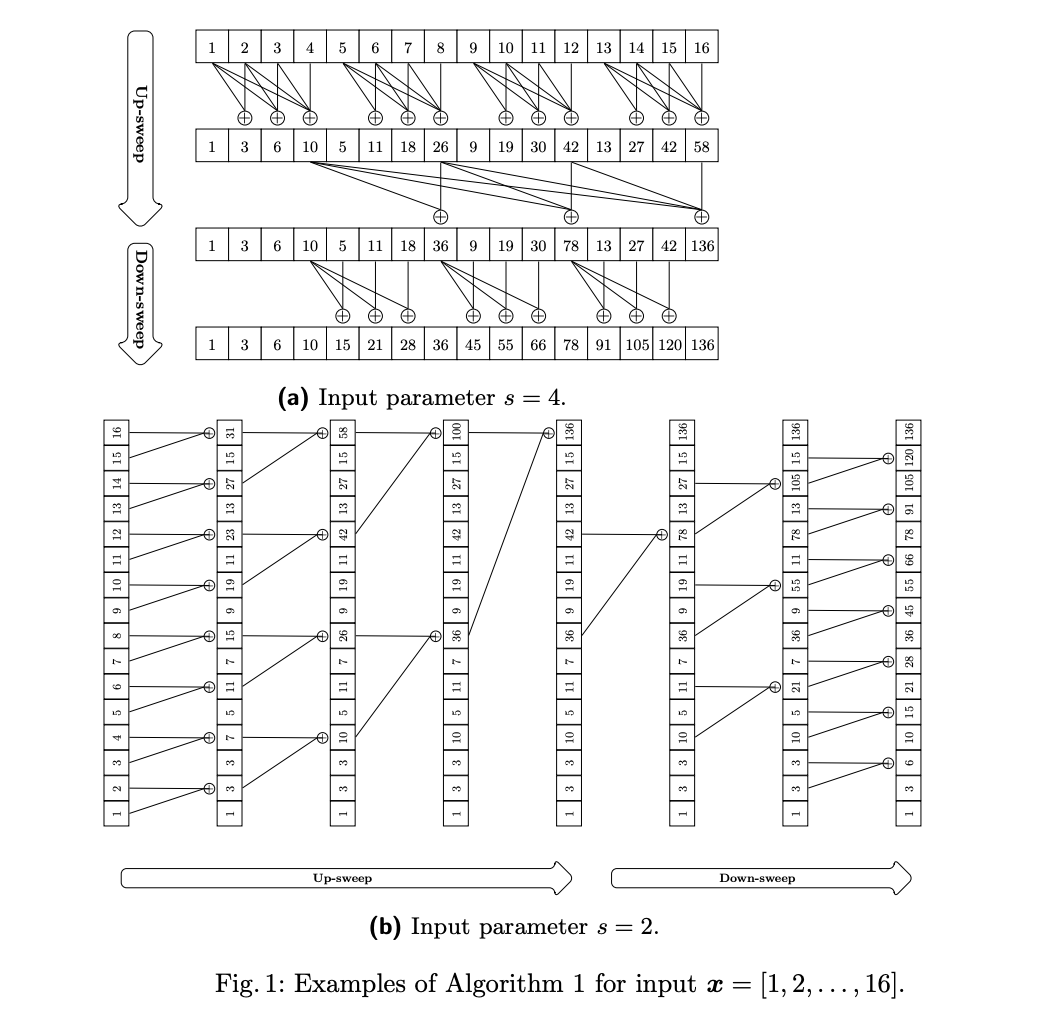

MatMulScan operates in two main phases:

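1. **Up-Sweep Phase**: Computes local prefix sums over blocks of elements at progressively larger strides, so that the last element of each block accumulates the sum of an ever-larger segment.

2. **Down-Sweep Phase**: Propagates these partial sums back to the remaining positions, correcting each local sum by adding the total of everything that precedes its block.

Together, the two phases keep the number of dependent steps small, which lowers latency and lets the algorithm scale to large datasets.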
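The two phases can be sketched in Python as follows. This is a simplified illustration under stated assumptions, not the authors' implementation: the input length is assumed to be a power of the block size `s`, all function names are hypothetical, and the "broadcast-add" matrix is one possible way to express the correction step as a matrix product.

```python
def matvec(m, v):
    """Matrix-vector product; a stand-in for one small hardware matmul."""
    return [sum(row[k] * v[k] for k in range(len(v))) for row in m]


def lower_ones(s):
    """Lower-triangular ones: turns s values into their prefix sums."""
    return [[1 if j <= i else 0 for j in range(s)] for i in range(s)]


def broadcast_add(s):
    """Keeps entry 0 (the carry) and adds it to every other entry."""
    return [[1 if j in (0, i) else 0 for j in range(s)] for i in range(s)]


def two_phase_scan(xs, s):
    """Inclusive prefix sum via an up-sweep and a down-sweep of fan-in s."""
    n = len(xs)
    size = 1
    while size < n:
        size *= s
    if s < 2 or n == 0 or size != n:
        raise ValueError("len(xs) must be a positive power of s, with s >= 2")
    a = list(xs)
    L, B = lower_ones(s), broadcast_add(s)

    # Up-sweep: at stride t, take the last element of every t-segment,
    # group s of them, and replace the group with its prefix sums.
    t = 1
    while t < n:
        for start in range(0, n, s * t):
            idx = [start + (j + 1) * t - 1 for j in range(s)]
            for i, v in zip(idx, matvec(L, [a[i] for i in idx])):
                a[i] = v
        t *= s

    # Down-sweep: from coarse to fine strides, add the finished prefix just
    # before each group to the group's not-yet-corrected entries.
    t = n // (s * s)
    while t >= 1:
        for start in range(s * t, n, s * t):
            idx = [start - 1] + [start + (j + 1) * t - 1 for j in range(s - 1)]
            for i, v in zip(idx, matvec(B, [a[i] for i in idx])):
                a[i] = v
        t //= s
    return a


print(two_phase_scan([1, 2, 3, 4, 5, 6, 7, 8], 2))
# [1, 3, 6, 10, 15, 21, 28, 36]
```

In this sketch the groups within one stride are independent of each other, so they could in principle be processed in parallel; only the strides themselves must run in order.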

Key Benefits of MatMulScan

– **Reduced Computational Depth**: Fewer dependent processing steps are needed to scan large datasets.

– **Enhanced Scalability**: Maintains performance as data sizes grow, making it suitable for diverse applications.

– **Improved Hardware Utilization**: Expresses the scan as matrix multiplications so that TCUs, rather than general-purpose cores alone, do the work.

– **Broad Applicability**: Beyond prefix sums, it shows promise in various applications like gradient-boosting trees, parallel sorting, and graph algorithms.
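As a rough illustration of the depth benefit, suppose the scan is organized as a tree in which each level combines `s` elements at once. This is an assumption made for illustration, and the numbers below are not results from the paper. Under that assumption, the number of tree levels shrinks as the fan-in grows:

```python
def tree_levels(n, radix):
    """Number of levels in a reduction tree over n elements with this fan-in."""
    levels, span = 0, 1
    while span < n:
        span *= radix
        levels += 1
    return levels


n = 2 ** 20  # about one million elements
levels_by_radix = {radix: tree_levels(n, radix) for radix in (2, 4, 16)}
print(levels_by_radix)  # {2: 20, 4: 10, 16: 5}
```

An up-sweep followed by a down-sweep would traverse roughly twice this many levels, so a larger block size directly shortens the chain of dependent steps.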

Conclusion

MatMulScan is a notable advance in parallel scan algorithms, addressing both scalability and computational depth. By building on tensor core hardware, it balances performance with practicality and extends the use of TCUs beyond neural networks to broader problems in computational science and engineering.

