The Value of Kangaroo: Accelerating Large Language Models

Addressing Inference Speed and Efficiency

Large language models (LLMs) have significantly advanced natural language processing, showing remarkable performance in tasks such as translation, question answering, and text summarization. However, their inference is slow: they generate text autoregressively, one token per full forward pass, which hinders real-time applications.

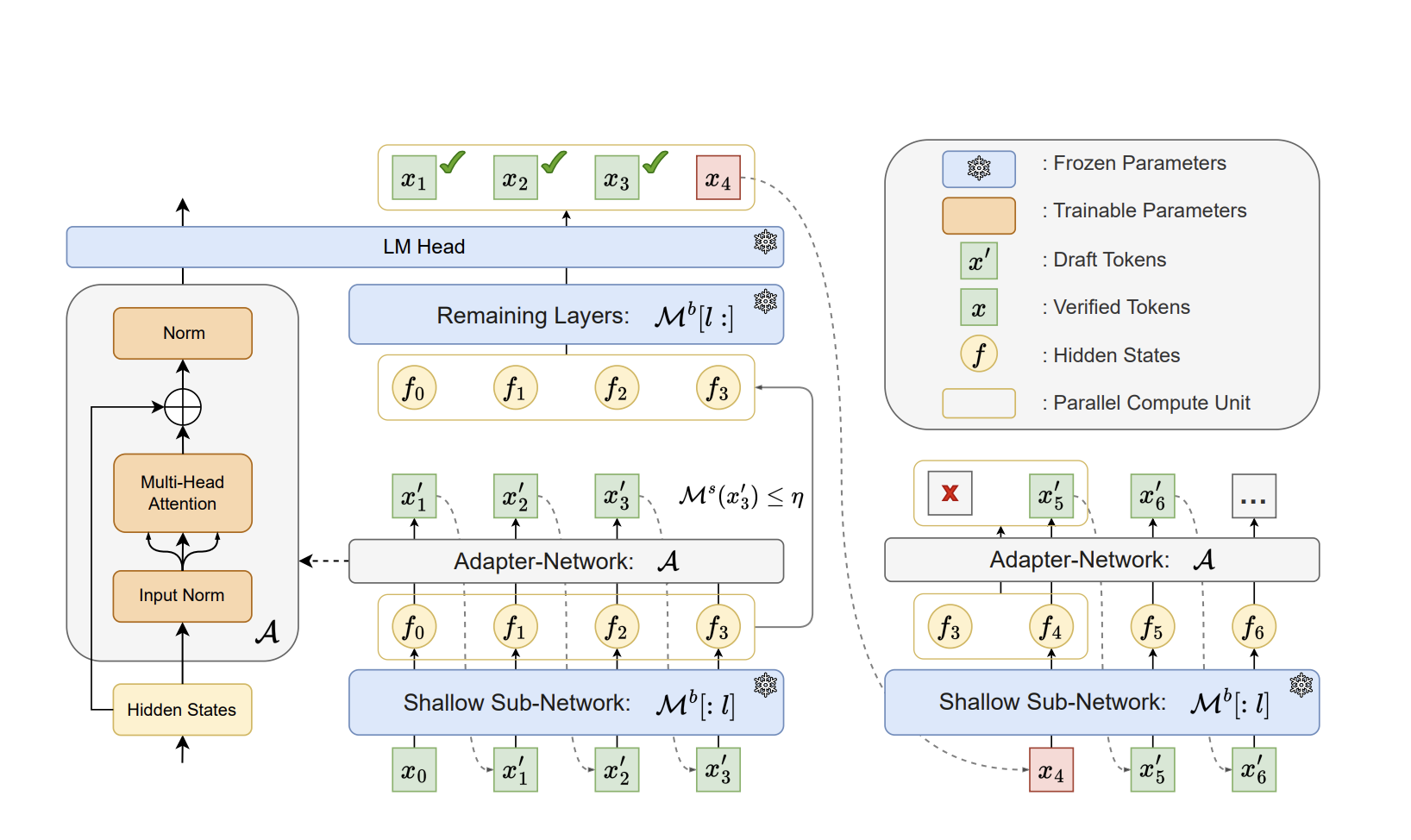

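Kangaroo addresses this with an efficient self-speculative decoding approach. In speculative decoding, a cheap draft model proposes several tokens and the full LLM verifies them in a single forward pass, so more than one token can be accepted per step. Rather than training a separate draft model, Kangaroo uses a fixed shallow sub-network of the LLM itself (its first few layers plus a lightweight adapter) as the draft model. It also adds an early-exiting mechanism that stops drafting once the draft model's confidence in the next token falls below a threshold, which avoids spending time on tokens that are likely to be rejected.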
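
The draft, early-exit, and verify loop can be illustrated with a minimal Python sketch. It assumes greedy decoding and uses toy stand-in models: `target_model`, `draft_model`, the confidence `threshold`, and the draft length `gamma` are all hypothetical and chosen for illustration, not taken from Kangaroo's implementation. The point it demonstrates is that verification by the full model keeps the output identical to ordinary greedy decoding while needing fewer full-model steps.

```python
from typing import Callable, List, Sequence, Tuple

# A "model" maps a token prefix to a probability distribution over the vocabulary.
Model = Callable[[Sequence[int]], List[float]]
VOCAB = 8


def argmax(probs: Sequence[float]) -> int:
    return max(range(len(probs)), key=lambda i: probs[i])


def target_model(prefix: Sequence[int]) -> List[float]:
    """Hypothetical stand-in for the full LLM: a peaked, deterministic distribution."""
    nxt = (sum(prefix) * 3 + len(prefix)) % VOCAB
    probs = [0.02] * VOCAB
    probs[nxt] = 1.0 - 0.02 * (VOCAB - 1)
    return probs


def draft_model(prefix: Sequence[int]) -> List[float]:
    """Hypothetical stand-in for the shallow sub-network + adapter."""
    if len(prefix) % 4 == 3:
        return [1.0 / VOCAB] * VOCAB  # unsure: triggers early exit
    probs = target_model(prefix)
    if len(prefix) % 5 == 2:
        probs = probs[1:] + probs[:1]  # confident but wrong token
    return probs


def greedy_decode(model: Model, prompt: Sequence[int], n_new: int) -> List[int]:
    out = list(prompt)
    for _ in range(n_new):
        out.append(argmax(model(out)))
    return out


def speculative_decode(target: Model, draft: Model, prompt: Sequence[int],
                       n_new: int, gamma: int = 4,
                       threshold: float = 0.6) -> Tuple[List[int], int]:
    """Returns the generated sequence and the number of verification steps."""
    out = list(prompt)
    goal = len(prompt) + n_new
    steps = 0
    while len(out) < goal:
        steps += 1
        # 1) Draft up to `gamma` tokens, exiting early when confidence is low.
        drafted: List[int] = []
        ctx = list(out)
        for _ in range(gamma):
            probs = draft(ctx)
            if max(probs) < threshold:
                break
            drafted.append(argmax(probs))
            ctx.append(drafted[-1])
        # 2) Verify: keep the longest drafted prefix the target agrees with.
        #    (A real system scores all drafted positions in one forward pass.)
        accepted: List[int] = []
        for tok in drafted:
            if tok != argmax(target(out + accepted)):
                break
            accepted.append(tok)
        # 3) The target always adds one token: a correction or a bonus token.
        accepted.append(argmax(target(out + accepted)))
        out.extend(accepted)
    return out[:goal], steps


spec, steps = speculative_decode(target_model, draft_model, [1, 2], n_new=12)
assert spec == greedy_decode(target_model, [1, 2], 12)  # same output as greedy
print(steps)  # 6 verification steps instead of 12
```

In this toy run the draft model is confidently wrong twice, and each time the verifier substitutes the target's own token, so the result still matches greedy decoding exactly. With sampling instead of greedy decoding, the acceptance test would need a rejection-sampling rule to stay lossless.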

Practical Solutions and Results

Kangaroo's lossless self-speculative decoding framework significantly reduces latency, achieving speedups of up to about 1.7× over standard autoregressive decoding and outperforming Medusa-1 while using 88.7% fewer additional parameters. Because every drafted token is checked by the full model, the generated output is unchanged, so the latency reduction comes without any loss in accuracy, making the approach well suited to real-time natural language processing.
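
Where such speedups come from can be seen in the standard analysis of speculative decoding (Leviathan et al., 2023). The minimal Python sketch below assumes each drafted token is accepted independently with probability `alpha`, that `gamma` tokens are drafted per step, and that one draft step costs a fraction `c` of a full forward pass. It is a simplified model, not Kangaroo's own cost accounting, and the input values are hypothetical.

```python
def expected_tokens_per_step(alpha: float, gamma: int) -> float:
    """Expected tokens per target pass when each of `gamma` drafted tokens is
    accepted independently with probability `alpha` (0 <= alpha < 1)."""
    return (1 - alpha ** (gamma + 1)) / (1 - alpha)


def expected_speedup(alpha: float, gamma: int, c: float) -> float:
    """Expected wall-time speedup over plain autoregressive decoding, where `c` is
    the cost of one draft step relative to one full-model forward pass."""
    return expected_tokens_per_step(alpha, gamma) / (gamma * c + 1)


# Illustrative, hypothetical inputs (not measurements from the Kangaroo paper):
print(expected_tokens_per_step(0.8, 4))  # ~3.36 tokens per verification pass
print(expected_speedup(0.8, 4, 0.1))     # ~2.40x
```

The formula shows why both of Kangaroo's design choices matter: a shallow, shared sub-network keeps the draft cost `c` small, and early exiting avoids paying that cost for tokens that would probably be rejected.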

Engage with AI Solutions

For AI KPI management advice, connect with us at hello@itinai.com. Stay tuned for continuous insights into leveraging AI on our Telegram channel or Twitter.

Spotlight on a Practical AI Solution

Consider the AI Sales Bot from itinai.com/aisalesbot, designed to automate customer engagement 24/7 and manage interactions across all customer journey stages.