Understanding Large Language Models (LLMs)

Large Language Models (LLMs) are often described as mimicking aspects of human reasoning. They can interpret abstract situations described in text, such as how objects are arranged or how tasks are set up in a real or virtual environment. This research investigates whether LLMs focus on the details that help achieve specific goals, rather than trying to represent every detail of a situation.

Finding the Right Level of Detail

A key challenge in AI is deciding how detailed an understanding of the world a given task requires. Richer representations can improve decision-making, but overly simplified ones may miss crucial information. Researchers are exploring how LLMs strike this balance when processing text descriptions of the world.

Limitations in Current Research

Current methods often try to recover complete world states from LLMs. However, general-purpose world representations need to be distinguished from representations focused on a specific task. Some models appear good at identifying relationships between entities, while others struggle to follow dynamic situations. These inconsistent findings point to the need for a better framework for assessing the level of abstraction in LLM representations.
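Work on recovering world states from language models commonly relies on probing: a small classifier, often linear, is trained to read a world-state variable (for example, an object's location) out of a model's hidden states. Assuming probing is the kind of method meant here, the sketch below is a minimal least-squares linear probe in NumPy. The hidden states are synthetic stand-ins rather than real LLM activations, and the names `fit_linear_probe` and `probe_accuracy` are hypothetical.

```python
import numpy as np

def fit_linear_probe(hidden, labels, num_classes, ridge=1e-2):
    """Least-squares linear probe: regress one-hot labels on hidden states."""
    x = np.hstack([hidden, np.ones((len(hidden), 1))])  # append a bias column
    y = np.eye(num_classes)[labels]                    # one-hot targets
    # Closed-form ridge solution: W = (X^T X + ridge * I)^(-1) X^T Y
    return np.linalg.solve(x.T @ x + ridge * np.eye(x.shape[1]), x.T @ y)

def probe_accuracy(hidden, labels, w):
    """Fraction of examples whose highest-scoring class matches the label."""
    x = np.hstack([hidden, np.ones((len(hidden), 1))])
    return float(np.mean(np.argmax(x @ w, axis=1) == labels))

# Synthetic "hidden states" (an assumption for illustration): each of 3
# hypothetical world states shifts a 16-dimensional vector, plus Gaussian noise.
rng = np.random.default_rng(0)
labels = rng.integers(0, 3, size=600)
centers = rng.normal(size=(3, 16)) * 3.0
hidden = centers[labels] + rng.normal(size=(600, 16))

# Fit on the first 400 examples, evaluate on the held-out 200.
w = fit_linear_probe(hidden[:400], labels[:400], num_classes=3)
acc = probe_accuracy(hidden[400:], labels[400:], w)
print(f"held-out probe accuracy: {acc:.2f}")
```

High probe accuracy suggests the variable is linearly decodable; it does not by itself show that the model uses it. Comparing probes for goal-relevant and goal-irrelevant variables is one way to ask which level of detail a model keeps.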

A New Framework for Improvement

Researchers from Mila, McGill University, and Borealis AI have proposed a framework based on state abstraction theory from reinforcement learning. State abstractions simplify representations while keeping the information needed for task-specific goals. The researchers tested the framework on a task called “REPLACE,” in which LLMs manipulate objects in a text-based environment to reach set goals.
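In reinforcement learning, a state abstraction maps many concrete states to one abstract state. Two standard examples are Q*-irrelevance, which merges states with identical optimal action values, and π*-irrelevance, which merges states that share an optimal action; the second is coarser. The Python sketch below builds both partitions from a toy Q-table. The states, actions, and values are hypothetical, and this illustrates the general theory, not the paper's experimental setup.

```python
from collections import defaultdict

def partition(q_table, signature):
    """Group states whose signature(q_row) is identical; return sorted groups."""
    groups = defaultdict(list)
    for state, q_row in q_table.items():
        groups[signature(q_row)].append(state)
    return sorted(sorted(g) for g in groups.values())

def q_star_signature(q_row, ndigits=6):
    # Q*-irrelevance: identical optimal value for every action.
    return tuple((a, round(v, ndigits)) for a, v in sorted(q_row.items()))

def pi_star_signature(q_row, ndigits=6):
    # pi*-irrelevance, grouped here by the exact set of optimal actions.
    best = round(max(q_row.values()), ndigits)
    return frozenset(a for a, v in q_row.items() if round(v, ndigits) == best)

# Hypothetical Q*(s, a) values for a tiny "move the cup" task.
q_table = {
    "cup_in_box": {"pick": 0.0, "wait": 1.0},
    "cup_on_shelf": {"pick": 0.8, "wait": 0.4},
    "cup_on_table_lamp_off": {"pick": 1.0, "wait": 0.5},
    "cup_on_table_lamp_on": {"pick": 1.0, "wait": 0.5},
}

print(partition(q_table, q_star_signature))
print(partition(q_table, pi_star_signature))
```

The lamp's status never changes the values, so both abstractions discard it. π*-irrelevance also merges the shelf state with the table states, because `pick` is the best action in all of them even though the values differ.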

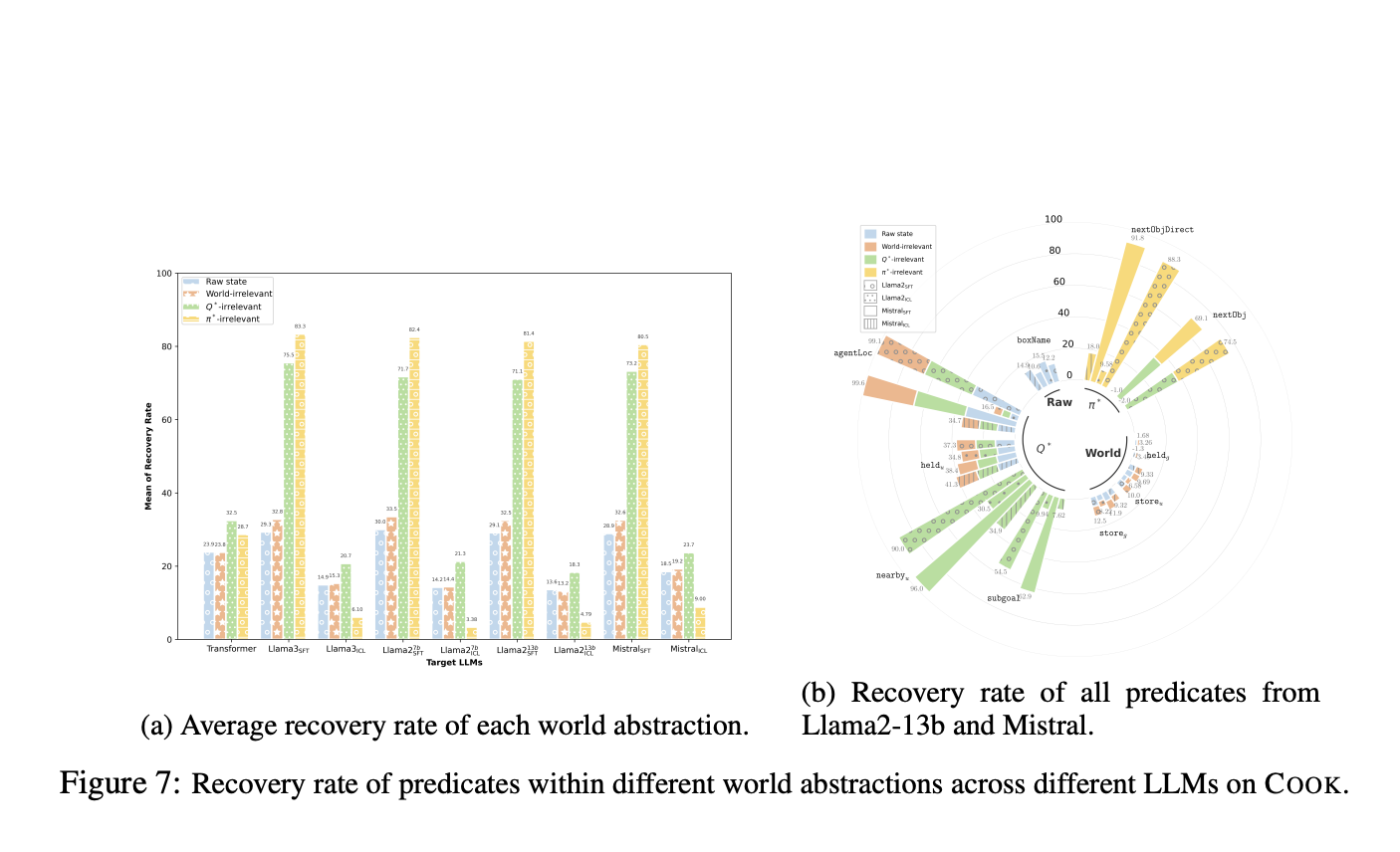

Key Findings on LLM Performance

The study found that fine-tuned models effectively prioritize goal-oriented information. For example, fine-tuned Llama2-13b and Mistral-13b achieved success rates of 88.30% and 92.15% on the REPLACE task, significantly outperforming their pre-trained versions. This suggests that targeted training improves LLMs’ ability to focus on relevant details.

The Role of Pre-Training

More advanced pre-training appears to strengthen reasoning in LLMs, but mainly for goal-oriented aspects. For instance, the pre-trained model Phi3-17b was good at identifying the necessary actions but struggled to capture broader details of the world. This indicates that while pre-training helps with goal-oriented tasks, it may not fully prepare models for a comprehensive understanding of their environment.

Information Processing During Tasks

Fine-tuned models tend to ignore details that do not affect their goals, reducing relationships between objects to the essentials needed for task completion. This streamlines decision-making, but it can limit performance on tasks that require detailed knowledge of the environment.
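Filtering out goal-irrelevant details can be made concrete with an explicit analogy. The Python sketch below takes a hypothetical text-world state and keeps only the objects and attributes that a goal such as "put the apple in the bowl" mentions. The state layout and the function names `goal_relevant_view` and `to_text` are illustrative assumptions, not part of the study, and the models do not literally run such code.

```python
from typing import Dict, Set

State = Dict[str, Dict[str, str]]  # object name -> {attribute: value}

def goal_relevant_view(state: State, goal_objects: Set[str], goal_attrs: Set[str]) -> State:
    """Drop objects and attributes that the goal does not mention."""
    return {
        obj: {k: v for k, v in attrs.items() if k in goal_attrs}
        for obj, attrs in state.items()
        if obj in goal_objects
    }

def to_text(state: State) -> str:
    """Serialize a state as one line per object, in sorted order."""
    lines = []
    for obj in sorted(state):
        facts = ", ".join(f"{k}={v}" for k, v in sorted(state[obj].items()))
        lines.append(f"{obj}: {facts}")
    return "\n".join(lines)

# Hypothetical full world state, including details irrelevant to the goal.
world = {
    "apple": {"location": "table", "color": "red"},
    "bowl": {"location": "shelf", "color": "white"},
    "lamp": {"location": "desk", "status": "on"},
}

view = goal_relevant_view(world, goal_objects={"apple", "bowl"}, goal_attrs={"location"})
print(to_text(view))
```

The trade-off shows up directly: `view` is shorter and sufficient for the goal, but it can no longer answer a question such as whether the lamp is on.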

Key Takeaways

- Fine-tuned LLMs prioritize actionable details: Models like Llama2-13b achieve high success rates in task completion.

- Pre-training enhances reasoning: It helps with goal-oriented tasks but does not necessarily improve broader understanding.

- Fine-tuning simplifies representations: This helps decision-making but may restrict versatility in complex tasks.

- Tailored training is crucial: Fine-tuning boosts efficiency and success in specific applications.

Conclusion

This research highlights the strengths and limitations of LLMs in understanding and reasoning about the world. While fine-tuned models excel at focusing on actionable insights, they often miss broader environmental dynamics, which can hinder their effectiveness in more complex tasks.

