Practical Solutions and Value of Homomorphic Encryption Reinforcement Learning (HERL)

Overview

Federated Learning (FL) lets machine learning models be trained on decentralized data sources without pooling the raw data in one place, a property that is crucial in industries such as healthcare and finance. However, adding Homomorphic Encryption (HE) to protect data during training introduces its own challenges.

Challenges of Homomorphic Encryption

Homomorphic Encryption enables computation on encrypted data without decrypting it, but it adds significant computational and communication overhead. That overhead is hardest to manage in diverse client environments, where clients differ in processing capacity and security requirements.
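To make "computation on encrypted data without decryption" concrete, here is a toy sketch of encrypted FL aggregation using the Paillier cryptosystem, an additively homomorphic scheme. This is a swapped-in illustration: the parameters HERL tunes (coefficient modulus, polynomial modulus degree) belong to lattice-based schemes such as CKKS, not Paillier. The key is deliberately tiny and insecure, and the fixed-point `SCALE` and the client updates are hypothetical.

```python
import math
import secrets

# Toy Paillier key from two Mersenne primes. The ~92-bit modulus is far too
# small to be secure; real deployments use a modulus of 2048 bits or more.
P, Q = 2**31 - 1, 2**61 - 1
N = P * Q
N2 = N * N
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # lcm(p - 1, q - 1)
MU = pow(LAM, -1, N)  # modular inverse; valid because the generator is g = N + 1

SCALE = 1000  # hypothetical fixed-point scale for quantizing float updates


def encrypt(m: int) -> int:
    """Encrypt an integer; negative values are encoded modulo N."""
    while True:
        r = secrets.randbelow(N - 1) + 1  # random r in [1, N - 1]
        if math.gcd(r, N) == 1:
            break
    # With g = N + 1, g**m mod N**2 simplifies to 1 + m*N.
    return ((1 + (m % N) * N) * pow(r, N, N2)) % N2


def decrypt(c: int) -> int:
    """Decrypt, mapping residues above N // 2 back to negative integers."""
    m = ((pow(c, LAM, N2) - 1) // N) * MU % N
    return m - N if m > N // 2 else m


def add(c1: int, c2: int) -> int:
    """Homomorphic addition: multiplying ciphertexts adds the plaintexts."""
    return (c1 * c2) % N2


# Hypothetical per-client model updates (two weights each).
client_updates = [[0.5, -0.2], [0.1, 0.3], [-0.4, 0.4]]

# Each client quantizes and encrypts locally; the server sees only ciphertexts.
encrypted = [[encrypt(round(w * SCALE)) for w in upd] for upd in client_updates]

# Server-side aggregation, performed without decrypting anything.
aggregate = encrypted[0]
for upd in encrypted[1:]:
    aggregate = [add(a, b) for a, b in zip(aggregate, upd)]

# Only the private-key holder can decrypt the sum, then average it.
summed = [decrypt(c) for c in aggregate]
average = [s / SCALE / len(client_updates) for s in summed]
print(summed)  # [200, 500]
print(average)
```

The overhead is visible even at this toy scale: each quantized weight, which would fit in a few bytes, becomes a ciphertext of up to 184 bits (twice the modulus size), and every addition becomes a big-integer multiplication.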

Introducing HERL

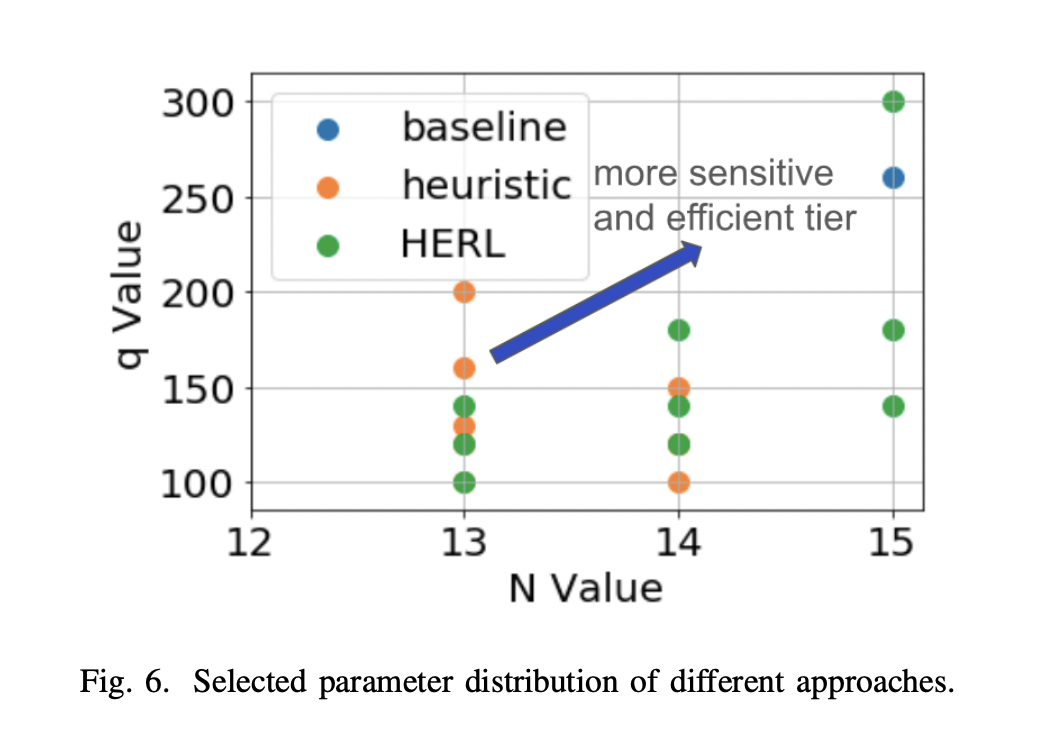

HERL is a Reinforcement Learning (RL) technique that uses Q-Learning to tune encryption parameters dynamically for different client groups. It targets two HE parameters, the coefficient modulus and the polynomial modulus degree, which together determine both the security level and the computational load.
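To show what tuning the coefficient modulus and polynomial modulus degree can involve, the sketch below builds a hypothetical action space of CKKS-style configurations. It keeps only those within the maximum total coefficient-modulus size for roughly 128-bit security (the HomomorphicEncryption.org standard table that Microsoft SEAL also uses) and estimates ciphertext size as about 2 · N · log2(Q) bits. The `HEConfig` class and the candidate bit-size lists are illustrative assumptions, not settings taken from the study.

```python
from dataclasses import dataclass
from typing import Tuple

# Maximum total coefficient-modulus bits for ~128-bit classical security,
# from the HomomorphicEncryption.org standard table (also used by Microsoft SEAL).
MAX_COEFF_BITS_128 = {4096: 109, 8192: 218, 16384: 438, 32768: 881}


@dataclass(frozen=True)
class HEConfig:
    """One hypothetical action: a CKKS-style parameter choice."""
    poly_modulus_degree: int              # N, the ring dimension
    coeff_mod_bit_sizes: Tuple[int, ...]  # bit sizes of the primes forming Q

    @property
    def total_bits(self) -> int:
        return sum(self.coeff_mod_bit_sizes)  # approximately log2(Q)

    def is_128bit_secure(self) -> bool:
        limit = MAX_COEFF_BITS_128.get(self.poly_modulus_degree)
        return limit is not None and self.total_bits <= limit

    def approx_ciphertext_kib(self) -> float:
        # A fresh ciphertext is 2 polynomials with N coefficients modulo Q,
        # so roughly 2 * N * log2(Q) bits before serialization overhead.
        return 2 * self.poly_modulus_degree * self.total_bits / 8 / 1024


CANDIDATES = [
    HEConfig(4096, (40, 20, 40)),
    HEConfig(8192, (60, 40, 40, 60)),
    HEConfig(8192, (60, 40, 40, 40, 60)),  # 240 bits: exceeds the 218-bit limit
    HEConfig(16384, (60, 40, 40, 40, 40, 60)),
]

# Only configurations that keep ~128-bit security are offered to the agent.
ACTIONS = [c for c in CANDIDATES if c.is_128bit_secure()]
for c in ACTIONS:
    print(c.poly_modulus_degree, c.total_bits, f"{c.approx_ciphertext_kib():.0f} KiB")
```

The three surviving actions come out at roughly 100, 400, and 1120 KiB per ciphertext. A larger N permits a larger coefficient modulus (more precision and multiplicative depth) at the same security level, but ciphertext size and computation grow with it; that is the trade-off an agent choosing among such actions has to navigate.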

Implementation Process

HERL first profiles each client by its security requirements and computing capabilities, then clusters the clients into tiers, and finally uses Q-Learning to select encryption settings for each tier. According to the study, this speeds up convergence and improves utility without compromising security.
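The profiling-and-tiering step can be sketched as follows. The source does not say which clustering algorithm HERL uses; this minimal pure-Python k-means is a stand-in, and the client names and the two normalized features (compute capacity, security requirement) are hypothetical.

```python
import math

# Hypothetical client profiles: (compute capacity, security requirement),
# both normalized to [0, 1]. HERL's actual profiling features may differ.
clients = {
    "hospital_a": (0.90, 0.95),
    "hospital_b": (0.85, 0.90),
    "clinic_c": (0.40, 0.80),
    "bank_d": (0.50, 0.85),
    "phone_e": (0.10, 0.30),
    "phone_f": (0.15, 0.20),
}


def kmeans(points, k, iters=20):
    """Plain k-means (k >= 2) with deterministic initialization."""
    ordered = sorted(points)
    # Spread initial centroids evenly across points sorted by compute capacity.
    centroids = [ordered[i * (len(ordered) - 1) // (k - 1)] for i in range(k)]
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda j: math.dist(p, centroids[j]))
            groups[nearest].append(p)
        # Move each centroid to the mean of its group (keep it if the group is empty).
        centroids = [
            tuple(sum(dim) / len(g) for dim in zip(*g)) if g else centroids[j]
            for j, g in enumerate(groups)
        ]
    return centroids


def assign_tiers(profiles, k=3):
    """Map each client to a tier index; tier 0 has the lowest compute capacity."""
    centroids = sorted(kmeans(list(profiles.values()), k))
    return {
        name: min(range(k), key=lambda j: math.dist(p, centroids[j]))
        for name, p in profiles.items()
    }


tiers = assign_tiers(clients)
print(tiers)
```

Sorting the centroids by compute capacity gives stable tier labels (0 = most constrained), so a per-tier Q-Learning agent can index its table directly by tier.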

Key Contributions

The RL agent adjusts encryption settings as the FL environment changes, achieving a better trade-off among security, utility, and latency. It makes FL operations more efficient while keeping data secure; the authors report up to a 24% increase in training efficiency.
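The per-tier Q-Learning loop and its security/utility/latency reward can be sketched as below. Every number here is hypothetical: the three actions and their security, utility, and cost scores, the tier profiles, and the reward weights. The reward simply adds weighted security and utility and subtracts a latency proxy (cost divided by the tier's compute capacity); the study's actual reward and state definitions are not given in this summary.

```python
import random

# Hypothetical action space: relative security, utility (e.g. model accuracy
# after encrypted training), and compute cost of three HE configurations.
ACTIONS = [
    {"name": "N=4096", "security": 1.0, "utility": 0.90, "cost": 1.0},
    {"name": "N=8192", "security": 2.0, "utility": 0.95, "cost": 3.0},
    {"name": "N=16384", "security": 3.0, "utility": 0.96, "cost": 8.0},
]

# Hypothetical tiers: compute capacity and how strongly each tier values security.
TIERS = [
    {"compute": 1.0, "security_weight": 0.5},   # tier 0: constrained devices
    {"compute": 4.0, "security_weight": 1.0},   # tier 1: mid-range clients
    {"compute": 16.0, "security_weight": 2.0},  # tier 2: well-resourced, security-sensitive
]


def reward(tier: int, action: int) -> float:
    """Weighted security plus utility, minus a latency proxy (cost / compute)."""
    t, a = TIERS[tier], ACTIONS[action]
    latency = a["cost"] / t["compute"]
    return t["security_weight"] * a["security"] + a["utility"] - latency


def greedy(q_row):
    """Index of the highest-valued action (the first one wins ties)."""
    return max(range(len(q_row)), key=lambda a: q_row[a])


def train_q_table(episodes=30_000, alpha=0.1, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in TIERS]
    for ep in range(episodes):
        state = ep % len(TIERS)  # serve tiers round-robin, one FL round each
        if rng.random() < epsilon:
            action = rng.randrange(len(ACTIONS))  # explore
        else:
            action = greedy(q[state])  # exploit
        r = reward(state, action)
        next_state = state  # the same tier is served again in its next round
        td_target = r + gamma * max(q[next_state])
        q[state][action] += alpha * (td_target - q[state][action])
    return q


q_table = train_q_table()
policy = [greedy(row) for row in q_table]
for tier, action in enumerate(policy):
    print(f"tier {tier}: {ACTIONS[action]['name']}")
```

With these made-up numbers, the learned policy gives the most constrained tier the smallest configuration and the best-resourced, most security-sensitive tier the largest. Because each tier's next state here is simply its next round, the problem is close to a per-tier bandit; a richer state, such as training progress, would make the sequential side of Q-Learning matter more.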

Research Summary

The study examines how HE parameters affect FL performance, how those parameters can be adapted to varied client environments, and how to balance security, computational overhead, and utility in FL with HE. It demonstrates that RL can effectively adjust encryption parameters for different client tiers.

For more details, refer to the original research post on MarkTechPost.