GuideLLM: Evaluating and Optimizing Large Language Model (LLM) Deployment

Practical Solutions and Value

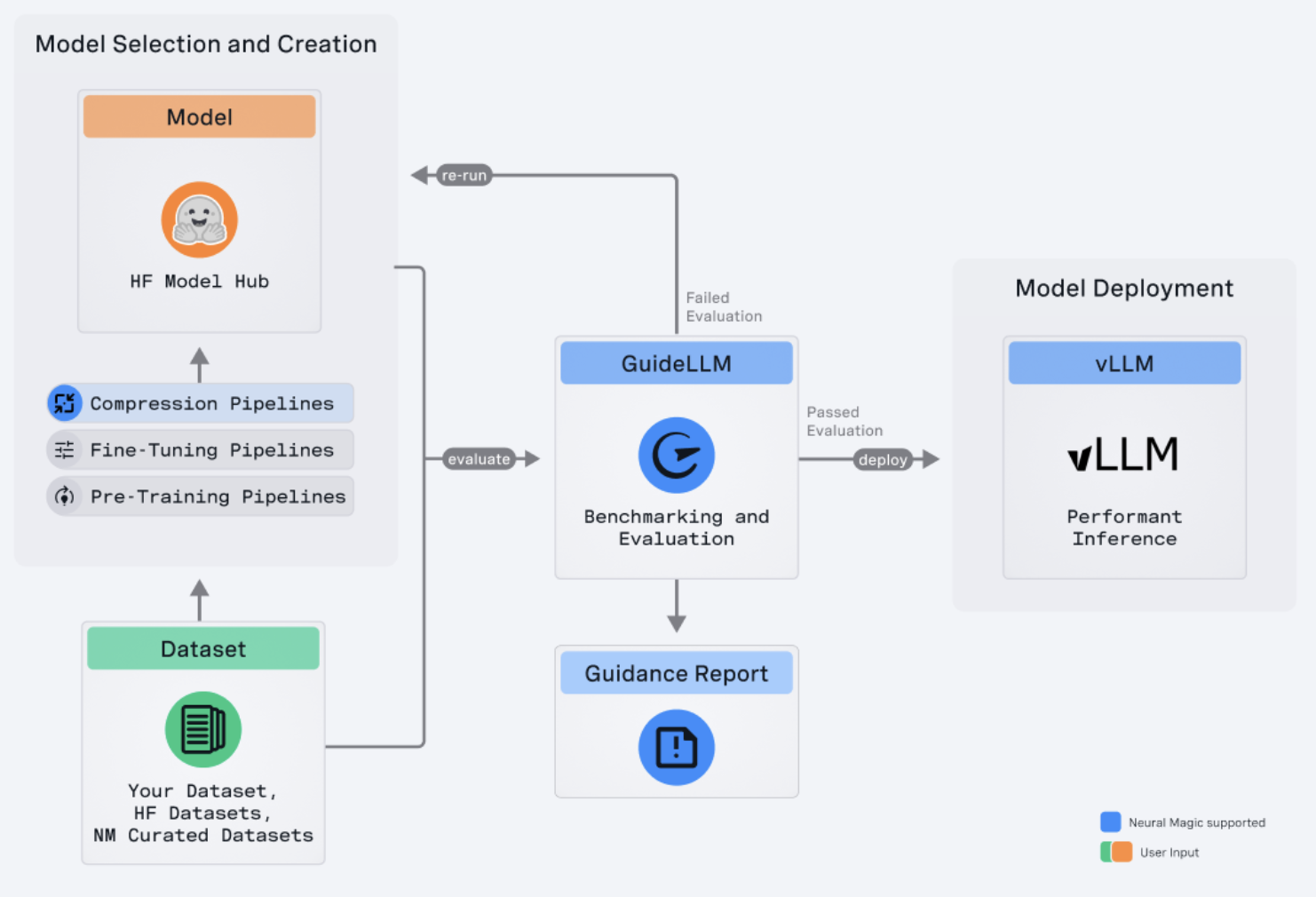

Deploying and tuning large language models (LLMs) efficiently matters for any application that serves them. Neural Magic’s GuideLLM is an open-source tool for evaluating and optimizing LLM deployments. It helps users reach their performance targets while keeping resource consumption low.

Key Features

- Performance Evaluation: Measure LLM performance under different load scenarios to check whether a deployment meets its service level objectives (SLOs).

- Resource Optimization: Identify the hardware configurations that use resources most efficiently and reduce cost.

- Cost Estimation: Understand how different configurations affect cost, so expenses can be kept down without sacrificing performance.

- Scalability Testing: Simulate growing numbers of concurrent users to see whether a deployment can scale without degrading performance.
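The cost-estimation and scalability features come down to simple arithmetic once throughput has been measured. The sketch below is not part of GuideLLM. It assumes you already have a measured output throughput (tokens per second) and a per-replica request-rate ceiling from a benchmark, and that hardware is billed by the hour. The function names and example prices are hypothetical.

```python
import math


def cost_per_million_tokens(hourly_price_usd: float, tokens_per_second: float) -> float:
    """Hardware cost of generating one million tokens on a single server.

    hourly_price_usd: what the server or instance costs per hour (your input).
    tokens_per_second: sustained output throughput measured under load.
    """
    if tokens_per_second <= 0:
        raise ValueError("throughput must be positive")
    tokens_per_hour = tokens_per_second * 3600
    return hourly_price_usd / tokens_per_hour * 1_000_000


def replicas_needed(target_rps: float, max_rps_per_replica: float) -> int:
    """Smallest number of identical replicas that can absorb the target request rate."""
    if max_rps_per_replica <= 0:
        raise ValueError("per-replica capacity must be positive")
    return math.ceil(target_rps / max_rps_per_replica)


if __name__ == "__main__":
    # Illustrative prices and throughputs only; substitute your own measurements.
    for name, price, tps in [("config-a", 4.00, 2000.0), ("config-b", 2.50, 900.0)]:
        print(f"{name}: ${cost_per_million_tokens(price, tps):.2f} per 1M tokens")
    print("replicas for 50 req/s at 12 req/s each:", replicas_needed(50, 12))
```

With these example numbers, config-a costs about $0.56 per million tokens and config-b about $0.77. The instance with the lower hourly price is not the cheaper configuration once throughput is taken into account.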

Getting Started

GuideLLM requires a compatible Python environment and is installed from PyPI with pip (`pip install guidellm`). To evaluate a deployment, start an OpenAI-compatible inference server, such as vLLM, serving the model you want to test, and point GuideLLM at that server.
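Before running a benchmark, it helps to confirm that the server is up and serving the expected model. OpenAI-compatible servers such as vLLM expose a `GET /v1/models` endpoint, and the sketch below queries it with the Python standard library. It is a pre-flight check written for this article, not a GuideLLM command, and it assumes the server listens on `localhost:8000` (vLLM's default port).

```python
import json
import urllib.request
from typing import List


def parse_model_ids(body: str) -> List[str]:
    """Extract model IDs from an OpenAI-style /v1/models response body."""
    payload = json.loads(body)
    return [entry["id"] for entry in payload.get("data", [])]


def list_served_models(base_url: str = "http://localhost:8000", timeout: float = 5.0) -> List[str]:
    """Ask an OpenAI-compatible server which models it is currently serving."""
    url = base_url.rstrip("/") + "/v1/models"
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return parse_model_ids(response.read().decode("utf-8"))


if __name__ == "__main__":
    try:
        print("Serving:", list_served_models())
    except (OSError, ValueError) as exc:  # connection errors, or a non-JSON reply
        print("Server not ready:", exc)
```

Separating the parsing from the HTTP call keeps the parsing logic testable without a running server.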

Running Evaluations

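GuideLLM's command-line interface (CLI) runs benchmarks that simulate different load scenarios against the target server and reports detailed performance metrics, such as request latency, time to first token, and throughput. These metrics show how efficiently and responsively the deployment handles traffic.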
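Simulating a load scenario largely means deciding when each request is sent. Load generators commonly model a target request rate in one of two ways: evenly spaced requests, or a Poisson process with exponentially distributed gaps between requests. The sketch below generates both kinds of send schedule. It illustrates the idea only and is not GuideLLM's internal code; the function names are hypothetical.

```python
import random
from typing import List, Optional


def constant_schedule(rate: float, duration: float) -> List[float]:
    """Send times (seconds from start) for evenly spaced requests at `rate` req/s."""
    interval = 1.0 / rate
    count = int(duration * rate)
    return [i * interval for i in range(count)]


def poisson_schedule(rate: float, duration: float, seed: Optional[int] = None) -> List[float]:
    """Send times for a Poisson arrival process averaging `rate` req/s.

    Gaps between requests are drawn from an exponential distribution, which
    yields bursty traffic closer to what independent users generate.
    """
    rng = random.Random(seed)
    times: List[float] = []
    t = rng.expovariate(rate)  # expovariate takes the rate (lambda), not the mean gap
    while t < duration:
        times.append(t)
        t += rng.expovariate(rate)
    return times


if __name__ == "__main__":
    steady = constant_schedule(rate=4.0, duration=10.0)
    bursty = poisson_schedule(rate=4.0, duration=10.0, seed=42)
    print(f"constant: {len(steady)} requests, poisson: {len(bursty)} requests")
```

Both schedules average the same rate, but the Poisson one occasionally packs several requests close together, which is what exposes queuing delays in a server.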

Customizing Evaluations

GuideLLM is configurable, so evaluations can be tailored to a given workload: users can adjust the benchmark runs, the number of concurrent requests, and the request rates.
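When tailoring an evaluation, a common pattern is to test a series of request rates between a light load and the server's saturation point. The sketch below captures that idea with a hypothetical `BenchmarkPlan` dataclass. Its field names are illustrative and do not correspond to GuideLLM's actual CLI options, which are listed in the project's documentation; `max_concurrency` is only recorded here and would be enforced by whatever runner executes the plan.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class BenchmarkPlan:
    """Hypothetical settings for a series of benchmark runs (field names are illustrative)."""
    min_rate: float                # req/s at light load, e.g. one request at a time
    max_rate: float                # req/s at saturation, e.g. measured by flooding the server
    num_runs: int = 5              # number of request rates to test, endpoints included
    max_concurrency: int = 64      # intended cap on in-flight requests during any run
    seconds_per_run: float = 60.0  # how long each run lasts

    def rates(self) -> List[float]:
        """Evenly spaced request rates from min_rate to max_rate, inclusive."""
        if self.num_runs < 2 or self.min_rate > self.max_rate:
            raise ValueError("need num_runs >= 2 and min_rate <= max_rate")
        step = (self.max_rate - self.min_rate) / (self.num_runs - 1)
        return [self.min_rate + i * step for i in range(self.num_runs)]

    def total_seconds(self) -> float:
        """Wall-clock time for the whole series, ignoring warm-up and teardown."""
        return self.num_runs * self.seconds_per_run


if __name__ == "__main__":
    plan = BenchmarkPlan(min_rate=1.0, max_rate=9.0)
    print("rates:", plan.rates(), "total seconds:", plan.total_seconds())
```

Spacing the rates evenly keeps the plan simple; a finer grid near the saturation point would give more detail where latency starts to climb, at the cost of longer benchmarks.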

Analyzing and Using Results

GuideLLM summarizes the results of each benchmark, helping users pinpoint performance bottlenecks and determine which request rates a deployment can sustain, so they can tune the deployment accordingly.
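Finding the request rate a deployment can sustain usually comes down to comparing a latency percentile at each tested rate against a service level objective. The sketch below does that with a nearest-rank percentile. The input format (a mapping from request rate to a list of per-request latencies in seconds) and the 99th-percentile default are assumptions for illustration, not GuideLLM's report format.

```python
import math
from typing import Dict, List, Optional


def percentile(samples: List[float], pct: float) -> float:
    """Nearest-rank percentile (0 < pct <= 100) of a non-empty list of samples."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def best_rate_within_slo(
    latencies_by_rate: Dict[float, List[float]],
    slo_seconds: float,
    pct: float = 99.0,
) -> Optional[float]:
    """Highest tested request rate whose pct-th percentile latency meets the SLO."""
    passing = [
        rate
        for rate, latencies in latencies_by_rate.items()
        if percentile(latencies, pct) <= slo_seconds
    ]
    return max(passing) if passing else None


if __name__ == "__main__":
    # Made-up latencies (seconds) per tested request rate (req/s), for illustration.
    results = {
        1.0: [0.20, 0.30, 0.25],
        4.0: [0.40, 0.50, 0.60],
        8.0: [0.90, 1.40, 2.00],
    }
    print("best rate under a 1 s p99 SLO:", best_rate_within_slo(results, slo_seconds=1.0))
```

With these made-up numbers the function returns 4.0, because the 8 req/s run breaks the 1-second target at the 99th percentile. With only three samples per rate, the 99th percentile is simply the slowest request; real runs collect far more samples.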

Community and Contribution

Neural Magic encourages community involvement in the development and improvement of GuideLLM. The project is open-source and licensed under the Apache License 2.0.

Conclusion

GuideLLM helps users deploy LLMs efficiently in real-world environments by showing how a deployment performs under load and what that performance costs.