Revolutionizing Creativity with Generative AI

Introduction to Generative AI Models

Generative AI models, including Large Language Models (LLMs) and diffusion models, are changing creative fields such as art and entertainment. These models can produce a wide range of content, including text, images, video, and audio.

Improving Output Quality

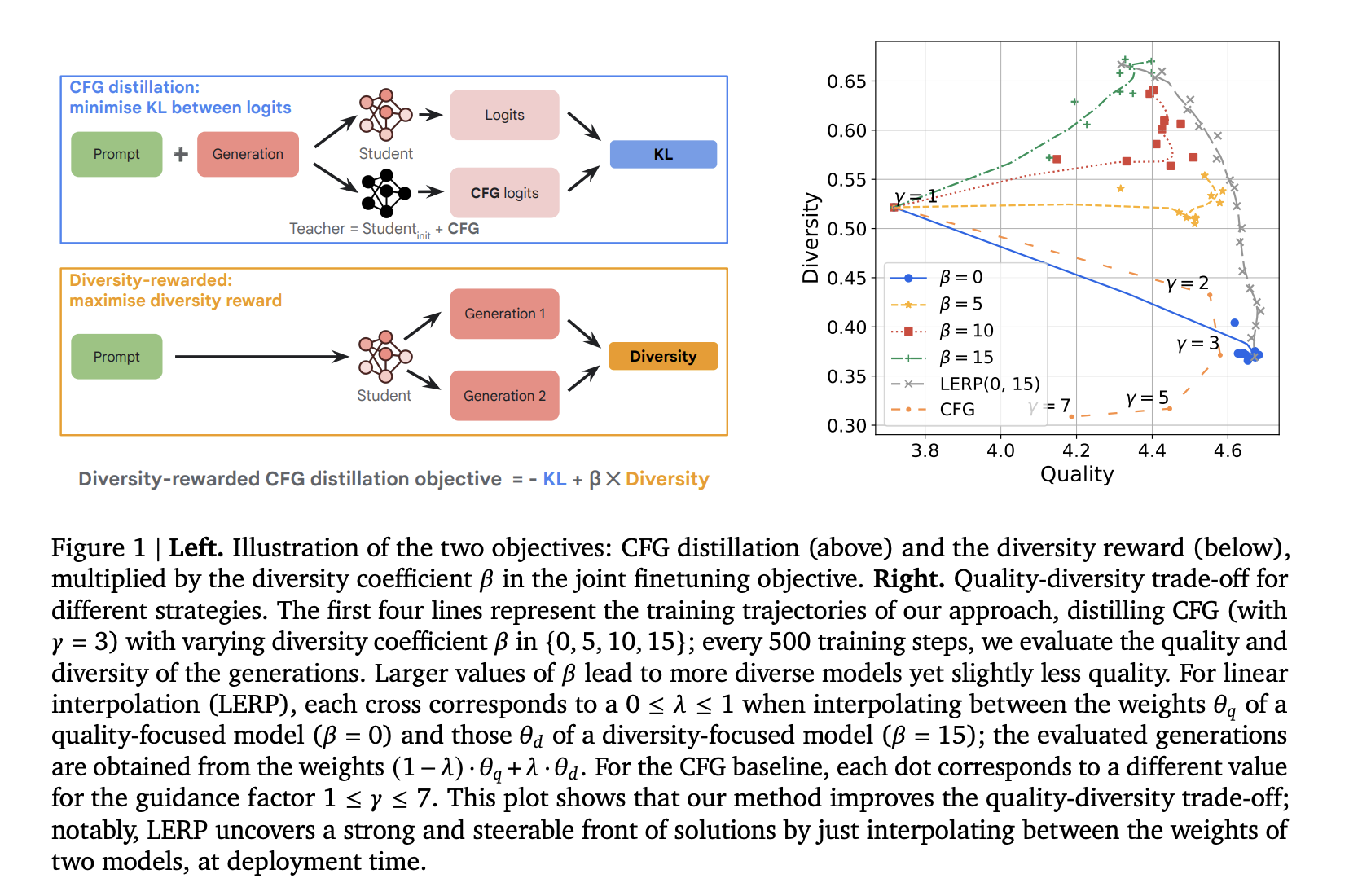

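Improving the quality of generated content often requires inference-time techniques such as Classifier-Free Guidance (CFG). CFG makes outputs adhere more closely to the prompt, but it comes with two drawbacks:

– **Higher computational cost**: each generation step needs both a prompt-conditioned and an unconditional forward pass.

– **Less diversity**: outputs for the same prompt tend to resemble one another.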
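
For autoregressive (token-by-token) generators, CFG is commonly applied to next-token logits: the model is run once with the prompt and once without it, and the two predictions are combined using a guidance scale. The Python sketch below assumes that common formulation; the function names, the toy 4-token vocabulary, and the logit values are hypothetical, chosen only to show why CFG costs two forward passes and why larger scales concentrate probability mass (lower entropy, hence less diversity).

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs):
    """Shannon entropy in nats; lower means a more peaked (less diverse) distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def cfg_logits(cond_logits, uncond_logits, guidance_scale):
    """Classifier-free guidance on next-token logits.

    Moves from the unconditional prediction toward (and, for scales > 1,
    past) the prompt-conditioned one: uncond + w * (cond - uncond).
    A scale of 1.0 returns the conditional logits.
    """
    return [u + guidance_scale * (c - u) for c, u in zip(cond_logits, uncond_logits)]


# Hypothetical logits for a 4-token vocabulary. In a real model each list
# comes from a separate forward pass (with and without the text prompt),
# which is why CFG roughly doubles the per-step compute.
cond = [2.0, 1.0, 0.5, 0.0]
uncond = [1.0, 1.0, 1.0, 1.0]

for w in (1.0, 3.0):
    probs = softmax(cfg_logits(cond, uncond, w))
    print(f"w={w}: probs={[round(p, 3) for p in probs]}, entropy={entropy(probs):.3f}")
```

With the uniform unconditional logits used here, CFG reduces to sharpening the conditional distribution as if by a temperature of 1/w, which makes the loss of diversity easy to see.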

Finding a balance between quality and diversity is essential for effective AI systems.

Exploring Existing Solutions

CFG is widely used for generating images, video, and audio, but its reduced diversity can hinder exploratory, creative tasks. Knowledge distillation has emerged as a valuable technique for training strong models, and offline methods have been proposed for distilling CFG-augmented models. Sampling strategies such as temperature, top-k, and nucleus sampling have also been compared, with nucleus sampling performing best when quality is prioritized.
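
The three sampling strategies mentioned above all operate on a model's next-token distribution. The sketch below is a generic, minimal Python illustration of temperature scaling, top-k filtering, and nucleus (top-p) filtering; it is not taken from the research described here, and the function names, logits, `k`, and `top_p` values are arbitrary examples.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Softmax with temperature: values below 1 sharpen, above 1 flatten."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def _renormalize(probs, keep):
    """Zero out tokens not in `keep` and rescale the rest to sum to 1."""
    kept = [p if i in keep else 0.0 for i, p in enumerate(probs)]
    total = sum(kept)
    return [p / total for p in kept]


def top_k_filter(probs, k):
    """Keep only the k most likely tokens."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return _renormalize(probs, set(order[:k]))


def nucleus_filter(probs, top_p):
    """Keep the smallest set of most likely tokens whose total mass >= top_p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    keep, cumulative = set(), 0.0
    for i in order:
        keep.add(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    return _renormalize(probs, keep)


def sample(probs, rng):
    """Draw one token index according to `probs`."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


logits = [3.0, 2.0, 1.0, 0.0, -1.0]
rng = random.Random(0)
probs = softmax(logits, temperature=0.8)
print("top-k token:", sample(top_k_filter(probs, k=2), rng))
print("nucleus token:", sample(nucleus_filter(probs, top_p=0.9), rng))
```

Nucleus sampling adapts the number of candidate tokens to the shape of the distribution, whereas top-k always keeps a fixed number.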

Innovative Approach by Google DeepMind

Google DeepMind researchers introduced a new fine-tuning procedure called diversity-rewarded CFG distillation. It combines two objectives:

– **A distillation objective** that trains the model, running without CFG, to match CFG-augmented predictions.

– **A reinforcement learning (RL) objective** with a diversity reward that encourages varied outputs for the same prompt.
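
The two objectives can be sketched as scalar terms. This Python sketch assumes (1) the distillation term is a KL divergence between the CFG-augmented teacher's next-token distribution and the student's, averaged over positions, with the KL direction chosen for illustration, and (2) the diversity reward is one minus the mean pairwise cosine similarity between embeddings of several generations for the same prompt, produced by an embedding model that is not shown. The `combined_objective` helper and its `beta` weight are hypothetical. Because the diversity reward depends on sampled outputs, the method optimizes it with RL; no gradient or policy update is shown here.

```python
import math


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions given as equal-length lists."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(student_probs, teacher_cfg_probs):
    """Average per-position KL from the CFG-augmented teacher distribution to
    the student's distribution (the student runs WITHOUT CFG).
    The KL direction here is an illustrative assumption."""
    per_step = [kl_divergence(t, s) for s, t in zip(student_probs, teacher_cfg_probs)]
    return sum(per_step) / len(per_step)


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def diversity_reward(embeddings):
    """1 - mean pairwise cosine similarity between embeddings of several
    generations for the same prompt; higher means more varied outputs.
    Needs at least two embeddings."""
    n = len(embeddings)
    sims = [cosine_similarity(embeddings[i], embeddings[j])
            for i in range(n) for j in range(i + 1, n)]
    return 1.0 - sum(sims) / len(sims)


def combined_objective(distill_loss, div_reward, beta):
    """Hypothetical scalar to maximize: stay close to the CFG teacher while
    earning a beta-weighted diversity bonus. beta=0 is pure distillation."""
    return -distill_loss + beta * div_reward


# Toy example: two positions over a 3-token vocabulary, and embeddings of
# three generations for one prompt (all values are made up).
student = [[0.5, 0.3, 0.2], [0.4, 0.4, 0.2]]
teacher = [[0.7, 0.2, 0.1], [0.6, 0.3, 0.1]]
embeds = [[1.0, 0.0, 0.0], [0.8, 0.6, 0.0], [0.0, 1.0, 0.0]]

loss = distillation_loss(student, teacher)
reward = diversity_reward(embeds)
print(f"distillation KL={loss:.4f}, diversity reward={reward:.4f}")
print(f"objective (beta=0.5)={combined_objective(loss, reward, beta=0.5):.4f}")
```

Raising `beta` in this sketch shifts the balance toward varied outputs at the expense of matching the CFG teacher.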

This technique makes it possible to control the quality-diversity trade-off at deployment time, and in music generation tasks it achieved a better balance than standard CFG.

Key Research Questions Addressed

The researchers conducted experiments to evaluate:

1. The effectiveness of CFG distillation.

2. The role of diversity rewards in reinforcement learning.

3. The potential of model merging for quality-diversity management.
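
Model merging can be illustrated with linear weight interpolation between two fine-tuned models: one tuned mainly for quality and one tuned with the diversity reward. This is a minimal sketch assuming plain linear interpolation over parameters stored as flattened Python lists; the parameter names and values are hypothetical, real checkpoints would hold tensors, and interpolation of this kind generally assumes both models were fine-tuned from the same initialization.

```python
def merge_weights(quality_params, diversity_params, lam):
    """Linearly interpolate two fine-tuned models with identical architectures.

    lam=0.0 returns the quality-focused weights, lam=1.0 the
    diversity-focused ones; values in between trade one for the other.
    """
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must be in [0, 1]")
    if quality_params.keys() != diversity_params.keys():
        raise ValueError("both models must have the same parameter names")
    return {
        name: [(1.0 - lam) * q + lam * d
               for q, d in zip(quality_params[name], diversity_params[name])]
        for name in quality_params
    }


# Hypothetical flattened parameters for two fine-tuned checkpoints.
quality_model = {"layer.weight": [1.0, -2.0, 0.5], "layer.bias": [0.0]}
diverse_model = {"layer.weight": [3.0, 0.0, 0.5], "layer.bias": [1.0]}

for lam in (0.0, 0.5, 1.0):
    print(lam, merge_weights(quality_model, diverse_model, lam))
```

Sweeping `lam` from 0 to 1 yields a family of models between the two endpoints, one way to pick a quality-diversity operating point without retraining.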

Evaluation Metrics and Results

Human raters assessed the generated music based on:

– Acoustic quality

– Text adherence

– Musicality

Results showed that the CFG-distilled model matches the quality of the CFG-augmented model and outperforms the original model. The model trained with a diversity reward was significantly more diverse than the others, and across various music prompts it produced more creative and varied results.

Conclusion

The researchers developed diversity-rewarded CFG distillation, a technique that improves the quality-diversity trade-off in generative models. It combines three elements:

– Online distillation to reduce computational load.

– Reinforcement learning with diversity rewards.

– Model merging for flexible quality-diversity management.

These advances are promising for applications that require both creativity and alignment with user needs.


