Google DeepMind Introduces the Frontier Safety Framework: A Set of Protocols Designed to Identify & Mitigate Potential Harms Related to Future AI Systems

As AI systems advance, they gain powerful capabilities that could pose risks in domains such as autonomy, cybersecurity, biosecurity, and machine learning R&D. The key challenge is to develop and deploy these systems safely while preventing misuse. Google DeepMind’s Frontier Safety Framework addresses these future risks by proactively identifying and mitigating potential harms from advanced AI models.

Practical Solutions and Value:

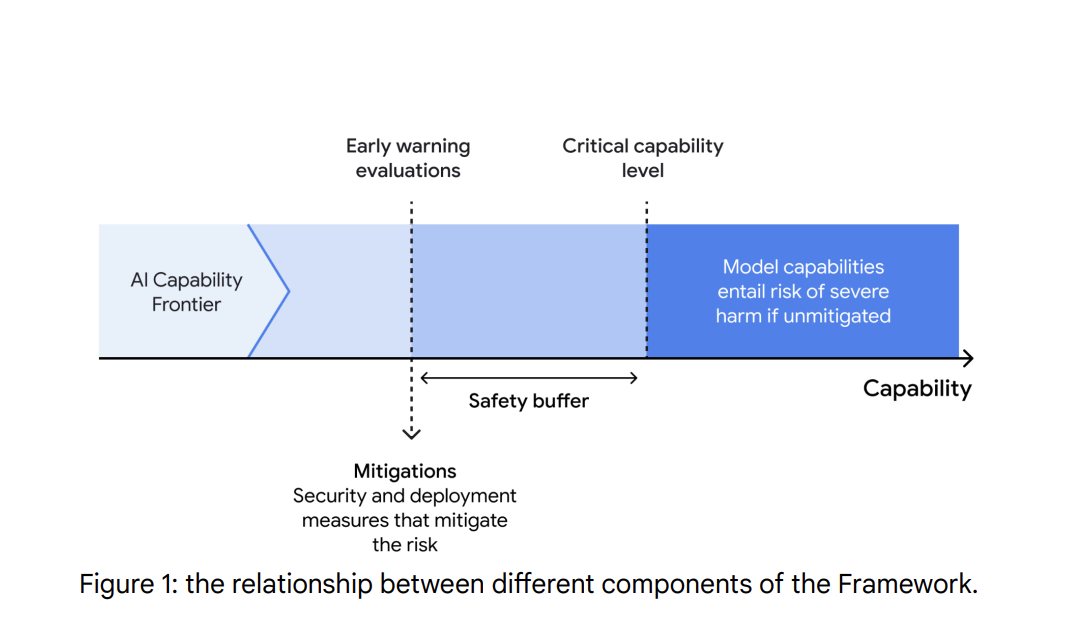

The Framework focuses on future risks from advanced AI capabilities rather than on harms from current systems. It comprises three stages:

- Identifying Critical Capability Levels (CCLs): Researching potential harm scenarios and determining the minimal level of capabilities a model must have to cause harm in high-risk domains.

- Evaluating Models for CCLs: Developing early warning evaluations to detect when a model is approaching a dangerous capability threshold.

- Applying Mitigation Plans: Implementing tailored mitigation plans when a model reaches a CCL, balancing benefits and risks while preventing misuse of critical capabilities.

The Framework initially focuses on four risk domains: autonomy, biosecurity, cybersecurity, and machine learning R&D, aiming to prevent severe harm from advanced AI models while balancing innovation and accessibility.
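As a rough illustration, the three stages above can be sketched in Python. This is a hypothetical sketch, not DeepMind's implementation: the Framework as summarized here gives no numeric scores, so the 0 to 100 scale, the thresholds, the warning margins, and every name (`CriticalCapabilityLevel`, `evaluate`, `next_action`, `CCLS`) are assumptions made purely for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    BELOW_THRESHOLD = "below_threshold"
    EARLY_WARNING = "early_warning"
    CCL_REACHED = "ccl_reached"


@dataclass(frozen=True)
class CriticalCapabilityLevel:
    domain: str          # one of the four risk domains named in the article
    threshold: int       # hypothetical score (0-100) at which the CCL counts as reached
    warning_margin: int  # hypothetical buffer below the threshold that triggers an early warning


# Stage 1 (identify CCLs): placeholder values, one entry per risk domain.
CCLS = {
    ccl.domain: ccl
    for ccl in (
        CriticalCapabilityLevel("autonomy", threshold=80, warning_margin=20),
        CriticalCapabilityLevel("biosecurity", threshold=70, warning_margin=20),
        CriticalCapabilityLevel("cybersecurity", threshold=75, warning_margin=15),
        CriticalCapabilityLevel("machine learning R&D", threshold=85, warning_margin=15),
    )
}


def evaluate(ccl: CriticalCapabilityLevel, score: int) -> Status:
    """Stage 2 (evaluate): compare a hypothetical evaluation score against a CCL."""
    if score >= ccl.threshold:
        return Status.CCL_REACHED
    if score >= ccl.threshold - ccl.warning_margin:
        return Status.EARLY_WARNING
    return Status.BELOW_THRESHOLD


def next_action(status: Status) -> str:
    """Stage 3 (mitigate): map an evaluation result to a placeholder response."""
    actions = {
        Status.BELOW_THRESHOLD: "continue routine evaluations",
        Status.EARLY_WARNING: "prepare a mitigation plan",
        Status.CCL_REACHED: "apply the mitigation plan",
    }
    return actions[status]


if __name__ == "__main__":
    # Made-up scores for a made-up model, one per domain.
    scores = {
        "autonomy": 50,
        "biosecurity": 55,
        "cybersecurity": 40,
        "machine learning R&D": 90,
    }
    for domain, score in scores.items():
        status = evaluate(CCLS[domain], score)
        print(f"{domain}: {status.value} -> {next_action(status)}")
```

The early-warning band is the point of the sketch: it flags a model that is approaching a threshold before it crosses it, which leaves time to prepare a response. Real evaluations would be far richer than a single integer score per domain.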
