Transforming Sequence Modeling with Titans

Overview of Large Language Models (LLMs)

Large Language Models (LLMs) have changed how we process sequences. Most are built on Transformers, whose attention mechanism works like a memory: each token stores a key and a value, and later tokens retrieve information by comparing their query against every stored key. The drawback is cost. Because every token is compared with every earlier token, the cost of attention grows quadratically with input length, which makes very long inputs impractical for real-world tasks such as long-context language modeling and video understanding.
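To make the "attention as memory" idea concrete, here is a minimal sketch in plain Python. It assumes a single attention head with no learned projections, and `attention_read` is a hypothetical helper name, not part of any library. Each stored token contributes a key and a value, and a query retrieves a weighted mix of the values according to how well it matches each key.

```python
import math


def softmax(scores):
    # Subtract the maximum before exponentiating for numerical stability.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_read(query, keys, values):
    """Read from a key-value 'memory' with scaled dot-product attention.

    The query is compared with every stored key, so one read touches all
    n stored tokens; doing this for every token in a sequence costs O(n^2).
    """
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    return [sum(w * value[j] for w, value in zip(weights, values)) for j in range(dim)]


# Two stored tokens: key [1, 0] holds value [10, 0], key [0, 1] holds [0, 10].
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]

# A query that resembles the first key mostly retrieves the first value.
out = attention_read([5.0, 0.0], keys, values)
print(out)
```

Because the softmax weights always sum to 1, the result is a blend of stored values rather than an exact lookup; the quadratic cost comes from repeating this full scan for every token.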

Addressing Computational Challenges

Researchers have pursued three main strategies to make Transformer-style models more efficient on long inputs:

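- Linear Recurrent Models: These replace softmax attention with a recurrence over a fixed-size state, giving efficient training and constant-memory inference. Recent designs improve on earlier ones with mechanisms such as gating, which controls what the state keeps and what it forgets.

- Optimized Transformer Architectures: These reduce the cost of attention itself, for example with sparse or sliding-window attention.

- Memory-Augmented Models: These add an explicit, persistent memory to the model, but a fixed-size memory can overflow: once it is full, new information overwrites or blurs what was stored earlier.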
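Linear recurrent models avoid the quadratic cost by compressing the history into a fixed-size state. The sketch below is a simplified linear-attention-style recurrence (an assumption: no normalization and no gating), and the function names are hypothetical. It shows both the benefit, a state whose size never grows, and the overflow problem that limits fixed-size memories: a small matrix can hold only a few non-interfering associations.

```python
def new_state(d_v, d_k):
    # The recurrent state is a d_v x d_k matrix; its size never grows with the input.
    return [[0.0] * d_k for _ in range(d_v)]


def write(state, key, value):
    # Linear-attention-style update: state <- state + value * key^T (an outer product).
    for i, v in enumerate(value):
        for j, k in enumerate(key):
            state[i][j] += v * k


def read(state, query):
    # Retrieval is a matrix-vector product: state @ query.
    return [sum(s * q for s, q in zip(row, query)) for row in state]


state = new_state(2, 2)
write(state, [1.0, 0.0], [1.0, 0.0])
write(state, [0.0, 1.0], [0.0, 1.0])
before = read(state, [1.0, 0.0])  # exact recall: [1.0, 0.0]

# In 2 dimensions a third key cannot be orthogonal to both earlier keys,
# so writing it interferes with what the first key retrieves.
r = 1.0 / 2 ** 0.5
write(state, [r, r], [-1.0, 1.0])
after = read(state, [1.0, 0.0])  # roughly [0.29, 0.71] instead of [1.0, 0.0]
```

Each write and read costs the same no matter how long the sequence is, but once the state runs out of independent directions, new writes start corrupting old ones.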

Introducing the Titans Architecture

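Google researchers have developed a neural long-term memory module that complements attention. The module learns which information to store while the model is running, so attention can draw on historical context beyond its immediate window, and the design still allows fast, parallelizable training. The Titans architecture includes: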

- Core Module: Uses attention for short-term memory.

- Long-term Memory Branch: Stores historical information.

- Persistent Memory Component: Contains learnable, input-independent parameters that encode knowledge about the task itself rather than about any particular input.
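The way the three components fit together can be sketched for a single segment of input. This loosely follows the "memory as a context" arrangement described in the Titans paper, where attention runs over persistent tokens, tokens retrieved from long-term memory, and the current segment. It is a simplified illustration under stated assumptions (no learned projections, no causal mask, a single matrix standing in for the memory, and no memory update step), not the paper's implementation; all names and values are hypothetical.

```python
import math


def softmax(scores):
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(queries, context):
    # Single-head attention; each context vector is used as both key and value
    # (no learned projections and no causal mask, for brevity).
    scale = math.sqrt(len(context[0]))
    outputs = []
    for q in queries:
        weights = softmax([sum(a * b for a, b in zip(q, c)) / scale for c in context])
        outputs.append([sum(w * c[j] for w, c in zip(weights, context)) for j in range(len(q))])
    return outputs


def memory_read(memory, query):
    # Long-term memory modeled here as a single matrix: retrieval = memory @ query.
    return [sum(m * q for m, q in zip(row, query)) for row in memory]


def process_segment(segment, persistent, memory):
    """Combine the three components for one segment of the input.

    persistent: learned, input-independent tokens (fixed after training)
    memory:     long-term memory state, queried with the current segment
    segment:    the current window of tokens, handled by attention (short-term memory)
    """
    retrieved = [memory_read(memory, x) for x in segment]
    context = persistent + retrieved + segment
    return attend(segment, context), len(context)


persistent = [[0.5, -0.5], [0.1, 0.2]]  # hypothetical stand-ins for learned tokens
memory = [[1.0, 0.0], [0.0, 1.0]]       # hypothetical memory state
segment = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, context_len = process_segment(segment, persistent, memory)
print(context_len)  # 2 persistent + 3 retrieved + 3 segment tokens = 8
```

The attention window stays the size of one segment plus a fixed number of extra tokens, while information from earlier segments reaches it only through the memory readout.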

Performance and Advantages

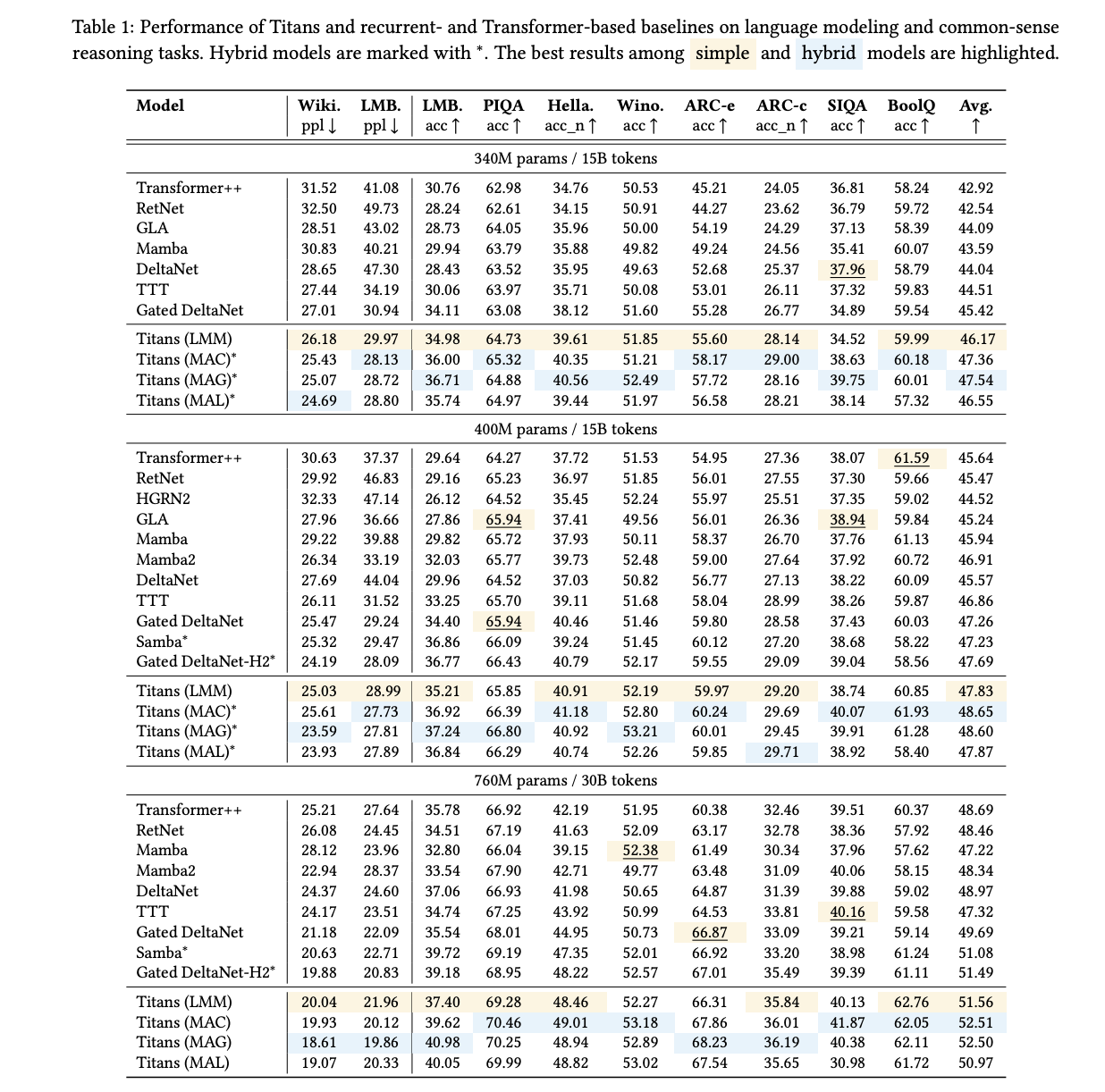

In the paper's experiments, Titans performs strongly across its different configurations. It is particularly effective on long sequences, where it outperforms existing hybrid models. Key advantages include:

- Efficient Memory Management: Handles extensive data without overflow.

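- Deep Non-linear Memory Capabilities: The long-term memory is itself a multi-layer neural network rather than a single matrix, which lets it capture more complex relationships in the data.

- Effective Memory Erasure Functionality: A learned forgetting mechanism discards information that is no longer useful, freeing capacity so that relevant information is retained.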
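The memory update behind these advantages can be sketched as follows, based on the paper's description: the "surprise" of an input is the gradient of an associative-memory loss, a momentum term carries surprise forward across tokens, and a forgetting gate `alpha` decays old content (the erasure mechanism). Two simplifying assumptions apply: the memory here is a single linear map so the gradient can be written by hand, whereas the paper's memory is a deeper network; and `theta`, `eta`, and `alpha` are fixed constants rather than values the model computes from each input. Function names are hypothetical.

```python
def matvec(M, x):
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


def update_memory(M, S, key, value, theta, eta, alpha):
    """One test-time update of a linear associative memory M (simplified sketch).

    loss      = ||M key - value||^2          (how badly M recalls this pair)
    surprise  = d loss / dM = 2 (M key - value) key^T
    S_new     = eta * S - theta * surprise    (momentum carries past surprise)
    M_new     = (1 - alpha) * M + S_new       (alpha acts as a forgetting gate)
    """
    err = [p - v for p, v in zip(matvec(M, key), value)]
    rows, cols = len(M), len(M[0])
    S_new = [[eta * S[i][j] - theta * 2.0 * err[i] * key[j] for j in range(cols)]
             for i in range(rows)]
    M_new = [[(1.0 - alpha) * M[i][j] + S_new[i][j] for j in range(cols)]
             for i in range(rows)]
    return M_new, S_new


def zeros(rows, cols):
    return [[0.0] * cols for _ in range(rows)]


# Memorize key [1, 0] -> value [2, 0] by repeating the update.
M, S = zeros(2, 2), zeros(2, 2)
for _ in range(200):
    M, S = update_memory(M, S, [1.0, 0.0], [2.0, 0.0], theta=0.1, eta=0.9, alpha=0.0)
recalled = matvec(M, [1.0, 0.0])

# Forgetting: an input the memory already predicts (zero surprise)
# still shrinks the stored weights by the factor (1 - alpha).
M2, _ = update_memory([[1.0, 0.0], [0.0, 0.0]], zeros(2, 2), [0.0, 1.0], [0.0, 0.0],
                      theta=0.1, eta=0.9, alpha=0.5)
```

Large errors produce large gradients and therefore large writes, while well-predicted inputs change little; a nonzero `alpha` gradually clears space regardless of surprise.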

Significance of the Titans System

This approach lets Titans scale to context windows larger than 2 million tokens while maintaining high accuracy, including on needle-in-a-haystack retrieval tasks. It marks a significant advance in sequence modeling and opens new avenues for tackling complex long-context tasks.
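A rough count of attention scores shows why splitting the input into segments matters at this scale. The segment length of 512 and the 576 extra context tokens per segment (for example, 64 persistent tokens plus 512 retrieved-memory tokens) are illustrative assumptions, not values from the paper, and the count ignores the cost of memory reads and updates, which grows only linearly with length. Function names are hypothetical.

```python
def causal_scores(n):
    # Each token attends to itself and every earlier token: n(n+1)/2 scores.
    return n * (n + 1) // 2


def segmented_scores(n, segment_len, extra_tokens):
    # Hypothetical scheme: attention is causal within each segment, and every
    # token also attends to a fixed number of extra tokens (persistent + memory).
    full_segments, rest = divmod(n, segment_len)
    per_segment = causal_scores(segment_len) + segment_len * extra_tokens
    last = causal_scores(rest) + rest * extra_tokens
    return full_segments * per_segment + last


n = 2_000_000
full = causal_scores(n)  # 2_000_001_000_000, roughly 2 * 10**12
seg = segmented_scores(n, segment_len=512, extra_tokens=576)
print(f"full attention: {full:.2e} scores")
print(f"segmented:      {seg:.2e} scores ({full / seg:.0f}x fewer)")
```

Doubling `n` roughly doubles the segmented count but roughly quadruples the full count, which is the linear-versus-quadratic gap that makes multi-million-token inputs feasible.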

Get Involved

Check out the research paper for more details, and stay connected on our social media platforms for updates and insights. If you’re interested in leveraging AI for your business, consider exploring the following steps:

- Identify Automation Opportunities: Find areas in customer interactions that can benefit from AI.

- Define KPIs: Ensure your AI efforts yield measurable business outcomes.

- Select an AI Solution: Choose tools that meet your specific needs.

- Implement Gradually: Start small, collect data, and expand wisely.

For more information, reach out to us at hello@itinai.com and follow our channels for continuous updates.