Google AI Announces Scaling LLM Test-Time Compute Optimally Can Be More Effective Than Scaling Model Parameters

Overview

Researchers are exploring ways to enable large language models (LLMs) to think longer on difficult problems, similar to human cognition. This could lead to new avenues in agentic and reasoning tasks, enable smaller on-device models to replace datacenter-scale LLMs, and provide a path toward general self-improvement algorithms with reduced human supervision.

Practical Solutions and Value

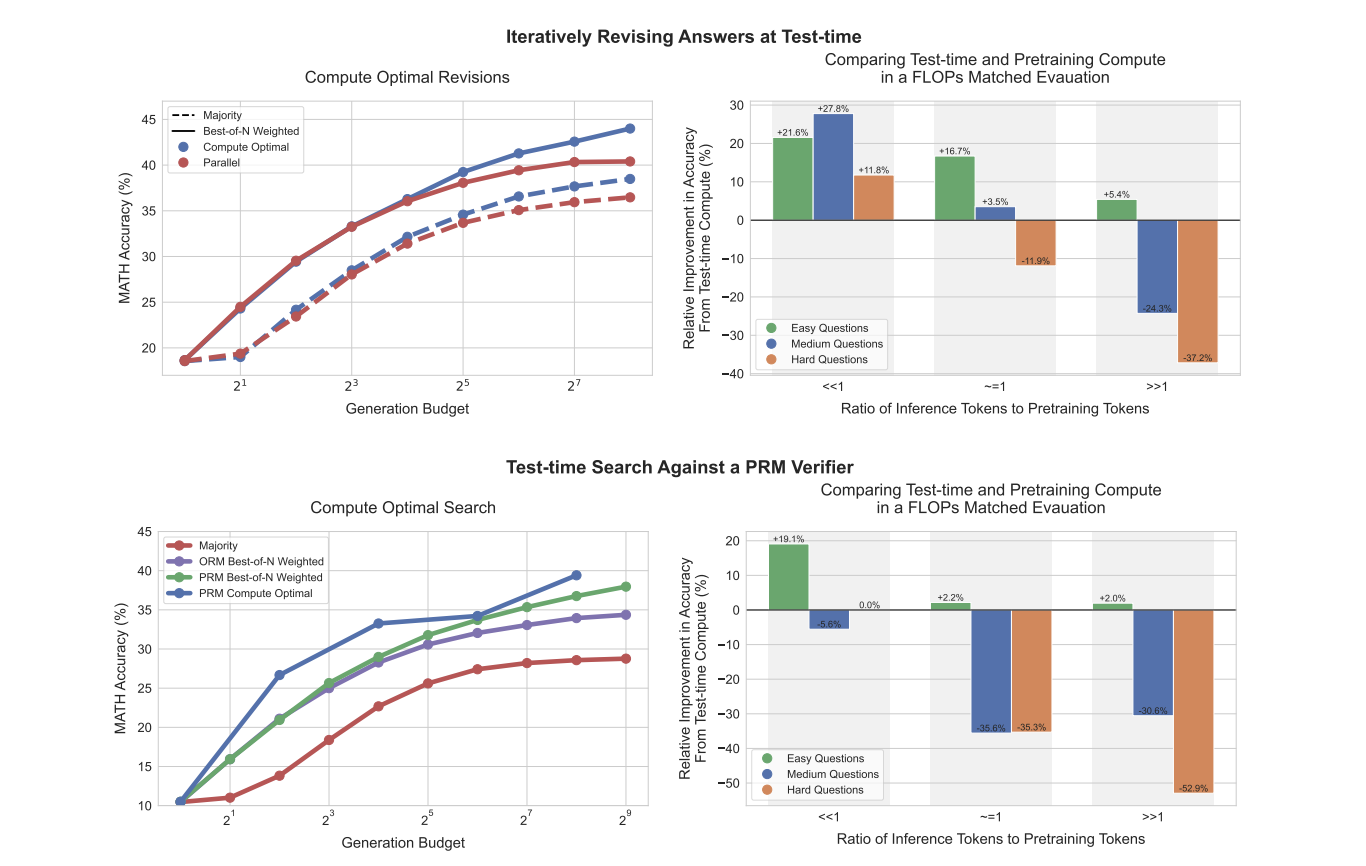

Researchers have improved language model performance on mathematical reasoning tasks through several approaches, such as continued pretraining on math-focused data and using finetuned verifiers so that LLMs can benefit from additional test-time computation. The adaptive “compute-optimal” strategy for scaling test-time compute in LLMs goes further, surpassing best-of-N baselines while using approximately 4 times less computation for both revision and search methods.
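To make the best-of-N baseline concrete, here is a minimal sketch in Python. It assumes a generic setup in which a model samples N candidate answers and a verifier scores each one; the names `best_of_n`, `toy_generate`, and `toy_score` are hypothetical stand-ins for illustration and are not taken from the study.

```python
import random
from typing import Callable, List, Tuple


def best_of_n(
    question: str,
    generate: Callable[[str, random.Random], str],
    score: Callable[[str, str], float],
    n: int,
    seed: int = 0,
) -> Tuple[str, float]:
    """Sample n candidate answers and return the one the verifier scores highest."""
    if n < 1:
        raise ValueError("n must be at least 1")
    rng = random.Random(seed)
    candidates: List[str] = [generate(question, rng) for _ in range(n)]
    scored = [(score(question, c), c) for c in candidates]
    best_score, best_answer = max(scored, key=lambda pair: pair[0])
    return best_answer, best_score


# Toy stand-ins (assumptions): the "model" guesses an integer and the
# "verifier" prefers guesses close to 42. A real system would call an LLM
# and a finetuned verifier here.
def toy_generate(question: str, rng: random.Random) -> str:
    return str(rng.randint(0, 100))


def toy_score(question: str, answer: str) -> float:
    return -abs(int(answer) - 42)


if __name__ == "__main__":
    for n in (1, 4, 16):
        answer, verifier_score = best_of_n("What is 6 * 7?", toy_generate, toy_score, n)
        print(n, answer, verifier_score)
```

Because the same seed is reused, a larger N only adds candidates to the pool, so the best verifier score never gets worse as N grows. The cost grows linearly with N, which is the budget the compute-optimal strategy tries to spend more carefully.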

The study shows why adaptivity matters when scaling test-time compute in LLMs. By predicting how effective test-time computation will be from a question's difficulty, the researchers implemented a practical strategy that chooses how to spend compute per question, which is how it outperformed best-of-N baselines with about 4x less computation. The findings suggest a possible shift toward allocating fewer FLOPs to pretraining and more to inference in the future, highlighting the evolving landscape of LLM optimization and deployment.
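One way to picture a difficulty-adaptive strategy is as a lookup table fitted on validation data: for each difficulty bin, keep whichever test-time method worked best at a fixed compute budget. The sketch below is an illustration under stated assumptions, not the study's implementation. The number of bins, the use of an estimated pass rate as the difficulty signal, the function names, and the toy records are all hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# One validation outcome: (difficulty_bin, strategy_name, was_correct).
# All strategies are assumed to have been run at the same compute budget.
Record = Tuple[int, str, bool]


def fit_policy(records: Iterable[Record]) -> Dict[int, str]:
    """For each difficulty bin, pick the strategy with the highest accuracy."""
    totals = defaultdict(lambda: [0, 0])  # (bin, strategy) -> [correct, seen]
    for bin_id, strategy, correct in records:
        stats = totals[(bin_id, strategy)]
        stats[0] += int(correct)
        stats[1] += 1
    best: Dict[int, Tuple[float, str]] = {}
    for (bin_id, strategy), (n_correct, n_seen) in totals.items():
        accuracy = n_correct / n_seen
        # Strict comparison: on a tie, the strategy seen first is kept.
        if bin_id not in best or accuracy > best[bin_id][0]:
            best[bin_id] = (accuracy, strategy)
    return {bin_id: strategy for bin_id, (_, strategy) in best.items()}


def difficulty_bin(pass_rate: float, num_bins: int = 5) -> int:
    """Map an estimated pass rate in [0, 1] to a bin (0 easiest, num_bins - 1 hardest)."""
    if not 0.0 <= pass_rate <= 1.0:
        raise ValueError("pass_rate must be in [0, 1]")
    return min(int((1.0 - pass_rate) * num_bins), num_bins - 1)


# Made-up validation outcomes, for illustration only.
records = [
    (0, "revisions", True), (0, "revisions", True),
    (0, "search", True), (0, "search", False),
    (4, "revisions", False), (4, "revisions", False),
    (4, "search", True), (4, "search", False),
]
policy = fit_policy(records)

if __name__ == "__main__":
    print(policy)
    print(policy[difficulty_bin(1.0)], policy[difficulty_bin(0.0)])
```

At inference time, a question's difficulty would be estimated first (for example from a few samples and a verifier), and the matching entry in `policy` decides which method gets the compute budget.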
