Practical Solutions for Running Large Language Models on Commodity Hardware

Deploying advanced machine learning models on resource-constrained hardware, such as edge devices, mobile platforms, and low-power boards, has been challenging because of the compute and memory these models require. This has limited real-time applications and increased latency, particularly for smaller organizations and individuals.

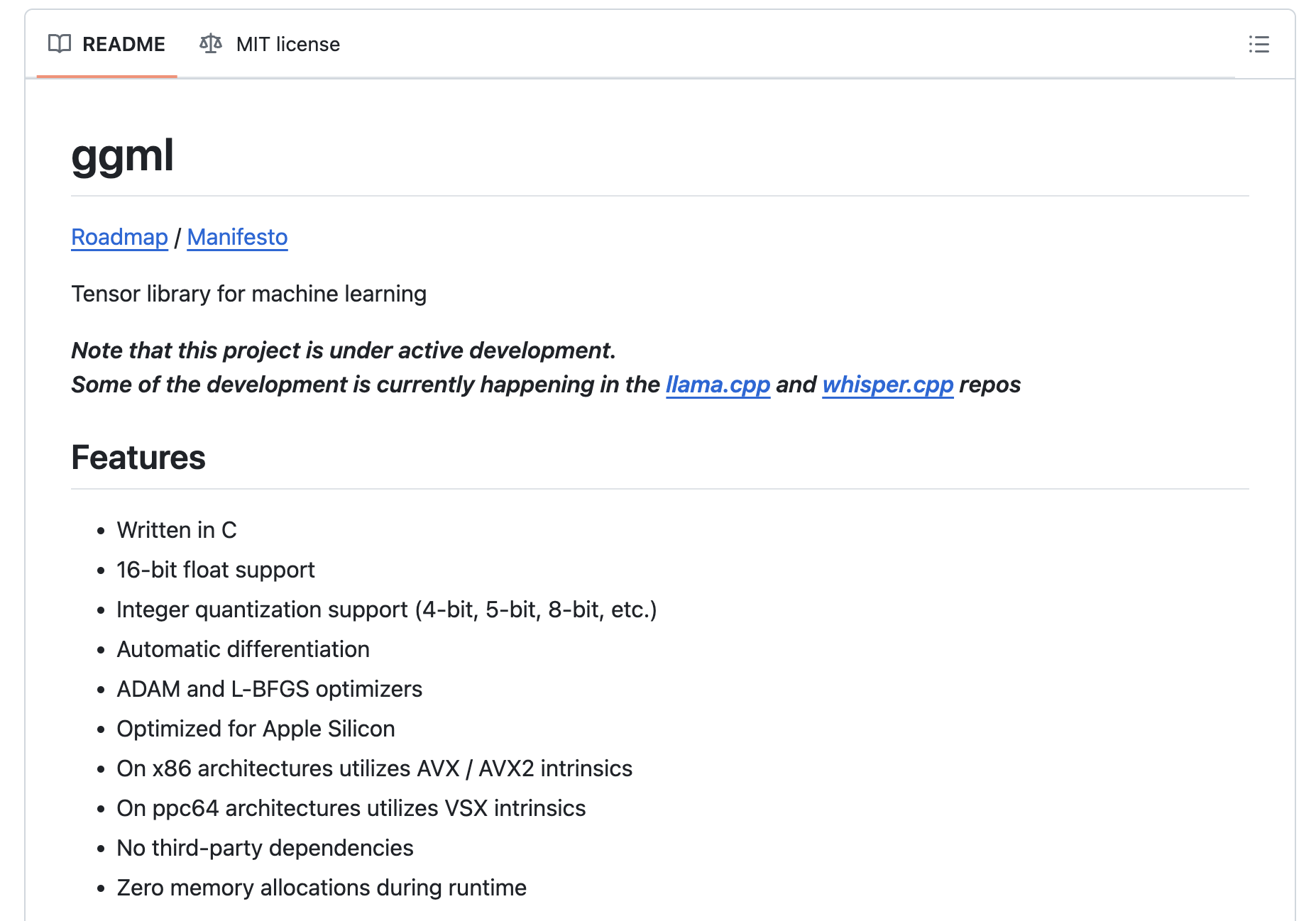

Introducing ggml: A High-Performance Tensor Library

ggml is a lightweight and high-performance tensor library designed to enable the efficient execution of large language models on commodity hardware. It optimizes computations and memory usage, making these models accessible across various platforms, including CPUs, GPUs, and WebAssembly.

Key Innovations of ggml

ggml’s data structures and computational optimizations are designed to minimize memory access and computational overhead. Kernel fusion reduces function call overhead, and ggml makes use of the parallel computation capabilities of contemporary processors. ggml also employs quantization, which stores model weights at lower precision to reduce model size and improve inference times, typically at the cost of only a small loss in accuracy.
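To make the quantization idea concrete, here is a minimal sketch of block-wise 4-bit quantization in plain Python. It is an illustration only, not ggml's actual storage format: the block size of 32, the symmetric scaling scheme, and all function names are assumptions chosen for this example.

```python
import random

BLOCK_SIZE = 32  # assumed block length for this sketch, not a ggml constant


def quantize_block(values):
    """Map one block of floats to signed 4-bit integers plus a single scale."""
    max_abs = max(abs(v) for v in values)
    # Scale so the largest magnitude lands on +/-7; avoid dividing by zero.
    scale = max_abs / 7.0 if max_abs > 0 else 1.0
    quants = [max(-8, min(7, round(v / scale))) for v in values]
    return scale, quants


def dequantize_block(scale, quants):
    """Recover approximate floats from the stored integers and scale."""
    return [q * scale for q in quants]


def quantize(weights):
    """Split a flat list of weights into blocks and quantize each one."""
    return [
        quantize_block(weights[start:start + BLOCK_SIZE])
        for start in range(0, len(weights), BLOCK_SIZE)
    ]


def dequantize(blocks):
    """Concatenate the dequantized blocks back into one flat list."""
    restored = []
    for scale, quants in blocks:
        restored.extend(dequantize_block(scale, quants))
    return restored


if __name__ == "__main__":
    random.seed(0)
    weights = [random.uniform(-1.0, 1.0) for _ in range(128)]
    restored = dequantize(quantize(weights))
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print(f"max absolute error: {max_err:.4f}")
```

Each block stores one floating-point scale and 32 small integers instead of 32 full-precision floats. The rounding error per weight is bounded by half the block's scale, which is why keeping blocks small limits the accuracy loss.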

Benefits of ggml

ggml targets low latency, high throughput, and low memory usage, which makes it possible to run large language models on devices such as the Raspberry Pi, smartphones, and laptops. This overcomes previous limitations and paves the way for broader deployment of advanced machine learning models across a wide range of environments.
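A quick back-of-the-envelope calculation shows why lower-precision weights matter on small devices. The sketch below estimates weight storage only; the 7-billion-parameter model is a hypothetical example, and the estimate ignores per-block scales, activations, and runtime buffers.

```python
def model_size_gib(num_params, bits_per_weight):
    """Approximate weight storage in GiB for a given precision."""
    return num_params * bits_per_weight / 8 / 2**30


if __name__ == "__main__":
    params = 7_000_000_000  # hypothetical model size, chosen for illustration
    for label, bits in [("16-bit float", 16), ("8-bit", 8), ("4-bit", 4)]:
        print(f"{label:>12}: {model_size_gib(params, bits):.1f} GiB")
```

Under these assumptions the weights take roughly 13 GiB at 16 bits and roughly 3.3 GiB at 4 bits, which is the difference between exceeding and fitting within the memory of a typical laptop or a high-end single-board computer.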

Value of ggml in AI Evolution

ggml represents a significant advance: by reducing the computational resources that inference requires, it makes it possible to run powerful models on resource-constrained devices.
