Google DeepMind Unveils Gemma 2 2B: Advanced AI Model

Enhanced Text Generation and Safety Features

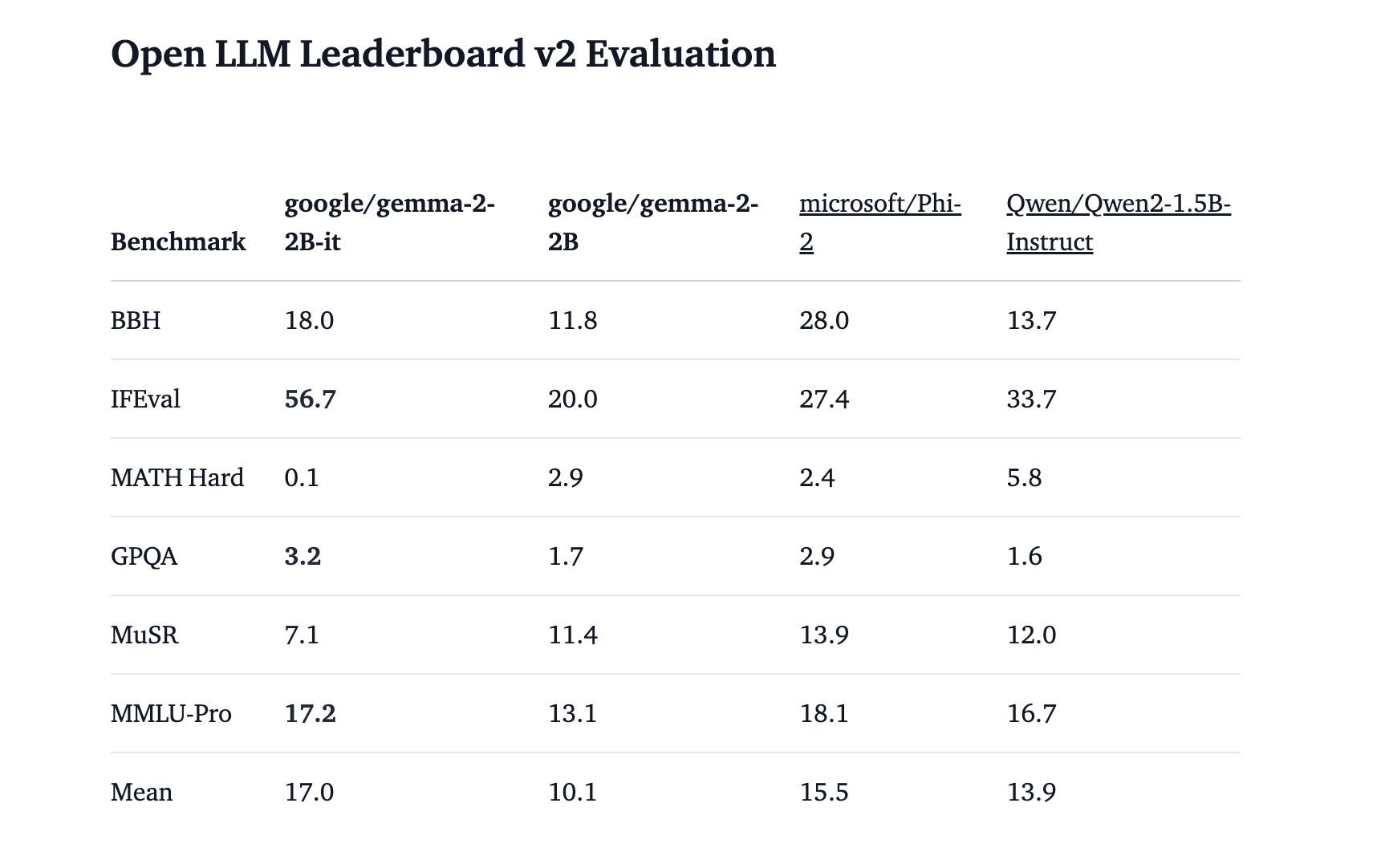

Google DeepMind has introduced Gemma 2 2B, a 2.6-billion-parameter language model designed to combine strong performance with efficiency in both product and research settings.

The Gemma 2 family of large language models adds architectural features such as sliding window attention and logit soft-capping, which aim to improve the efficiency and quality of text generation.
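Both techniques are simple to state in code. The following is a minimal sketch, not Gemma's actual implementation: soft-capping squashes each logit into the range (-cap, cap) with a scaled tanh, and a sliding window mask lets each position attend only to itself and a fixed number of preceding positions. The function names, the cap of 30.0, and the window of 3 are illustrative assumptions.

```python
import math


def soft_cap(logits, cap):
    """Squash each logit smoothly into (-cap, cap) using cap * tanh(x / cap)."""
    return [cap * math.tanh(x / cap) for x in logits]


def sliding_window_mask(seq_len, window):
    """Causal mask: position i may attend to j only if i - window < j <= i."""
    return [
        [(j <= i) and (i - j < window) for j in range(seq_len)]
        for i in range(seq_len)
    ]


if __name__ == "__main__":
    # Large logits are pulled toward +/-cap; small ones are nearly unchanged.
    print(soft_cap([-100.0, 0.0, 5.0, 100.0], cap=30.0))

    # Each row shows which earlier positions a token can see ("x" = visible).
    for row in sliding_window_mask(seq_len=5, window=3):
        print("".join("x" if allowed else "." for allowed in row))
```

Because the window bounds how far back each token looks, attention cost per token stops growing once the sequence is longer than the window.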

Developers can integrate Gemma 2 2B into their applications through the Hugging Face ecosystem, which allows quick deployment for text generation, content creation, and conversational AI.
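As a rough illustration of that integration, the sketch below wraps the `transformers` text-generation pipeline in a small helper. It assumes the `transformers` library and a backend such as PyTorch are installed, that the instruction-tuned checkpoint is published under the id `google/gemma-2-2b-it`, and that the account in use has accepted the model's license on Hugging Face. The helper names `build_chat` and `generate_reply` are hypothetical.

```python
def build_chat(user_prompt):
    """Return a single-turn conversation in the role/content message format."""
    return [{"role": "user", "content": user_prompt}]


def generate_reply(user_prompt, model_id="google/gemma-2-2b-it", max_new_tokens=128):
    """Generate one reply with a Hugging Face text-generation pipeline."""
    # Imported lazily so build_chat can be used without transformers installed.
    from transformers import pipeline

    # Assumption: model_id points at a chat-capable checkpoint you can access.
    generator = pipeline("text-generation", model=model_id)
    result = generator(build_chat(user_prompt), max_new_tokens=max_new_tokens)

    # For chat-style input, generated_text holds the whole conversation;
    # the last message is the model's reply.
    return result[0]["generated_text"][-1]["content"]
```

Calling `generate_reply("Write a two-line poem about small models.")` downloads the weights on first use, so the first call can take a while.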

ShieldGemma, a series of safety classifiers, detects harmful content so that public-facing applications can filter it out, reflecting Google’s commitment to responsible AI deployment.

Gemma 2 2B also supports on-device deployment across various operating systems, making it accessible for personal projects and enterprise-level applications.

Assisted generation, in which a smaller model drafts tokens that a larger model then verifies, speeds up text generation without compromising quality. Gemma Scope, a suite of sparse autoencoders, helps researchers interpret and improve large language models.
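To see why assisted generation need not change the output, consider this toy sketch of the greedy variant. A cheap draft model guesses several tokens ahead, the target model checks them, and the first wrong guess is replaced by the target's own token. The two "models" here are made-up deterministic functions, not Gemma, and a real implementation would verify all guesses in one batched forward pass instead of a loop. In the `transformers` library, the feature is enabled by passing an `assistant_model` argument to `generate`.

```python
def greedy_decode(next_token, prompt, n_new):
    """Plain greedy decoding: one model call per generated token."""
    tokens = list(prompt)
    for _ in range(n_new):
        tokens.append(next_token(tokens))
    return tokens


def assisted_decode(target_next, draft_next, prompt, n_new, lookahead=4):
    """Greedy assisted decoding: the draft proposes, the target verifies."""
    tokens = list(prompt)
    goal = len(prompt) + n_new
    while len(tokens) < goal:
        # 1. The cheap draft model guesses up to `lookahead` tokens ahead.
        draft = list(tokens)
        for _ in range(min(lookahead, goal - len(tokens))):
            draft.append(draft_next(draft))
        # 2. The target checks each guess in order.
        for pos in range(len(tokens), len(draft)):
            expected = target_next(draft[:pos])
            if draft[pos] != expected:
                # 3. First mismatch: keep the target's token, drop the rest.
                tokens = draft[:pos] + [expected]
                break
        else:
            tokens = draft  # every guess was accepted
    return tokens


def target_next(tokens):
    # Toy "large" model: a deterministic function of the context.
    return (sum(tokens) * 31 + len(tokens)) % 7


def draft_next(tokens):
    # Toy "small" model: agrees with the target unless len(tokens) % 3 == 0.
    guess = target_next(tokens)
    return guess if len(tokens) % 3 else (guess + 1) % 7


if __name__ == "__main__":
    prompt = [1, 2]
    print(greedy_decode(target_next, prompt, 10))
    print(assisted_decode(target_next, draft_next, prompt, 10))
```

Every token that survives verification is one the target would have chosen itself, so the two printed sequences are identical. The speedup in practice comes from accepting several drafted tokens per target pass.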

The model’s versatility and support for various deployment scenarios make it suitable for natural language processing, content creation, and interactive AI applications.

Together, advanced text generation, on-device deployment, and enhanced safety features make Google DeepMind’s Gemma 2 2B a strong option among compact language models.