Practical Solutions and Value of Gated Slot Attention in AI

Revolutionizing Sequence Modeling with Gated Slot Attention

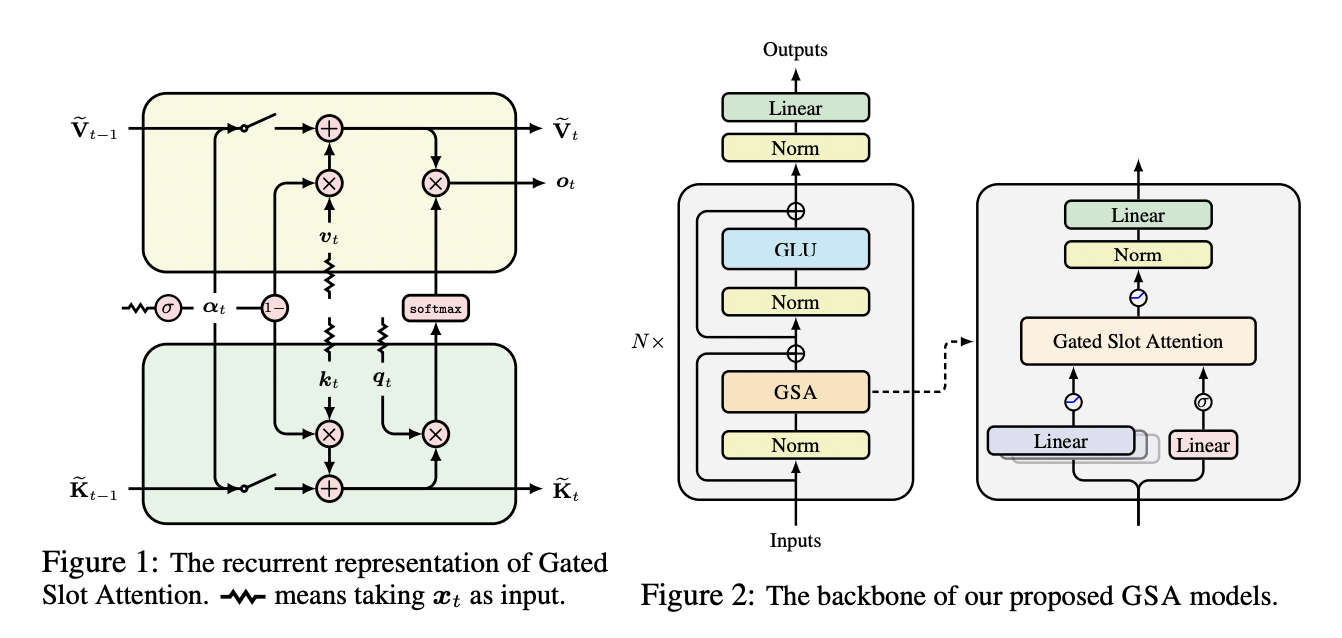

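Transformers have advanced sequence modeling, but standard softmax attention scales quadratically with sequence length, and its key-value cache grows with every token processed, which makes long sequences costly. Gated Slot Attention (GSA) targets this problem, offering more efficient processing for long-sequence domains such as video and biological data.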

Enhancing Efficiency with Linear Attention

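Linear attention models such as Gated Slot Attention replace the ever-growing attention context with a fixed-size recurrent state, so memory use at inference stays constant regardless of sequence length. This makes them more efficient on long-sequence tasks while still delivering strong performance.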
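To make the constant-memory idea concrete, here is a minimal sketch of generic causal linear attention (without normalization or gating) in plain Python. It is not GSA itself and not the authors' code; the function names and toy dimensions are assumptions chosen for illustration. The recurrent version keeps a single `d_k x d_v` state matrix and produces the same outputs as the version that revisits every past token.

```python
import random


def linear_attention_recurrent(qs, ks, vs):
    """Causal linear attention (unnormalized) using a fixed-size state."""
    d_k, d_v = len(ks[0]), len(vs[0])
    # The state is d_k x d_v no matter how many tokens have been processed.
    state = [[0.0] * d_v for _ in range(d_k)]
    outputs = []
    for q, k, v in zip(qs, ks, vs):
        # State update: S_t = S_{t-1} + k_t v_t^T
        for i in range(d_k):
            for j in range(d_v):
                state[i][j] += k[i] * v[j]
        # Readout: o_t = S_t^T q_t
        outputs.append(
            [sum(q[i] * state[i][j] for i in range(d_k)) for j in range(d_v)]
        )
    return outputs, state


def linear_attention_quadratic(qs, ks, vs):
    """Same result, computed by scoring each query against all past keys."""
    d_v = len(vs[0])
    outputs = []
    for t, q in enumerate(qs):
        out = [0.0] * d_v
        for k, v in zip(ks[: t + 1], vs[: t + 1]):
            score = sum(a * b for a, b in zip(q, k))
            for j in range(d_v):
                out[j] += score * v[j]
        outputs.append(out)
    return outputs


def random_vectors(n, d, rng):
    return [[rng.uniform(-1.0, 1.0) for _ in range(d)] for _ in range(n)]


rng = random.Random(0)
seq_len, d_k, d_v = 16, 4, 3  # toy sizes, chosen arbitrarily
qs = random_vectors(seq_len, d_k, rng)
ks = random_vectors(seq_len, d_k, rng)
vs = random_vectors(seq_len, d_v, rng)

rec_out, final_state = linear_attention_recurrent(qs, ks, vs)
quad_out = linear_attention_quadratic(qs, ks, vs)

# Largest elementwise gap between the two formulations.
max_diff = max(
    abs(a - b) for r, s in zip(rec_out, quad_out) for a, b in zip(r, s)
)
print("outputs match:", max_diff < 1e-9)
print("state shape:", len(final_state), "x", len(final_state[0]))
```

The quadratic version does work proportional to the number of past tokens at every step, while the recurrent version touches only the fixed-size state, which is where the efficiency gain on long sequences comes from.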

Improving Language Processing with GSA

Gated Slot Attention is reported to outperform other models on language tasks and on in-context recall-intensive tasks, while remaining efficient.

Efficient Training and Inference

GSA balances efficiency and effectiveness by keeping its parameters under control, and achieves competitive results in both language modeling and recall-intensive tasks.

Advancing Linear Attention Models

Gated Slot Attention bridges the gap between linear attention models and traditional Transformers, offering a promising direction for high-performance language tasks.
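One way to picture how a gated, slot-based memory can sit between the two families is the sketch below. It is an illustrative approximation under stated assumptions, not the authors' implementation: the update rule, the helper `gated_slot_step`, and the fixed gate values in `alpha` are all hypothetical (in a trained model the gates would presumably be computed from the input by learned projections). A fixed number of slots is updated recurrently, as in linear attention, and read out with a softmax over the slots, as in Transformer attention.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def gated_slot_step(K_mem, V_mem, q, k, v, alpha):
    """One recurrent step over a fixed set of memory slots (updates in place).

    K_mem, V_mem: lists of slot vectors (one key row and one value row per slot).
    alpha: per-slot forget gates in (0, 1); assumed fixed here for illustration.
    """
    for i, a in enumerate(alpha):
        # Each slot keeps a fraction `a` of its old content and
        # writes a fraction (1 - a) of the new key/value.
        K_mem[i] = [a * old + (1.0 - a) * new for old, new in zip(K_mem[i], k)]
        V_mem[i] = [a * old + (1.0 - a) * new for old, new in zip(V_mem[i], v)]
    # Read: softmax attention over the slots instead of over all past tokens.
    scores = [sum(x * y for x, y in zip(row, q)) for row in K_mem]
    weights = softmax(scores)
    out = [
        sum(w * V_mem[i][j] for i, w in enumerate(weights))
        for j in range(len(v))
    ]
    return out, weights


num_slots, dim = 2, 2  # toy sizes, chosen arbitrarily
K_mem = [[0.0] * dim for _ in range(num_slots)]
V_mem = [[0.0] * dim for _ in range(num_slots)]
alpha = [0.9, 0.5]  # hypothetical gates: slot 0 forgets slowly, slot 1 quickly

# Each token is a (query, key, value) triple of toy vectors.
tokens = [
    ([1.0, 0.0], [1.0, 0.0], [1.0, 2.0]),
    ([0.0, 1.0], [0.0, 1.0], [3.0, 4.0]),
    ([1.0, 1.0], [1.0, 1.0], [5.0, 6.0]),
]

for q, k, v in tokens:
    out, weights = gated_slot_step(K_mem, V_mem, q, k, v, alpha)

print("last output:", out)
print("slot weights:", weights)
```

However many tokens are processed, the memory stays at `num_slots` rows per matrix, so the number of slots is the knob that trades memory footprint against recall capacity in this sketch.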

For more information, see the paper and its GitHub repository.