Understanding Retrieval-Augmented Generation (RAG)

Retrieval-Augmented Generation (RAG) enhances large language models (LLMs) by supplying them with external knowledge at inference time. It consists of two main parts:

- Retrieval Module: Finds relevant external information.

- Generation Module: Uses this information to create accurate responses.

This method is especially useful for open-domain question-answering (QA), allowing models to provide more informed and precise answers by accessing large external datasets.
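The two modules above can be sketched in a few lines. This is a toy illustration, not how any particular RAG system is built: the term-overlap scorer stands in for a real retriever, and the names `retrieve` and `build_prompt` are hypothetical. The generation step is left as a prompt that would be passed to an LLM.

```python
from collections import Counter


def tokenize(text):
    # Naive whitespace tokenizer; real systems use proper tokenization.
    return text.lower().split()


def retrieve(query, corpus, k=2):
    """Retrieval module (toy): rank passages by term overlap with the query."""
    q = Counter(tokenize(query))
    scored = []
    for passage in corpus:
        p = Counter(tokenize(passage))
        overlap = sum(min(q[t], p[t]) for t in q)
        scored.append((overlap, passage))
    # Stable sort: ties keep their original corpus order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [passage for score, passage in scored[:k] if score > 0]


def build_prompt(query, passages):
    """Generation module input: the query plus the retrieved context."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


corpus = [
    "Paris is the capital of France",
    "The Nile is a river in Africa",
    "France borders Spain",
]
passages = retrieve("capital of France", corpus)
prompt = build_prompt("capital of France", passages)
```

In a full system, `prompt` would be sent to the language model, which grounds its answer in the retrieved passages.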

Challenges in Existing Retrieval Systems

Current retrieval systems face several challenges:

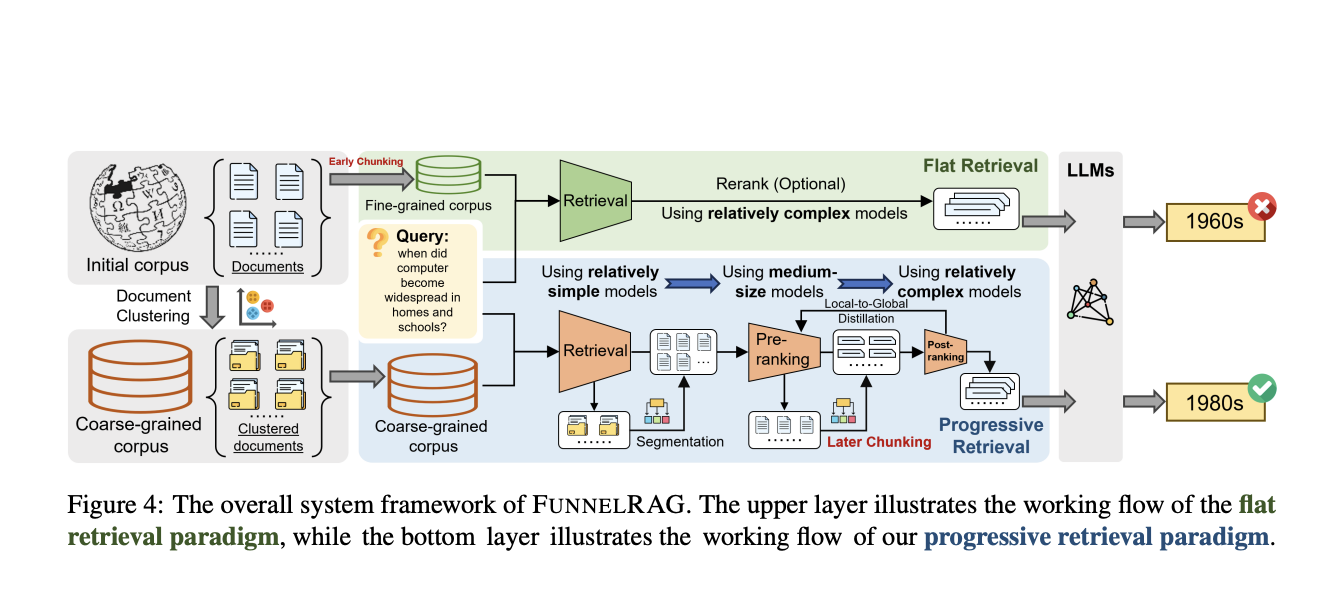

- Flat Retrieval Paradigm: Treats retrieval as a single step, with no intermediate narrowing of the candidate set.

- High Computational Burden: A single retriever must process millions of candidates at once.

- Limited Refinement: The granularity of the retrieved units stays constant throughout, which limits accuracy.

Introducing FunnelRAG

Researchers from the Harbin Institute of Technology and Peking University developed FunnelRAG, a new retrieval framework that improves efficiency and accuracy by refining data in stages:

- Stage 1: Large-scale retrieval with sparse retrievers, reducing the search space from millions of candidates to about 600,000 clusters.

- Stage 2: Pre-ranking with more advanced models, which narrows the retrieved clusters down to units of about 1,000 tokens.

- Stage 3: Post-ranking, in which documents are segmented into short passages for final retrieval.

This coarse-to-fine approach balances efficiency and accuracy, ensuring relevant information is retrieved without unnecessary computational load.
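The staged narrowing described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the two scoring functions are simple stand-ins for a sparse retriever and a stronger reranker, and every name and cutoff here (`funnel_retrieve`, `k_clusters`, `passage_words`, and so on) is hypothetical.

```python
from collections import Counter


def overlap_score(query, text):
    # Cheap scorer (stand-in for a sparse retriever): shared term count.
    q = Counter(query.lower().split())
    t = Counter(text.lower().split())
    return sum(min(q[w], t[w]) for w in q)


def density_score(query, text):
    # Stand-in for a stronger reranker: overlap normalized by text length.
    return overlap_score(query, text) / max(len(text.split()), 1)


def top_k(items, score_fn, k):
    # sorted() is stable, so ties keep their original order.
    return sorted(items, key=score_fn, reverse=True)[:k]


def split_passages(doc, size):
    # Cut a document into consecutive passages of at most `size` words.
    words = doc.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def funnel_retrieve(query, clusters, k_clusters=2, k_docs=2,
                    k_passages=1, passage_words=8):
    # Stage 1: cheap scoring over coarse units (each cluster is a list of docs).
    kept = top_k(clusters, lambda c: overlap_score(query, " ".join(c)), k_clusters)
    # Stage 2: stronger scoring over documents in the surviving clusters only.
    docs = [d for c in kept for d in c]
    docs = top_k(docs, lambda d: density_score(query, d), k_docs)
    # Stage 3: split surviving documents into short passages and rank those.
    passages = [p for d in docs for p in split_passages(d, passage_words)]
    return top_k(passages, lambda p: density_score(query, p), k_passages)


clusters = [
    ["the eiffel tower is in paris",
     "paris is the capital of france and a major european city"],
    ["bananas are yellow", "apples grow on trees"],
    ["the nile flows through egypt", "egypt is in africa"],
]
result = funnel_retrieve("capital of france", clusters)
```

The point of the design is that each stage sees fewer candidates at a finer granularity, so the more expensive scorer never runs over the full collection.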

Performance Benefits of FunnelRAG

FunnelRAG has shown significant improvements:

- Time Efficiency: Reduced retrieval time by nearly 40% compared to flat methods.

- High Recall Rates: Achieved 75.22% and 80.00% recall on the Natural Questions and TriviaQA datasets, respectively.

- Reduced Candidate Pool: Cut the candidate pool from 21 million to 600,000 clusters while maintaining accuracy.
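To make these figures concrete, here is a minimal sketch of how recall is commonly computed for open-domain QA retrieval. It assumes a question counts as a hit when any retrieved passage contains one of its gold answer strings; the article does not spell out the exact metric, so treat that definition, the function name `answer_recall`, and the toy data as illustrative. The last lines work out the reduction factor implied by the 21 million and 600,000 figures above.

```python
def answer_recall(retrieved, answers):
    """Fraction of questions with at least one gold answer in the retrieved passages."""
    if not retrieved:
        return 0.0
    hits = 0
    for passages, golds in zip(retrieved, answers):
        text = " ".join(passages).lower()
        if any(g.lower() in text for g in golds):
            hits += 1
    return hits / len(retrieved)


# Toy data: one list of retrieved passages and one list of gold answers per question.
retrieved = [
    ["Paris is the capital of France"],
    ["The Nile is a river in Africa"],
]
answers = [["Paris"], ["Amazon"]]
recall = answer_recall(retrieved, answers)

# Candidate pool reduction implied by the reported figures.
reduction_factor = 21_000_000 / 600_000
remaining_share = 600_000 / 21_000_000  # share of the original pool left after Stage 1
```

With these numbers, the first stage leaves roughly 1 in 35 of the original candidates for the later, more expensive stages.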

Conclusion

FunnelRAG addresses the inefficiencies of flat retrieval systems, improving both retrieval efficiency and accuracy for large-scale open-domain QA tasks. Its coarse-to-fine design narrows the candidate pool at each stage, so the more advanced models only need to process a small, relevant set of candidates.


